Contacts with SUN/ORACLE for Hardware Problems

This page is OBSOLETE because, apart from our worker nodes (WNs), all the SUN hardware is out of warranty.

To still get statistics about the swapped broken 1 TB disks, run this pipeline as root on t3nagios:

```shell
# List host, date and text of every Nagios comment containing "zpool replace data1".
grep -B 11 "replace data1" /opt/nagios-3.2.3/var/log/nagios/status.dat \
  | grep -e "zpool replace data1" -e entry_time -e host_name \
  | awk '{ key = $0; sub(/=.*/, "", key); val = $0; sub(/^[^=]*=/, "", val) }
      key ~ /entry_time/ { cmd = "date -d @" val; cmd | getline when; close(cmd); printf "%s ", when; next }
      val ~ /zpool replace data1/ { print val; next }
      { printf "%s ", val }'
```

Output:

```
t3fs11 Thu Aug 25 10:35:20 CEST 2011 changed the 2 broken disks, then I ran zpool replace data1 c3t7d0 && zpool replace data1 c2t1d0
t3fs09 Sun Aug 28 21:55:58 CEST 2011 Broken disk, Oracle SR 3-4385711041 created then $ zpool offline data1 c5t7d0 $ cfgadm -c unconfigure c5::dsk/c5t7d0 $ zpool replace data1 c5t7d0 c6t7d0
t3fs09 Mon Aug 29 14:35:04 CEST 2011 zpool replace data1 c5t7d0
t3fs09 Tue Nov 22 15:30:09 CET 2011 zpool replace data1 c3t0d0
t3fs11 Fri Nov 25 14:13:03 CET 2011 zpool replace data1 c6t0d0
t3fs07 Tue Nov 29 11:42:58 CET 2011 zpool replace data1 c2t3d0
t3fs07 Tue Nov 29 11:43:21 CET 2011 zpool replace data1 c2t3d0
t3fs09 Mon Jan 2 21:57:51 CET 2012 zpool replace data1 c5t5d0 c6t7d0
t3fs09 Tue Jan 3 14:18:09 CET 2012 zpool replace data1 c5t5d0
t3fs09 Tue Jan 3 14:28:50 CET 2012 zpool replace data1 c5t5d0
t3fs11 Mon Jan 16 11:57:18 CET 2012 zpool replace data1 c6t2d0
t3fs11 Wed Feb 1 12:16:20 CET 2012 zpool replace data1 c1t1d0
t3fs09 Tue Mar 27 10:29:48 CEST 2012 zpool replace data1 c1t6d0
t3fs09 Mon Apr 23 16:34:43 CEST 2012 zpool replace data1 c2t2d0
t3fs10 Tue Apr 24 10:30:59 CEST 2012 zpool replace data1 c2t1d0
t3fs09 Fri May 11 10:37:41 CEST 2012 zpool replace data1 c4t3d0
t3fs08 Mon May 14 13:10:47 CEST 2012 zpool replace data1 c2t3d0
t3fs08 Tue May 15 11:37:01 CEST 2012 zpool replace data1 c6t3d0
t3fs11 Thu May 17 10:10:26 CEST 2012 zpool replace data1 c4t2d0 c6t5d0
t3fs11 Thu May 17 10:16:57 CEST 2012 zpool replace data1 c4t2d0 c6t5d0
t3fs11 Fri May 18 09:58:10 CEST 2012 zpool replace data1 c4t2d0
t3fs09 Wed May 23 09:51:54 CEST 2012 zpool replace data1 c1t2d0
t3fs10 Thu May 31 17:45:33 CEST 2012 zpool replace data1 c5t7d0
t3fs11 Mon Jun 11 11:14:02 CEST 2012 zpool replace data1 c2t0d0
t3fs07 Mon Jun 11 11:14:46 CEST 2012 zpool replace data1 c5t2d0
t3fs07 Mon Jun 11 11:16:36 CEST 2012 zpool replace data1 c5t2d0
t3fs10 Thu Jun 21 13:32:27 CEST 2012 zpool replace data1 c1t5d0
t3fs09 Thu Jul 5 11:42:39 CEST 2012 zpool replace data1 c3t3d0
t3fs11 Thu Jul 5 13:20:03 CEST 2012 zpool replace data1 c1t3d0
t3fs09 Wed Jul 11 10:56:20 CEST 2012 zpool replace data1 c1t7d0
t3fs10 Thu Aug 23 11:45:57 CEST 2012 zpool replace data1 c1t3d0
t3fs09 Fri Aug 31 13:18:27 CEST 2012 zpool replace data1 c6t2d0
t3fs08 Wed Sep 5 10:50:43 CEST 2012 zpool replace data1 c2t7d0
t3fs09 Mon Sep 10 09:47:56 CEST 2012 zpool replace data1 c2t0d0
t3fs10 Tue Sep 11 10:55:41 CEST 2012 Because I have 2 broken disks in raid6 I'll rebuild the raid 1 by 1, so first disk: zpool replace data1 c5t4d0
t3fs10 Wed Sep 12 10:48:27 CEST 2012 today I rebuild the 2nd disk: zpool replace data1 c2t3d0
t3fs10 Wed Sep 19 10:31:19 CEST 2012 zpool replace data1 c4t7d0
t3fs11 Mon Sep 24 09:55:35 CEST 2012 zpool replace data1 c1t5d0
t3fs11 Tue Sep 25 12:06:41 CEST 2012 zpool replace data1 c2t7d0
t3fs08 Thu Sep 27 18:27:54 CEST 2012 zpool replace data1 c1t7d0 c6t6d0
t3fs08 Fri Sep 28 10:10:49 CEST 2012 zpool replace data1 c1t7d0
t3fs11 Mon Oct 8 11:09:11 CEST 2012 zpool replace data1 c2t6d0
t3fs08 Mon Oct 8 11:12:14 CEST 2012 zpool replace data1 c6t4d0
t3fs07 Thu Oct 11 11:11:03 CEST 2012 zpool replace data1 c1t6d0
```
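A possible next step is to turn the list above into per-server counts. The following is a minimal sketch, assuming the output format shown (host name, then the date as printed by `date`, then the free-text comment); the function name `count_replacements` is hypothetical. Because a few comments were entered twice within minutes (e.g. t3fs07 on Nov 29 2011), entries with the same host, device and calendar day are counted only once, and the device is taken to be the first word after `data1`, which is only a heuristic for these free-text comments.

```shell
# Hypothetical helper: count disk replacements per file server from the
# "host date... comment" lines produced by the status.dat pipeline.
count_replacements() {
  awk '
    {
      # Heuristic: the replaced device is the first word after "data1".
      dev = ""
      for (i = 8; i < NF; i++) if ($i == "data1") { dev = $(i + 1); break }
      # Fields 3, 4 and 7 are month, day and year of the date(1) output.
      key = $1 " " dev " " $3 " " $4 " " $7
      # Count each host/device/day combination only once.
      if (!(key in seen)) { seen[key] = 1; n[$1]++ }
    }
    END { for (h in n) print h, n[h] }
  ' | sort
}
```

Usage: append `| count_replacements` to the pipeline above.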

How to get in touch with ORACLE

For every case we need to provide:

- contract number
- serial number / software license
- site and contact (PSI Tier-3, your name, and preferably the list address cms-tier3@lists.psi.ch)
- hardware or software problem
- priority:
  - system down
  - system impaired
  - system operation normal
- case number

You may be asked to run SUN Explorer, a data collection utility from SUN (refer to this page for instructions).

The case number is the receipt for the service request. We need this key reference available for all questions and activities concerning this case.

When returning a replaced item to ORACLE through DHL, you should notify brulcscsunvas@dhl.com with the Waybill number (10-digit number on top of the DHL form); otherwise they will call one or two days later to ask whether the item has been returned.

Relevant Contract IDs

| Oracle support ID | System HW | Contract end date |
|---|---|---|
| 16990133 | Sun Blade 6000 system | 2012-12-16 |
| 16982009 | Sun Fire X4150 | 2011-05-27 |
| 16913881 | X4500 | 2011-04-27 |
| 16892755 | *Sun Storage 6140???*, Blade Servers X6270, X4540 | 2012-12-13 |

Sequence to follow for repair cases and the returning of parts through DHL

- If a technician has to come, ORACLE will usually ship a replacement part to PSI by DHL. Note that the DHL van usually arrives at PSI around noon, so there is no point in arranging a morning appointment with the ORACLE technician: the replacement part may not have arrived yet.
- If we can carry out the repair ourselves, we receive just the replacement parts. The broken parts need to be sent back by DHL:
  - Fill out the overcomplicated return tag, which is actually meant for ORACLE's own repair personnel (copy from the templates in the T3 folder).
  - Put the filled-out tag into the box together with the returned part.
  - On the outside of the box, put one of the "red return part stickers".
  - Fill out the DHL return form according to the templates in the T3 folder.
  - Note down the DHL Waybill number (the number on top of the DHL form).
  - Put the DHL return form and all copies into the transparent self-adhesive envelope and stick it onto the package. Do not seal the envelope, because our Warenannahme (goods receiving) needs to take out one of the copies (this copy is sent back to us when DHL collects the package and signs for it).
  - Send the DHL return package to the PSI "Warenannahme" by internal post first (this takes half a day). Order DHL to pick up the parcel at a time when it is already at the Warenannahme (I usually pick the next day).
  - Call DHL to tell them about the packet that will be ready for collection at that time (when asked about the address, be prepared to explain every time that PSI is a campus with its own postal code and no street name).
  - Notify brulcscsunvas@dhl.com with the Waybill number (10-digit number on top of the DHL form); otherwise they will call one or two days later to ask whether the item has been returned.

Individual Cases

CPU or DIMM failure on t3wn08

- Host Serial number: 0822QBR008

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 1 (system down).

- Case number: 38163150 (later 38177232)

- SUN technician: Oliver Mundt

ELOM log excerpt:


Severity Time Stamp Description

-------------------------------------------------------------------------------------------

Warning 1970/01/01 00:00:00 Power Supply 1 input out-of-range.but present assert

Nonrecoverable 2008/07/23 23:09:55 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:09:56 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:09:57 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:09:58 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:09:58 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:09:59 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:10:00 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:14:19 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:14:20 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:14:20 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:14:21 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:14:22 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:14:23 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:14:23 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:15:23 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/07/23 23:17:57 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:17:58 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:17:59 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:18:00 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:18:00 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:18:01 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:18:02 Fanbd1/FM2 device inserted/device present

Information 2008/07/23 23:18:07 CPU 0 IERR (internal error) return to normal

Nonrecoverable 2008/07/23 23:19:17 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/07/23 23:26:54 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:26:54 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:26:55 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:26:56 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:26:57 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:26:57 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:26:58 Fanbd1/FM2 device inserted/device present

Information 2008/07/23 23:27:04 CPU 0 IERR (internal error) return to normal

Nonrecoverable 2008/07/23 23:29:23 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:29:24 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:29:25 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:29:26 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:29:26 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:29:27 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:29:28 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:35:06 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:35:07 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:35:08 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/07/23 23:35:08 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/07/23 23:35:09 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/07/23 23:35:10 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/07/23 23:35:11 Fanbd1/FM2 device inserted/device present

Critical 2008/07/23 23:38:08 DIMM_D2 has memory ECC error

Nonrecoverable 2008/07/23 23:38:16 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/07/23 23:38:16 CPU 1 IERR (internal error) detected
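Most entries in these ELOM excerpts are fan-board presence events that recur in bursts, which makes the few CPU and DIMM events easy to miss. A minimal filter, assuming the log has been saved as plain text with one event per line as shown here (the function name `elom_relevant` is hypothetical):

```shell
# Hypothetical filter for a saved ELOM event log (one event per line).
# Drops the repetitive "FanbdN/FMn device inserted/device present" entries
# and blank lines, keeping everything else (CPU IERR, DIMM ECC, power supply, ...).
elom_relevant() {
  grep -v -e 'Fanbd[0-9]*/FM[0-9]* device inserted' -e '^[[:space:]]*$'
}
```

For example `elom_relevant < elom-t3wn08.txt`, where the file name is only a placeholder.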

Phone call with SUN on 2008-09-10

Oliver Mundt (who had tried to contact me on Sept 8th) called me. The problem indeed seems to be a broken DIMM. They will send a replacement.

Restart with replacement DIMM on 2008-09-24

Two replacement DIMMs had been sent on Sep 15th, but I was on holiday from the 16th to the 23rd. I installed the new DIMM in position D2 on Wed Sep 24th, and the ELOM event log no longer showed the DIMM/CPU-related errors. The machine booted correctly and I was able to install Linux, etc. A burn-in test still needs to be done.

Node crashes again with the same error on 2008-09-26, new case number: 38177232

The node ran fine for a few hours but crashed again with the same type of error, as can be seen from the ELOM log:

ELOM log excerpt:


Nonrecoverable 2008/09/24 16:52:35 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/24 16:52:36 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/24 16:52:36 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/24 16:52:37 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/24 16:52:38 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/24 16:52:39 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/24 16:52:40 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/09/24 17:09:29 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/24 17:09:30 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/24 17:09:30 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/24 17:09:31 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/24 17:09:32 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/24 17:09:33 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/24 17:09:34 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/09/24 17:36:43 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/24 17:36:44 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/24 17:36:45 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/24 17:36:45 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/24 17:36:46 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/24 17:36:47 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/24 17:36:48 Fanbd1/FM2 device inserted/device present

Critical 2008/09/24 20:32:45 DIMM_B2 has memory ECC error

Nonrecoverable 2008/09/24 20:33:55 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/09/24 20:33:56 CPU 1 IERR (internal error) detected

Nonrecoverable 2008/09/25 05:50:29 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/25 05:50:30 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/25 05:50:31 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/25 05:50:32 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/25 05:50:32 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/25 05:50:33 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/25 05:50:34 Fanbd1/FM2 device inserted/device present

Information 2008/09/25 05:50:40 CPU 0 IERR (internal error) return to normal

Information 2008/09/25 05:50:40 CPU 1 IERR (internal error) return to normal

Critical 2008/09/25 06:04:29 DIMM_B3 has memory ECC error

Critical 2008/09/25 06:04:29 DIMM_B2 has memory ECC error

Critical 2008/09/25 06:04:29 DIMM_B3 has memory ECC error

Nonrecoverable 2008/09/25 06:04:33 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/25 06:04:34 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/25 06:04:35 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/25 06:04:36 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/25 06:04:37 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/25 06:04:37 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/25 06:04:38 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/09/25 06:07:53 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/09/25 06:07:53 CPU 1 IERR (internal error) detected

I notified our contact for this particular problem case (Oliver Mundt) in the evening of 2008-09-26 by email.

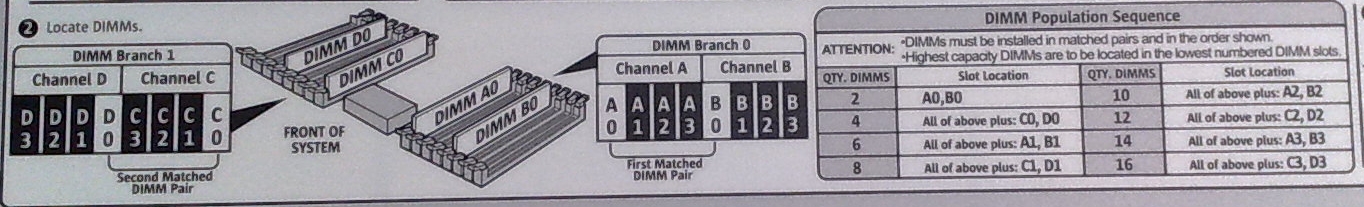

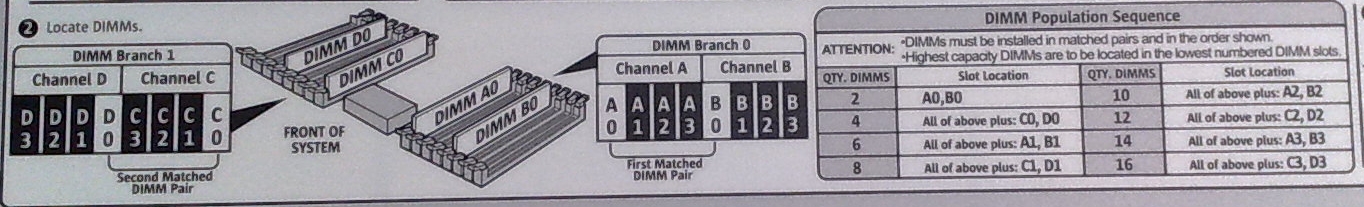

DIMMs need to be replaced in pairs, so I should have put the second DIMM into the matching slot for D2. This is mentioned on the inside cover of the machine:

Replaced matching DIMM on 2008-10-01

I replaced the C2 DIMM (the matching DIMM of D2) and restarted the machine, as discussed with Oliver Mundt. We will see whether the errors on the B2/B3 DIMMs reported in the 2008-09-26 entry above were caused by not having replaced the matching DIMM.
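The pairing rule used on this page can be written down as a small lookup. It is inferred only from the cases documented on this page (C2 matching D2 on t3wn08; A2/B2 and A3/B3 on t3wn04), i.e. bank letters pair as A/B and C/D with the same index; check the label inside the machine's cover before relying on it. `matching_dimm` is a hypothetical helper name.

```shell
# Hypothetical helper: print the slot that must be replaced together with
# the given X4150 DIMM slot. Pairing A<->B and C<->D with the same index is
# an assumption inferred from the cases on this page (D2<->C2, A2<->B2, A3<->B3).
matching_dimm() {
  slot=${1#DIMM_}                # accept "D2" or "DIMM_D2"
  bank=${slot%"${slot#?}"}       # first character: bank letter
  index=${slot#?}                # remaining characters: slot index
  case $bank in
    A) echo "B$index" ;;
    B) echo "A$index" ;;
    C) echo "D$index" ;;
    D) echo "C$index" ;;
    *) echo "unknown slot: $1" >&2; return 1 ;;
  esac
}
```

For example, `matching_dimm DIMM_D2` prints `C2`.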

The machine started ok (around 12:30h) and for now seems to be working fine.

2008-10-15: The machine has been running stably, but we have not yet done a regular burn-in test.

CPU failure on t3wn08, new case number 38197361 on 2008-10-22

- Host Serial number: 0822QBR008

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 1 (system down).

- Case number: 38197361

- SUN technician: Oliver Mundt

ELOM log excerpt:


Nonrecoverable 2008/09/26 22:49:48 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/09/26 22:49:49 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/09/26 22:49:49 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/09/26 22:49:50 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/09/26 22:49:51 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/09/26 22:49:52 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/09/26 22:49:53 Fanbd1/FM2 device inserted/device present

Information 2008/09/26 22:49:59 CPU 0 IERR (internal error) return to normal

Information 2008/09/26 22:49:59 CPU 1 IERR (internal error) return to normal

Nonrecoverable 2008/09/27 02:00:04 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/09/27 02:00:04 CPU 1 IERR (internal error) detected

Warning 2008/10/01 15:12:42 Power Supply 0 input out-of-range.but present assert

Nonrecoverable 2008/10/01 18:23:44 Fanbd0/FM0 device inserted/device present

Nonrecoverable 2008/10/01 18:23:45 Fanbd0/FM1 device inserted/device present

Nonrecoverable 2008/10/01 18:23:45 Fanbd0/FM2 device inserted/device present

Nonrecoverable 2008/10/01 18:23:46 Fanbd0/FM3 device inserted/device present

Nonrecoverable 2008/10/01 18:23:47 Fanbd1/FM0 device inserted/device present

Nonrecoverable 2008/10/01 18:23:48 Fanbd1/FM1 device inserted/device present

Nonrecoverable 2008/10/01 18:23:48 Fanbd1/FM2 device inserted/device present

Nonrecoverable 2008/10/21 05:37:39 CPU 0 IERR (internal error) detected

Nonrecoverable 2008/10/21 05:37:39 CPU 1 IERR (internal error) detected

I informed SUN through their phone support on 2008-10-27.

Got a phone call from Oliver Mundt on 2008-10-28: it does not seem to be a CPU problem but rather a firmware/BIOS-related issue. We will get information on how to update it.

BIOS/FW upgrade on t3wn08, 2008-10-30. DIMM problem

Following instructions in an email by O. Mundt, I updated the BIOS/firmware from ELOM 4.0.4.0.06 to ILOM 2.0.2.6 (Sun Fire X4150 Tools and Drivers DVD Version 2.0). I documented the upgrade process (restricted access).

The node came up fine, but after a few minutes I again saw a memory-related problem. (This is not yet the newest upgrade. I may still try the upgrade to Sun Fire X4150 Tools and Drivers DVD Version 2.1.)

ILOM log excerpt:


show /SP/logs/event/list

49 Thu Oct 30 22:11:47 2008 IPMI Log critical

ID = 15 : 09/16/1988 : 12:34:17 : Memory : BIOS : Uncorrectable ECC DIMM pair 51:11

48 Thu Oct 30 21:41:19 2008 Audit Log minor

root : Close Session : object = /session/type : value = shell : success

47 Thu Oct 30 21:28:50 2008 IPMI Log critical

ID = 14 : pre-init timestamp : System Firmware Progress : BIOS : System boot initiated

46 Thu Oct 30 21:28:06 2008 IPMI Log critical

ID = 13 : pre-init timestamp : System Firmware Progress : BIOS : Option ROM initialization

45 Thu Oct 30 21:27:54 2008 IPMI Log critical

ID = 12 : pre-init timestamp : System Firmware Progress : BIOS : Video initialization

44 Thu Oct 30 21:27:47 2008 Audit Log minor

KCS Command : Set Serial/Modem Mux : channel number = 0 : MUX setting get MUX setting : success

...

Second BIOS/FW upgrade on t3wn08, 2008-10-30. Node dies. Unidentified problem.

I then upgraded the ILOM/BIOS using the Sun Fire X4150 Tools and Drivers DVD Version 2.1.0:

SP Firmware Version 2.0.2.6

SP Firmware Build Number 36843

The machine went online on 2008-10-30 23:30 and stayed up for approximately one hour. Then Ganglia marked it as dead. Status in the morning: even though no service LED was blinking and the system was marked as OK in the ILOM, the OS did not respond to pings and the console was dead.

There was no message in the event log which I could associate with the malfunction (note that the last reboot with the older firmware had resulted in yet another DIMM error as detailed in the previous section).

Again, I fired the machine up, and it stayed up for 20 minutes before failing in the same way with nothing useful in the event log.

A look at the temperatures in °C (the table maps to the rack layout):


| Node | PS0/T_AMB | PS1/T_AMB | MB/T_AMB0 | MB/T_AMB1 | MB/T_AMB2 | MB/T_AMB3 | T/AMB |
|---|---|---|---|---|---|---|---|
| rmwn08 | 40.500 | 41.500 | 54.000 | 52.000 | 52.000 | 45.000 | 23.000 |
| rmwn07 | 42.500 | 45.500 | 53.000 | 51.000 | 52.000 | 45.000 | 23.000 |
| rmwn06 | 43.500 | 43.000 | 54.000 | 52.000 | 53.000 | 45.000 | 23.000 |
| rmwn05 | 45.000 | 44.000 | 56.000 | 55.000 | 53.000 | 46.000 | 24.000 |
| rmwn04 | | | | | | | |
| rmwn03 | 44.500 | 45.000 | 56.000 | 53.000 | 53.000 | 46.000 | 24.000 |
| rmwn02 | 45.500 | 43.000 | 55.000 | 53.000 | 53.000 | 45.000 | 24.000 |
| rmwn01 | 45.000 | 43.000 | 53.000 | 51.000 | 50.000 | 44.000 | 24.000 |
| rmnfs01 | 42.000 | 42.000 | 42.000 | 40.000 | 39.000 | 39.000 | 23.000 |
| rmdcachedb01 | 40.500 | 40.000 | 40.000 | 39.000 | 37.000 | 36.000 | 22.000 |
| rmse01 | 43.500 | 42.000 | 38.000 | 36.000 | 35.000 | 32.000 | 20.000 |
| rmui01 | 42.500 | 42.500 | 38.000 | 36.000 | 35.000 | 31.000 | 19.000 |
| rmce01 | 41.000 | 41.000 | 39.000 | 37.000 | 36.000 | 31.000 | 19.000 |

rmwn04, which still has the old ELOM, shows values like those I was used to before the update:

- CPU 0 Temp = 27 degrees

- CPU 1 Temp = 28

- Ambient Temp0 = 24

Typical readout for the two Thumpers at the bottom of the rack:

Following a phone call from O. Mundt on 2008-11-06, I restarted the system to try again to catch error events in the ILOM log. One thing I noticed:

- The time reported by the ILOM is correct while the system is down, but it jumps to a wrong value (about +6 h) once the machine is up. The SL4 Linux on this machine is configured exactly like on all the other nodes, and I do not understand why ntpd behaves differently here.

- I reset the time by running ntpdate manually against our time server.

The machine failed again after about 30 min of running normally. The ILOM event log does not give me much information. The ILOM shows the system as being still in "on" state. But the machine does not react to pings, etc. The SP console also does not show anything.

ILOM log excerpt:


show /SP/logs/event/list

189 Thu Nov 6 19:25:03 2008 Audit Log minor

root : Close Session : session ID = 3930019637 : success

188 Thu Nov 6 19:24:54 2008 Audit Log minor

root : Set Session Privilege Level: privilege level = admin : success

187 Thu Nov 6 19:24:54 2008 Audit Log minor

username = N/A : RAKP Message 3 : session ID = 3930019637 : success

186 Thu Nov 6 19:24:54 2008 Audit Log minor

root : RAKP Message 1 : session ID = 3930019637 : success

185 Thu Nov 6 19:24:54 2008 Audit Log minor

username = N/A : RMCP+ Open Session Request : role = unspecified : success

184 Thu Nov 6 19:09:23 2008 Audit Log minor

root : Close Session : object = /session/type : value = shell : success

183 Thu Nov 6 18:29:52 2008 IPMI Log critical

ID = 40 : pre-init timestamp : System Firmware Progress : BIOS : System boot initiated

182 Thu Nov 6 18:29:09 2008 IPMI Log critical

ID = 3f : 04/25/2032 : 22:08:09 : System Firmware Progress : BIOS : Option ROM initialization

181 Thu Nov 6 18:28:57 2008 IPMI Log critical

ID = 3e : 12/08/2025 : 18:04:57 : System Firmware Progress : BIOS : Video initialization

180 Thu Nov 6 18:28:50 2008 Audit Log minor

KCS Command : Set Serial/Modem Mux : channel number = 0 : MUX setting get MUX setting : success

179 Thu Nov 6 18:28:50 2008 Audit Log minor

KCS Command : Set SEL Time : time value = 0x49133762 : success

178 Thu Nov 6 11:32:01 2008 IPMI Log critical

ID = 3d : pre-init timestamp : System Firmware Progress : BIOS : Secondary CPU Initialization

177 Thu Nov 6 11:32:01 2008 IPMI Log critical

ID = 3c : pre-init timestamp : System Firmware Progress : BIOS : Primary CPU initialization

176 Thu Nov 6 11:32:00 2008 IPMI Log critical

ID = 3b : pre-init timestamp : System Boot Initiated : BIOS : Initiated by hard reset

175 Thu Nov 6 11:32:00 2008 Audit Log minor

KCS Command : Set Watchdog Timer : timer user = 0x2 : timer actions = 0x0 : pre-timeout interval = 0 : expiration flags = 0x0 : initial count down value = 65535 : success

174 Thu Nov 6 11:31:50 2008 Audit Log minor

root : Open Session : object = /session/type : value = shell : success

173 Thu Nov 6 11:31:31 2008 IPMI Log critical

ID = 3a : pre-init timestamp : System ACPI Power State : ACPI : S0/G0: working
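Entry 179 records a Set SEL Time command that the host sent to the SP over the in-band KCS interface. IPMI SEL timestamps count seconds since 1970-01-01 00:00:00, so the hex value can be decoded with GNU `date`, as the status.dat pipeline at the top of this page also does for Nagios timestamps. A minimal sketch; `sel_time_to_date` is a hypothetical name, and printing the result in UTC is an assumption of this sketch:

```shell
# Hypothetical helper: decode the hex "time value" of an ILOM
# "KCS Command : Set SEL Time" audit entry, assuming it is an IPMI
# timestamp (seconds since 1970-01-01 00:00:00), and print it as UTC.
sel_time_to_date() {
  date -u -d "@$(( $1 ))" '+%Y-%m-%d %H:%M:%S'
}
```

`sel_time_to_date 0x49133762` prints `2008-11-06 18:28:50`, which matches the timestamp of entry 179 itself, while entry 178 is stamped 11:32:01. This is consistent with the clock jump of several hours described above happening when the host sets the SEL time, but it does not show which side has the wrong clock.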

Trying to obtain diagnostic information with linux-explorer 2008-11-11

Following instructions from O. Mundt, I downloaded a copy of the Linux Explorer from here.

Running the explorer fails with a segmentation fault in a HAL (hardware abstraction layer) library that requires a nonexistent service. Regrettably, this kills the whole batch run without providing the results of the tests that did succeed.

Since the machine keeps dying after a few minutes of uptime, it takes quite some effort to catch it in a stable phase.

```
bash linux-explorer.sh
lshal version 0.4.2
libhal.c 767 : org.freedesktop.DBus.Error.ServiceDoesNotExist raised
"Service "org.freedesktop.Hal" does not exist"
linux-explorer.sh: line 971: 17516 Segmentation fault $DUMPE2FS $parts >${LOGDIR}/disks/dumpe2fs${name}.out 2>&1
linux-explorer.sh: line 971: 17520 Segmentation fault $DUMPE2FS $parts >${LOGDIR}/disks/dumpe2fs${name}.out 2>&1
linux-explorer.sh: line 971: 17532 Segmentation fault $DUMPE2FS $parts >${LOGDIR}/disks/dumpe2fs${name}.out 2>&1
linux-explorer.sh: line 971: 17536 Segmentation fault $DUMPE2FS $parts >${LOGDIR}/disks/dumpe2fs${name}.out 2>&1
linux-explorer.sh: line 971: 17540 Segmentation fault $DUMPE2FS $parts >${LOGDIR}/disks/dumpe2fs${name}.out 2>&1
```

This error is due to a buggy RPM of the dumpe2fs utility. An update to e2fsprogs-1.35-12.17.el4.x86_64.rpm fixed this issue. The linux-explorer.sh script writes to /opt/LINUXexplo (which regrettably is never announced) and produces a directory structure with an associated tar file. The tar file from the t3wn08 node (with rmwn08 management interface) can be downloaded here:

http://t3mon.psi.ch/exchange/t3wn08-2008.11.12.11.19.14.tar.gz
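Before running the explorer on another node, one can check that e2fsprogs is at least the release that fixed the crash here (1.35-12.17.el4). The sketch below uses a simplified comparison (split on dots and dashes, numeric parts compared as numbers, the rest as strings), not rpm's full version-comparison algorithm. `version_ge` is a hypothetical helper; it avoids `sort -V` because the coreutils shipped with SL4 are probably too old to support it.

```shell
# Hypothetical helper: succeed if version $1 >= version $2 under a simplified
# rpm-like ordering (fields split on "." and "-"; numeric fields compared as
# numbers, other fields as strings). Not a full rpmvercmp implementation.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, /[.-]/); nb = split(b, y, /[.-]/)
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      if (x[i] == y[i]) continue
      if (x[i] ~ /^[0-9]+$/ && y[i] ~ /^[0-9]+$/) exit !((x[i] + 0) > (y[i] + 0))
      exit !(x[i] > y[i])
    }
    exit 0   # all fields equal
  }'
}
```

For example: `version_ge "$(rpm -q --qf '%{VERSION}-%{RELEASE}\n' e2fsprogs | head -n 1)" 1.35-12.17.el4 && echo "e2fsprogs is new enough"`.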

Mailed to O. Mundt about the availability of the explorer results on 2008-11-12.

Mainboard exchange on 2008-11-14

The mainboard of the machine was replaced by a service company. No problems were visible after this.

DIMM error on t3wn04

- Host Serial number: 0822QBR00B

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 38197366

- SUN technician: Oliver Mundt

This node had passed the "Immediate Burn-in Testing option" offered by the ELOM interface without problems in August. I tried to contact Oliver Mundt by email.

The ELOM log shows DIMM single-bit errors. ELOM log excerpt:


Critical 2008/10/06 04:41:59 DIMM_A2 has single-bit error

Critical 2008/10/06 08:17:47 DIMM_A3 has single-bit error

Critical 2008/10/06 11:53:36 DIMM_A3 has single-bit error

Critical 2008/10/06 15:29:24 DIMM_A3 has single-bit error

Critical 2008/10/06 19:05:13 DIMM_A3 has single-bit error

Critical 2008/10/07 05:52:38 DIMM_A3 has single-bit error

Critical 2008/10/07 09:28:27 DIMM_A2 has single-bit error

Critical 2008/10/07 13:04:16 DIMM_A2 has single-bit error

Critical 2008/10/07 16:40:04 DIMM_A3 has single-bit error

Critical 2008/10/07 20:15:53 DIMM_A3 has single-bit error

Critical 2008/10/07 23:51:41 DIMM_A3 has single-bit error

Critical 2008/10/08 03:27:30 DIMM_A3 has single-bit error

Critical 2008/10/08 07:03:18 DIMM_A3 has single-bit error

Critical 2008/10/08 10:39:07 DIMM_A2 has single-bit error

Critical 2008/10/08 14:14:55 DIMM_A2 has single-bit error

Critical 2008/10/08 17:50:44 DIMM_A2 has single-bit error

Critical 2008/10/08 21:26:33 DIMM_A3 has single-bit error

Critical 2008/10/09 01:02:21 DIMM_A2 has single-bit error

Critical 2008/10/09 04:38:10 DIMM_A2 has single-bit error

Critical 2008/10/09 11:49:47 DIMM_A3 has single-bit error

Critical 2008/10/10 02:13:02 DIMM_A2 has single-bit error

Critical 2008/10/10 09:24:39 DIMM_A2 has single-bit error

Critical 2008/10/10 13:00:28 DIMM_A3 has single-bit error

Critical 2008/10/10 16:36:17 DIMM_A2 has single-bit error

Critical 2008/10/10 20:12:05 DIMM_A2 has single-bit error

Critical 2008/10/10 23:47:54 DIMM_A2 has single-bit error

Critical 2008/10/11 03:23:42 DIMM_A3 has single-bit error

Critical 2008/10/11 06:59:31 DIMM_A3 has single-bit error

Critical 2008/10/11 10:35:19 DIMM_A2 has single-bit error

Critical 2008/10/11 14:11:08 DIMM_A3 has single-bit error

Critical 2008/10/11 17:46:56 DIMM_A3 has single-bit error

Critical 2008/10/11 21:22:45 DIMM_A2 has single-bit error

Critical 2008/10/12 00:58:34 DIMM_A3 has single-bit error

Critical 2008/10/12 04:34:22 DIMM_A2 has single-bit error

Critical 2008/10/12 08:10:11 DIMM_A3 has single-bit error

Critical 2008/10/12 11:45:59 DIMM_A3 has single-bit error

Critical 2008/10/12 15:21:48 DIMM_A2 has single-bit error

Critical 2008/10/12 18:57:36 DIMM_A2 has single-bit error

Critical 2008/10/12 22:33:25 DIMM_A3 has single-bit error

Critical 2008/10/13 02:09:13 DIMM_A2 has single-bit error

Critical 2008/10/13 05:45:02 DIMM_A2 has single-bit error

Critical 2008/10/13 09:20:51 DIMM_A2 has single-bit error

Critical 2008/10/13 12:56:39 DIMM_A2 has single-bit error

Critical 2008/10/13 16:32:28 DIMM_A3 has single-bit error

Critical 2008/10/13 20:08:16 DIMM_A2 has single-bit error

Critical 2008/10/13 23:44:05 DIMM_A3 has single-bit error

Critical 2008/10/14 03:19:53 DIMM_A2 has single-bit error

Critical 2008/10/14 06:55:42 DIMM_A2 has single-bit error

Critical 2008/10/14 10:31:30 DIMM_A2 has single-bit error

Critical 2008/10/14 14:07:19 DIMM_A3 has single-bit error

Critical 2008/10/14 17:43:08 DIMM_A2 has single-bit error

Critical 2008/10/14 21:18:56 DIMM_A2 has single-bit error

Critical 2008/10/15 00:54:45 DIMM_A3 has single-bit error

Critical 2008/10/15 04:30:33 DIMM_A3 has single-bit error

Critical 2008/10/15 08:06:22 DIMM_A2 has single-bit error

Critical 2008/10/15 11:42:10 DIMM_A2 has single-bit error
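To see how the single-bit errors are distributed over the two slots, the excerpt can be tallied per DIMM. A sketch assuming the log is saved as text in the column layout shown (severity, date, time, slot, message); `count_dimm_errors` is a hypothetical name:

```shell
# Hypothetical helper: count ELOM DIMM error entries per slot. Expects lines like
#   Critical 2008/10/06 04:41:59 DIMM_A2 has single-bit error
count_dimm_errors() {
  awk '$4 ~ /^DIMM_/ && /error/ { n[$4]++ } END { for (d in n) print d, n[d] }' | sort
}
```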

As I had no reply by today (Oct 22nd), I am trying again via the phone line.

The CPU temperature on the system is given as 34C on CPU0 and 42C on CPU1. Ambient temperature given as 24C.

Got a phone call from Oliver Mundt on 2008-10-28: we will receive 4 replacement DIMMs.

Replaced two of t3wn04's DIMMs on 2008-11-04

I only received 2 DIMMs instead of 4 in the packet from SUN. Since the DIMMs were of the same type, manufacturer, etc. as the existing ones, I chose to replace A2, A3 (whereas the matching DIMM pairings would have been A2,B2 and A3,B3).

This node still has the ELOM, which shows the following temperatures a few minutes after booting (no load, two measurements taken about 10 min apart):

| Sensor | 1st measurement | 2nd measurement |
|---|---|---|
| CPU 0 Temp | 31.0 | 28 |
| CPU 1 Temp | 30.0 | 29.0 |
| Ambient Temp0 | 26.0 | 25.0 |

After the update to ILOM 2.0.2.6, BIOS 1ADQW052, I get (again, under no load)

The machine came up fine and showed no errors during the first two hours (no load, yet).

2008-11-06: The host is still running fine with no apparent problems. I will ask SUN to close this call.

2008-01-30 Machines lose IPMI connectivity

After some weeks of running, our X4150 machines lose the ability to communicate through IPMI. Calls like the following just time out:

```
ipmitool -I lanplus -H rmwn05 -U root -f ipmi-pw chassis power status
Error: Unable to establish IPMI v2 / RMCP+ session
Unable to get Chassis Power Status
```

When one resets the SP through the ILOM (effectively rebooting the SP), IPMI works again:

reset /SP
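The failure only shows up as an IPMI timeout. A periodic check from a monitoring host can therefore flag an SP that needs `reset /SP`. This is a minimal sketch, assuming `ipmitool` is installed on the checking host and that a working SP answers with ipmitool's usual `Chassis Power is on` / `off` line. The function names and the 60 s timeout are illustrative choices. The password file `ipmi-pw` is taken from the command above.

```python
import subprocess
import sys


def power_status_cmd(host, user="root", password_file="ipmi-pw"):
    """Build the same ipmitool call as above (password read from a file via -f)."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user,
            "-f", password_file, "chassis", "power", "status"]


def sp_answered(output):
    """True if ipmitool printed a power state rather than a session error."""
    return "Chassis Power is" in output and "Unable to" not in output


def check_sp(host, timeout=60):
    """Run the query; a timeout, a missing ipmitool or an error counts as 'SP not answering'."""
    try:
        result = subprocess.run(power_status_cmd(host), capture_output=True,
                                text=True, timeout=timeout)
    except (subprocess.TimeoutExpired, FileNotFoundError):
        return False
    return result.returncode == 0 and sp_answered(result.stdout + result.stderr)


if __name__ == "__main__":
    for node in sys.argv[1:] or ["rmwn05"]:  # default host name from the example above
        state = "ok" if check_sp(node) else "no IPMI answer, consider 'reset /SP' via the ILOM"
        print(node, state)
```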

Using the knowledge gained in another support case, I logged in to a number of our SUN X4150 machines using the sunservice account on the ILOM to access the underlying Linux.

The ILOM is the newest available for the X4150 as of this date:

SP firmware 2.0.2.6

SP firmware build number: 35128

SP firmware date: Mon Jul 28 10:17:35 PDT 2008

SP filesystem version: 0.1.16

Using normal process monitoring commands, I can see on all machines with failing IPMI communication that one particular process, semcleanup, is hogging most of the memory. This leads to other processes being killed because of the memory shortage.

Output of the top command:


Mem: 81964K used, 13156K free, 0K shrd, 0K buff, 27584K cached

Load average: 3.30, 3.21, 3.16 (State: S=sleeping R=running, W=waiting)

PID USER STATUS RSS PPID %CPU %MEM COMMAND

1548 root S 28M 1 0.0 30.6 semcleanup

938 root S 3376 1 0.0 3.5 ntpd

1122 root S 2728 1063 0.0 2.8 plathwsvcd

1000 root S 2728 1 0.0 2.8 plathwsvcd

1063 root S 2728 1000 0.0 2.8 plathwsvcd

1123 root S 2728 1063 0.0 2.8 plathwsvcd

1065 root S 2728 1063 0.0 2.8 plathwsvcd

1124 root S 2728 1063 0.0 2.8 plathwsvcd

1125 root S 2728 1063 0.0 2.8 plathwsvcd

1121 root S 2728 1063 0.0 2.8 plathwsvcd

1096 root S 2728 1063 0.0 2.8 plathwsvcd

1143 root S 2720 1 0.0 2.8 lumain

1219 root S 2720 1143 0.0 2.8 lumain

1220 root S 2720 1219 0.0 2.8 lumain

1251 root S 2720 1219 0.0 2.8 lumain

2167 root S 2596 2059 0.0 2.7 snmpd

2059 root S 2596 2002 0.0 2.7 snmpd

2169 root S 2596 2167 0.0 2.7 snmpd

15751 root S 2072 1 0.0 2.1 webgo

1758 root S 1908 1 0.0 2.0 stlistener

3760 root S 1872 1978 0.1 1.9 sshd
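Ranking the SP's processes by memory share makes the culprit obvious. This is a minimal sketch, assuming the top output is saved as text with one process per line as above. The RSS column mixes plain numbers (presumably kB) with values carrying an `M` suffix, and the hypothetical helper `rss_to_kb` converts both to kB.

```python
def rss_to_kb(field):
    """Convert an RSS field such as '3376' (assumed kB) or '28M' to kB."""
    if field.endswith("M"):
        return int(float(field[:-1]) * 1024)
    return int(field)


def parse_top(text):
    """Return process rows from the top output, sorted by %MEM (largest first)."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Process rows have exactly 8 columns and start with a numeric PID;
        # the header, 'Mem:' and 'Load average:' lines are skipped.
        if len(parts) != 8 or not parts[0].isdigit():
            continue
        pid, _user, _status, rss, _ppid, _cpu, mem, command = parts
        rows.append({"pid": int(pid), "rss_kb": rss_to_kb(rss),
                     "mem_pct": float(mem), "command": command})
    return sorted(rows, key=lambda row: row["mem_pct"], reverse=True)


if __name__ == "__main__":
    sample = """\
PID USER STATUS RSS PPID %CPU %MEM COMMAND
1548 root S 28M 1 0.0 30.6 semcleanup
938 root S 3376 1 0.0 3.5 ntpd
"""
    for row in parse_top(sample)[:3]:
        print(row["command"], row["rss_kb"], row["mem_pct"])
```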

Output of dmesg:

...

HighMem: empty

Free swap: 0kB

30720 pages of RAM

621 free pages

6940 reserved pages

943 slab pages

4824 pages shared

0 pages swap cached

Out of Memory: Killed process 19406 (LAN).

Out of Memory: Killed process 19487 (LAN).

Out of Memory: Killed process 19488 (LAN).

Out of Memory: Killed process 19489 (LAN).

Looking for all killed processes in the system log:


grep Killed /var/log/messages*

Jan 9 05:18:01 localhost kernel: Out of Memory: Killed process 19626 (frutool).

Jan 9 05:18:02 localhost kernel: Out of Memory: Killed process 19492 (LAN).

Jan 9 05:18:02 localhost kernel: Out of Memory: Killed process 19594 (LAN).

Jan 9 05:18:02 localhost kernel: Out of Memory: Killed process 19595 (LAN).

Jan 9 05:18:02 localhost kernel: Out of Memory: Killed process 19596 (LAN).

Jan 9 05:18:41 localhost kernel: Out of Memory: Killed process 20095 (sh).

Jan 9 05:18:43 localhost kernel: Out of Memory: Killed process 20002 (LAN).

Jan 9 05:18:43 localhost kernel: Out of Memory: Killed process 20076 (LAN).

Jan 9 05:18:43 localhost kernel: Out of Memory: Killed process 20077 (LAN).

Jan 9 05:18:43 localhost kernel: Out of Memory: Killed process 20078 (LAN).

Jan 9 05:19:45 localhost kernel: Out of Memory: Killed process 20301 (frutool).

Jan 9 05:19:46 localhost kernel: Out of Memory: Killed process 20167 (LAN).

Jan 9 05:19:46 localhost kernel: Out of Memory: Killed process 20271 (LAN).

Jan 9 05:19:46 localhost kernel: Out of Memory: Killed process 20272 (LAN).

Jan 9 05:19:46 localhost kernel: Out of Memory: Killed process 20273 (LAN).

Jan 9 05:20:06 localhost kernel: Out of Memory: Killed process 20488 (frutool).

Jan 9 05:20:08 localhost kernel: Out of Memory: Killed process 20371 (LAN).

Jan 9 05:20:08 localhost kernel: Out of Memory: Killed process 20471 (LAN).

Jan 9 05:20:08 localhost kernel: Out of Memory: Killed process 20476 (LAN).

Jan 9 05:20:08 localhost kernel: Out of Memory: Killed process 20477 (LAN).

Jan 9 05:20:28 localhost kernel: Out of Memory: Killed process 20691 (frutool).

Jan 9 05:20:29 localhost kernel: Out of Memory: Killed process 20558 (LAN).

Jan 9 05:20:29 localhost kernel: Out of Memory: Killed process 20636 (LAN).

Jan 9 05:20:29 localhost kernel: Out of Memory: Killed process 20637 (LAN).

Jan 9 05:20:29 localhost kernel: Out of Memory: Killed process 20638 (LAN).

Jan 9 05:22:10 localhost kernel: Out of Memory: Killed process 20871 (frutool).

Jan 9 05:22:10 localhost kernel: Out of Memory: Killed process 20743 (LAN).

Jan 9 05:22:10 localhost kernel: Out of Memory: Killed process 20847 (LAN).

Jan 9 05:22:10 localhost kernel: Out of Memory: Killed process 20848 (LAN).

Jan 9 05:22:10 localhost kernel: Out of Memory: Killed process 20849 (LAN).

Jan 9 05:23:18 localhost kernel: Out of Memory: Killed process 21021 (frutool).

Jan 9 05:23:20 localhost kernel: Out of Memory: Killed process 20905 (dbgLevelUpdater).

Jan 9 05:24:33 localhost kernel: Out of Memory: Killed process 21245 (MsgHndlr).

Jan 9 05:24:34 localhost kernel: Out of Memory: Killed process 21108 (LAN).

Jan 9 05:24:34 localhost kernel: Out of Memory: Killed process 21207 (LAN).

Jan 9 05:24:34 localhost kernel: Out of Memory: Killed process 21208 (LAN).

Jan 9 05:24:34 localhost kernel: Out of Memory: Killed process 21209 (LAN).

Jan 9 05:37:46 localhost kernel: Out of Memory: Killed process 21439 (frutool).

Jan 9 05:37:51 localhost kernel: Out of Memory: Killed process 21329 (LAN).

Jan 9 05:37:51 localhost kernel: Out of Memory: Killed process 21430 (LAN).

Jan 9 05:37:51 localhost kernel: Out of Memory: Killed process 21431 (LAN).

Jan 9 05:37:51 localhost kernel: Out of Memory: Killed process 21432 (LAN).

Jan 9 05:38:08 localhost kernel: Out of Memory: Killed process 21519 (LAN).

Jan 9 05:38:08 localhost kernel: Out of Memory: Killed process 21617 (LAN).

Jan 9 05:38:08 localhost kernel: Out of Memory: Killed process 21618 (LAN).

Jan 9 05:38:08 localhost kernel: Out of Memory: Killed process 21621 (LAN).

Jan 9 05:38:27 localhost kernel: Out of Memory: Killed process 21763 (keep_alive.sh).

Jan 9 05:38:29 localhost kernel: Out of Memory: Killed process 21629 (dbgLevelUpdater).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21907 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21925 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21926 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21927 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21928 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21931 (adviserd).

Jan 9 05:38:46 localhost kernel: Out of Memory: Killed process 21932 (adviserd).

Jan 9 05:38:47 localhost kernel: Out of Memory: Killed process 21812 (dbgLevelUpdater).

Jan 9 05:39:08 localhost kernel: Out of Memory: Killed process 21998 (LAN).

Jan 9 05:39:08 localhost kernel: Out of Memory: Killed process 22075 (LAN).

Jan 9 05:39:08 localhost kernel: Out of Memory: Killed process 22076 (LAN).

Jan 9 05:39:08 localhost kernel: Out of Memory: Killed process 22077 (LAN).

Jan 9 05:39:28 localhost kernel: Out of Memory: Killed process 22268 (frutool).

Jan 9 05:39:32 localhost kernel: Out of Memory: Killed process 22165 (LAN).

Jan 9 05:39:32 localhost kernel: Out of Memory: Killed process 22249 (LAN).

Jan 9 05:39:32 localhost kernel: Out of Memory: Killed process 22250 (LAN).

Jan 9 05:39:32 localhost kernel: Out of Memory: Killed process 22251 (LAN).

Jan 9 05:39:53 localhost kernel: Out of Memory: Killed process 22463 (frutool).

Jan 9 05:39:54 localhost kernel: Out of Memory: Killed process 22345 (LAN).

Jan 9 05:39:54 localhost kernel: Out of Memory: Killed process 22447 (LAN).

Jan 9 05:39:54 localhost kernel: Out of Memory: Killed process 22448 (LAN).

Jan 9 05:39:54 localhost kernel: Out of Memory: Killed process 22450 (LAN).

Jan 9 05:40:56 localhost kernel: Out of Memory: Killed process 22671 (frutool).

Jan 9 05:40:57 localhost kernel: Out of Memory: Killed process 22537 (LAN).

Jan 9 05:40:57 localhost kernel: Out of Memory: Killed process 22638 (LAN).

Jan 9 05:40:57 localhost kernel: Out of Memory: Killed process 22639 (LAN).

Jan 9 05:40:57 localhost kernel: Out of Memory: Killed process 22640 (LAN).

Jan 9 05:42:49 localhost kernel: Out of Memory: Killed process 22867 (sh).

Jan 9 05:42:50 localhost kernel: Out of Memory: Killed process 22728 (LAN).

Jan 9 05:42:50 localhost kernel: Out of Memory: Killed process 22828 (LAN).

Jan 9 05:42:50 localhost kernel: Out of Memory: Killed process 22829 (LAN).

Jan 9 05:42:50 localhost kernel: Out of Memory: Killed process 22830 (LAN).

Jan 9 05:43:11 localhost kernel: Out of Memory: Killed process 23052 (frutool).

Jan 9 05:43:15 localhost kernel: Out of Memory: Killed process 22937 (LAN).

Jan 9 05:43:15 localhost kernel: Out of Memory: Killed process 23025 (LAN).

Jan 9 05:43:15 localhost kernel: Out of Memory: Killed process 23044 (LAN).

Jan 9 05:43:15 localhost kernel: Out of Memory: Killed process 23045 (LAN).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23155 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23156 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23161 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23162 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23166 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23167 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23168 (adviserd).

Jan 9 05:43:35 localhost kernel: Out of Memory: Killed process 23133 (dbgLevelUpdater).

Jan 9 05:43:57 localhost kernel: Out of Memory: Killed process 23320 (LAN).

Jan 9 05:43:57 localhost kernel: Out of Memory: Killed process 23393 (LAN).

Jan 9 05:43:57 localhost kernel: Out of Memory: Killed process 23394 (LAN).

Jan 9 05:43:57 localhost kernel: Out of Memory: Killed process 23395 (LAN).

Jan 9 05:44:16 localhost kernel: Out of Memory: Killed process 23464 (LAN).

Jan 9 05:44:16 localhost kernel: Out of Memory: Killed process 23538 (LAN).

Jan 9 05:44:16 localhost kernel: Out of Memory: Killed process 23539 (LAN).

Jan 9 05:44:16 localhost kernel: Out of Memory: Killed process 23540 (LAN).

Jan 9 05:44:38 localhost kernel: Out of Memory: Killed process 23622 (LAN).

Jan 9 05:44:38 localhost kernel: Out of Memory: Killed process 23722 (LAN).

Jan 9 05:44:38 localhost kernel: Out of Memory: Killed process 23723 (LAN).

Jan 9 05:44:38 localhost kernel: Out of Memory: Killed process 23724 (LAN).

Jan 9 05:45:38 localhost kernel: Out of Memory: Killed process 23908 (MsgHndlr).

Jan 9 05:46:18 localhost kernel: Out of Memory: Killed process 23806 (LAN).

Jan 9 05:46:18 localhost kernel: Out of Memory: Killed process 23882 (LAN).

Jan 9 05:46:18 localhost kernel: Out of Memory: Killed process 23883 (LAN).

Jan 9 05:46:18 localhost kernel: Out of Memory: Killed process 23884 (LAN).
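Counting the grep output by process name summarises which processes the OOM killer hit. This is a minimal sketch, assuming the log files are copied off the SP (which most likely has no Python) and read on another machine. `oom_victims` is a hypothetical name. Counting this way also confirms that semcleanup itself never appears among the victims in the log above.

```python
import re
import sys
from collections import Counter

# Kernel OOM lines look like:
# "Jan 9 05:18:02 localhost kernel: Out of Memory: Killed process 19492 (LAN)."
KILL_RE = re.compile(r"Out of Memory: Killed process (\d+) \(([^)]+)\)")


def oom_victims(log_text):
    """Count OOM-killer victims per process name."""
    return Counter(name for _pid, name in KILL_RE.findall(log_text))


if __name__ == "__main__":
    # Pass copies of the SP's /var/log/messages* files as arguments.
    text = ""
    for path in sys.argv[1:]:
        with open(path, errors="replace") as handle:
            text += handle.read()
    for name, count in oom_victims(text).most_common():
        print(f"{count:5d}  {name}")
```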

So a large number of processes get killed. On machines that still respond to IPMI calls, I can see a running IPMIMain process, which is missing on the machines where IPMI calls time out.

Since all kinds of processes are killed, I can well imagine that this leads to other malfunctions in the ILOM.

Additionally, the OK LED is turned off:

- Node does not answer IPMI requests

- But the LED is reported as On by the SP:

show SYS/OK

/SYS/OK

Targets:

Properties:

type = Indicator

value = On

- semcleanup is using 28MB (30.3%) of the memory on the SP

- Free memory on the SP:

[(flash)root@SUNSP001E684A1A31:/var/log]# free

total used free shared buffers

Mem: 95120 83324 11796 0 0

Swap: 0 0 0

Total: 95120 83324 11796

Resetting the SP cured the LED problem as well as the IPMI connectivity.

DIMM error on t3ui01 (case nr. 71177414)

- Host Serial number: 0822QBR010

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 71177414

- SUN technician: -

Noticed a DIMM error, which was announced by the system's SERVICE LED when upgrading the firmware (please see IlomUpgradeProblem#t3ui01 for more information). The faulty DIMM is in position A0 on the board (as announced by the /SYS/MB/MCH/DA0/SERVICE status).

2009-06-09 Replaced the DIMM with a spare DIMM from the original delivery

The faulty 2GB DIMM has SUN FRU PN: 371-3068-01 (series number 1A0 111900-3A FCT2814). I replaced it with a DIMM from the original cluster delivery that had not yet been installed in any of the other machines. No more problems after starting the machine up again.

I reported the problem to SUN support on 2009-06-12 to get a replacement, and was contacted by Ferdinand Vykoukal from SUN.

2009-06-19 Replacement of DIMM pair with DIMMs delivered by SUN

We had received the spare parts in the late afternoon of 06-11, but due to travel and having to wait for a downtime, I was only able to replace the DIMMs today. I replaced the matching DIMMs in positions A0 and B0.

The new DIMMs from SUN have the following serial numbers:

SUN FRU 371-3068-01 Rev: 50, Hynix PC2-5300F-555-11, HYMP125F72CP8D3-Y5 AB-C 0906

SUN FRU 371-3068-01 Rev: 50, Hynix PC2-5300F-555-11, HYMP125F72CP8D3-Y5 AB-C 0906

2009-07-15 One PSU of Thumper t3fs05 defective

Note: I only reported this problem in August (see below), when it appeared again.

- Host Serial number: 0805AMT050

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 71456574 (assigned on 2009-08-10)

- SUN technician: Thomas Henzen

Returned from holidays and saw that t3fs05 shows a yellow status LED for the REAR section. There, one of the PSUs is flagged yellow.

ILOM log excerpt:

8e00 | 05/26/2009 | 15:38:11 | System Firmware Progress | Motherboard initialization | Asserted

8f00 | 05/26/2009 | 15:38:12 | System Firmware Progress | Video initialization | Asserted

9000 | 05/26/2009 | 15:38:22 | System Firmware Progress | USB resource configuration | Asserted

9100 | 05/26/2009 | 15:38:51 | System Firmware Progress | Option ROM initialization | Asserted

9200 | 05/26/2009 | 15:39:49 | System Firmware Progress | System boot initiated | Asserted

9300 | 06/06/2009 | 20:52:00 | Voltage sys.v_+5v | Lower Non-critical going low | Reading 4.34 < Threshold 4.73 Volts

9400 | 06/06/2009 | 20:52:05 | Voltage sys.v_+5v | Lower Non-critical going high | Reading 4.97 > Threshold 4.73 Volts

9500 | 07/04/2009 | 00:24:51 | Power Supply ps1.pwrok | State Deasserted

9600 | 07/12/2009 | 22:05:18 | Voltage sys.v_+5v | Lower Non-critical going low | Reading 1.51 < Threshold 4.73 Volts

9700 | 07/12/2009 | 22:05:23 | Voltage sys.v_+5v | Lower Non-critical going high | Reading 4.97 > Threshold 4.73 Volts

9800 | 07/15/2009 | 13:46:06 | Power Supply ps1.vinok | State Deasserted

9900 | 07/15/2009 | 13:46:27 | Power Supply ps1.vinok | State Asserted

The failure must have happened on 07/04/2009 (Power Supply #0x20 | State Deasserted). The messages 9800/9900 are due to my temporary removal of power from the flagged PSU.

Correction: it seems that the temporary removal did fix the problem. When I returned some time later, the PSU's yellow LED was off and the green one was on.

2009-08-07 PSU LED is yellow again, but no error in event list

PSU1's own internal LED was yellow, but the machine's main LEDs on the front panel were all green. The event log contained 5 new lines (all about the reboot I did today), but nothing referring to a possible problem.

9800 | 07/15/2009 | 13:46:06 | Power Supply ps1.vinok | State Deasserted

9900 | 07/15/2009 | 13:46:27 | Power Supply ps1.vinok | State Asserted

9a00 | 08/07/2009 | 17:32:13 | System Firmware Progress | Motherboard initialization | Asserted

9b00 | 08/07/2009 | 17:32:14 | System Firmware Progress | Video initialization | Asserted

9c00 | 08/07/2009 | 17:32:24 | System Firmware Progress | USB resource configuration | Asserted

9d00 | 08/07/2009 | 17:32:54 | System Firmware Progress | Option ROM initialization | Asserted

9e00 | 08/07/2009 | 17:33:52 | System Firmware Progress | System boot initiated | Asserted

When I disconnected the power plug from PSU1, the front panel's REAR LED correctly showed yellow. I then reconnected the plug; the PSU LED went green for a short moment and then turned yellow again.

9f00 | 08/07/2009 | 18:34:25 | Power Supply ps1.vinok | State Deasserted

a000 | 08/07/2009 | 18:35:02 | Power Supply ps1.pwrok | State Asserted

a100 | 08/07/2009 | 18:35:06 | Power Supply ps1.vinok | State Asserted

a200 | 08/07/2009 | 18:35:07 | Power Supply ps1.pwrok | State Deasserted

I replaced the cable and connected it to a different power source. PSU1 immediately showed a green LED and stayed that way for about two minutes. But then the yellow LED lit up again, and the deasserted status was logged. The PSU does indeed seem to be defective.

a300 | 08/07/2009 | 18:41:17 | Power Supply ps1.vinok | State Deasserted

a400 | 08/07/2009 | 18:41:37 | Power Supply ps1.vinok | State Asserted

a500 | 08/07/2009 | 18:41:38 | Power Supply ps1.pwrok | State Asserted

a600 | 08/07/2009 | 18:43:31 | Power Supply ps1.pwrok | State Deasserted
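The PSU's flapping can be quantified from the event list. This is a minimal sketch, assuming the ILOM event lines are pasted as text in the `id | date | time | sensor | detail` layout shown above. The function names are hypothetical. In the excerpts above, `ps1.pwrok` stays asserted for 5 s after the plug was reconnected (a000 to a200) and for 113 s after the cable swap (a500 to a600). The 113 s matches the roughly two minutes observed.

```python
from datetime import datetime


def parse_sel(text):
    """Split ILOM event-list lines 'id | date | time | sensor | detail...' into tuples."""
    events = []
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) < 5:
            continue  # not an event line
        rec_id, date, clock, sensor = fields[:4]
        try:
            stamp = datetime.strptime(f"{date} {clock}", "%m/%d/%Y %H:%M:%S")
        except ValueError:
            continue  # skip lines whose date/time columns do not parse
        events.append((rec_id, stamp, sensor, " | ".join(fields[4:])))
    return events


def asserted_durations(events, sensor="Power Supply ps1.pwrok"):
    """Seconds from each 'State Asserted' to the next 'State Deasserted' of one sensor."""
    durations = []
    asserted_at = None
    for _rec_id, stamp, name, detail in events:
        if name != sensor:
            continue
        if detail == "State Asserted":
            asserted_at = stamp
        elif detail == "State Deasserted" and asserted_at is not None:
            durations.append((stamp - asserted_at).total_seconds())
            asserted_at = None
    return durations


if __name__ == "__main__":
    excerpt = """\
a000 | 08/07/2009 | 18:35:02 | Power Supply ps1.pwrok | State Asserted
a200 | 08/07/2009 | 18:35:07 | Power Supply ps1.pwrok | State Deasserted
a500 | 08/07/2009 | 18:41:38 | Power Supply ps1.pwrok | State Asserted
a600 | 08/07/2009 | 18:43:31 | Power Supply ps1.pwrok | State Deasserted
"""
    print(asserted_durations(parse_sel(excerpt)))
```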

2009-08-10 Called SUN support and reported the issue (Case number: 71456574)

I was asked for the output of prtdiag -v. Strangely enough, the defective PSU does not appear in the output:

root@t3fs05 # prtdiag -v

System Configuration: Sun Microsystems Sun Fire X4500

BIOS Configuration: American Megatrends Inc. 080010 05/24/2007

BMC Configuration: IPMI 2.0 (KCS: Keyboard Controller Style)

==== Processor Sockets ====================================

Version Location Tag

-------------------------------- --------------------------

Dual Core AMD Opteron(tm) Processor 290 H0

Dual Core AMD Opteron(tm) Processor 290 H1

==== Memory Device Sockets ================================

Type Status Set Device Locator Bank Locator

------- ------ --- ------------------- --------------------

DDR in use 0 H0_DIMM0 BANK0

DDR in use 0 H0_DIMM1 BANK1

DDR in use 0 H0_DIMM2 BANK2

DDR in use 0 H0_DIMM3 BANK3

DDR in use 0 H1_DIMM0 BANK4

DDR in use 0 H1_DIMM1 BANK5

DDR in use 0 H1_DIMM2 BANK6

DDR in use 0 H1_DIMM3 BANK7

==== On-Board Devices =====================================

Marvell serial-ATA #1

Marvell serial-ATA #2

Marvell serial-ATA #3

Marvell serial-ATA #4

Marvell serial-ATA #5

Marvell serial-ATA #6

Intel 82546EB #1

Intel 82546EB #2

Intel 82551QM

==== Upgradeable Slots ====================================

ID Status Type Description

--- --------- ---------------- ----------------------------

0 in use PCI-X PCIX0

1 available PCI-X PCIX1

2009-08-12 Received replacement PSU - ok

Replaced the defective PSU while the system was running - worked nicely. PSU and front system LEDs immediately came up green upon reconnection of the power.

- New PSU SN: 1357PHI-0739AE064P

- Returned PSU SN: 1357PHI-0734AE03Q6

2010-09-30 Defective disks on two of the new Thors

Disks on t3fs07 and t3fs09 have failed. The spare disks have been activated (RaidZ2 sets).

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 73622488

- broken disk type: SEAGATE ST31000N (SN: 0440KOR-094455RTRH, PN: 541-3730-01)

Replacement disk: SUN SN: 1308PRB-0951G528EF, PN: 540-7507-01 (Hitachi Ultrastar HUA721010KLA330)

t3fs09:

- Host Serial number: 0949AMR064

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 73622520

- broken disk type: SEAGATE ST31000N (SN 0440KOR-094655Z2FT, PN: 541-3730-01)

Replacement disk: SN: 0440KOR-101857YHS7, PN: 541-3730-01

The steps taken in the repair of the systems are documented in FileserverDiskProblems.

2010-10-07 Defective DIMM on t3wn11 (blade center node)

- Host Serial number: 0949TF10J0 (blade center: 0950BD19C8)

- Sun Blade 6000 / X6270 server

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 73655594

Yellow LED was on. The SP logs show:

710 Wed Sep 29 09:02:01 2010 Fault Fault critical

Fault detected at time = Wed Sep 29 09:02:01 2010. The suspect component:

/SYS/MB/P0/D2 has fault.memory.intel.dimm.test-failed with probability=100. Refer to http://www.sun.com/msg/SPX86-8001-SA for details.

I reinserted the DIMM and started up the system again. Still faulted.

-> show /SYS

...

product_name = SUN BLADE X6270 SERVER MODULE

product_part_number = 4517907-11

product_serial_number = 0949TF10J0

...

-> show /SYS/MB/P0/D2

/SYS/MB/P0/D2

Targets:

PRSNT

SERVICE

Properties:

type = DIMM

ipmi_name = P0/D2

fru_name = 4GB DDR3 SDRAM 666

fru_manufacturer = Samsung

fru_version = 00

fru_part_number = M393B5170EH1-CH9

fru_serial_number = 8512335D

fault_state = Faulted

clear_fault_action = (none)

- original part PN: 371-4288-01, Mfg TN: 0067APL-1018S4006C

- replacement part: PN: 371-4288-01, Mfg TN: 0067APL-1011S4000A

2010-10-19 Defective disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 1 (system unavailable).

- Case number: 73711768

- broken disk type: SEAGATE ST31000N (SN: , PN:)

- Oracle Contact Technician: Roland Krybus

A disk on t3fs07 seems to have failed without being automatically replaced by a spare. ZFS hangs. Details are on this page.

I notified ORACLE at 2010-10-19 10:15h and will receive a mail shortly. I will send a link to my detailed log on the twiki for their technicians. I was then contacted by mail by Mr. Krybus and sent him the information I had. He then telephoned me, confirmed that I had taken the correct steps, and said that he would forward the problem to a specialist group.

Around 11:50h I additionally received confirmation of the delivery of a replacement disk, which should be here by 12 o'clock tomorrow.

Replacement disk arrived in time at noon of 2010-10-20.

- original part PN: 541-3730-01, SN: 0440KOR-094455R2HB

- replacement part: PN: 541-3730-01, SN: 0440KOR-091153C4MZ

- sent back as DHL 28 8003 6876

Replacement and resilvering of the disk went fine. Problem solved.

2010-11-12 Two defective disks on t3fs11

Called Oracle on 2010-11-15, 14:10h

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 73851356

- broken disk type: SEAGATE ST31000N 1TB disks

- hd33 (PN: 541-3730-01, SN: 0440KOR-094555P7HJ)

- hd40 (PN: 541-3730-01, SN: 0440KOR-094555RC85)

- Oracle Contact Technician: H. Diener

Both disks show fault.io.disk.predictive-failure. More details on this link. I got a confirmation from H. Diener that the replacement parts were sent on 2010-11-15.

- replacement part: PN: 541-3730-01, SN: 0440KOR-0947563RD1

- replacement part: PN: 541-3730-01, SN: 0440KOR-101657VBL6

old parts sent back via DHL 28 8003 7694 (to be picked up on 2010-11-19)

2010-11-22 Defective disk on t3fs10

Called Oracle on 2010-11-22, 16:20h

t3fs10:

- Host Serial number: 0949AMR021

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 73888096

- broken disk type: SEAGATE ST31000N 1TB disks

- hd23 (PN: 541-3730-01, SN: 094455QJ4T)

- Oracle Contact Technician: Nicole Meissner

This time I said that I did not need a technician to call back; they could just send the replacement. But I complained that many of the new Thors have now had disk issues.

2010-11-23: Message from Oracle. They have difficulties with the fast delivery of the replacement disk:

"We have received your spare part request. The spare part 541-3730 is expected to be delivered on 26.11.10. The delivery is registered with us under Service Request 73888096 and requisition number 3981978."

2010-11-30 Received replacement

- replacement part: PN: 541-3730-02, SN: 0440KOR-091353K7VC

old parts sent back via DHL 28 8003 9400 (to be picked up on 2010-12-02). Everything ok.

2011-01-13 Defective disk on t3fs10

t3fs10:

- Host Serial number: 0949AMR021

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 3-2749336146

- broken disk type: SEAGATE ST31000N 1TB disks

- hd45 (PN: 541-3730-01, SN: 0440KOR-094455R4WX)

- Oracle Contact Technician:

The disk is flagged as defective by fmadm due to SMART values ("Reallocated sector count", details here).

- replacement part: PN: 541-3730-02, SN: 0440KOR-091953ZFGL

old parts sent back via DHL 28 8044 7695 (to be picked up on 2011-01-25).

2011-01-13 Defective Service processor on t3ui03

t3ui03: (former t3wn05)

- Host Serial number: 0822QBR009

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 3-2749336141

- Oracle Contact Technician: Bruno Ammann

After a power-down, the SP does not come up again (it cannot be reached through the network). The backplane service LED is amber and the location LED is blinking. The host itself is running ok.

5 minutes after placing the call, I was already contacted by Oracle support. Since the SP is onboard, the only thing that can be done is replacing the machine. Another Oracle support engineer from dispatch will call to fix a date.

The mainboard was replaced by Oracle support on 2011-01-14. Machine came up ok.

2011-01-26 Defective disk on t3fs11

Called Oracle on 2011-01-26, 15:25h, but they were busy, so I put the problem into the Oracle web portal.

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 1 (system impaired).

- Case number: 3-2838722441

- broken disk type: SEAGATE ST31000N 1TB disks

- hd14 (PN: 541-3730-01, SN: 0440KOR-094555T450)

- Oracle Contact Technician:

- replacement part: PN: 541-3730-02, SN: 0440KOR-101657X1X9

Ordered DHL to pick the defective disk up by 2011-02-03. Waybill number 28 8044 7511.

2011-02-23 Defective disk on t3fs11

Called Oracle on 2011-02-24, 15:30h on the English line. A support engineer will call back by tomorrow.

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2

- Case number: 3-3048582400

- broken disk type: SEAGATE ST31000N 1TB disks

- c4t3d0 (PN: 541-3730-01, SN: 0440KOR-094555XGFF)

- Oracle Contact Technician:

- replacement part: PN: 9CA158-145 ??? / SN: 9QJ3V7JS ???. We got the disk on 28-Feb-2011.

We did not get the global return tag and DHL waybill forms. We reported this and are now waiting for them so that we can send the broken disk back. On 2011-03-08 DHL again asked us about the return, but we still had not received the new forms. Since we had an Oracle engineer on site, I decided to have him take the disk back personally on 2011-03-08.

2011-02-23 Defective DIMM on t3ui06

- Host Serial number: 0822QBR00C

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2

- Case number: 3-3073224711

- Oracle Contact Technician:

Problem Description: ID = 128 : 02/25/2011 : 17:00:19 : Memory : sensor number = 0x00 : Memory Device Disabled; Channel: A, DIMM: 0

We got the 2 replacement DIMMs on 2011-03-08 after they had been held at customs for some time. We returned the defective DIMM by having the Oracle technician who visited on 2011-03-08 take it with him.

2011-03-07 Defective Service processor on t3ui05

t3ui05: (former t3wn02)

- Host Serial number: 0822QBR00D

- Site: PSI Tier-3

- Contact: Derek Feichtinger (cms-tier3@lists.psi.ch)

- Hardware problem of priority 2 (system impaired).

- Case number: 3-3125296581

- Oracle Contact Technician: Ivan Torretti

Called Oracle on 2011-03-07, 15:10h

After a power-down, the SP (ILOM) does not come up again (it cannot be reached through the network). The backplane service LED is amber and the location LED is blinking. The host itself is running ok.

Ivan Torretti came on 2011-03-08 14h and exchanged the mainboard. Everything looks good again.

2011-03-13 2 broken disks on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: 3-3171818131

- Oracle Contact Technician: ??

- I sent back the 2 disks on 18-03-2011

2011-03-18 1 broken disk on t3fs08

t3fs08:

- Host Serial number: 0949AMR066

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3215687881

- Disk replaced with: Device Model: SEAGATE ST31000NSSUN1.0T 092154298B Serial Number: 9QJ4298B

2011-03-21 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3226173311

- Disk replaced with: Device Model: SEAGATE ST31000NSSUN1.0T 094355QKCV Serial Number: 9QJ5QKCV

2011-04-14 2 broken disks on t3fs08 ??

t3fs08:

- Host Serial number: 0949AMR066

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3401062831

- Conclusion: the disks are still good.

2011-04-18 1 broken disk on t3fs11

Device: /dev/rdsk/c4t0d0, FAILED SMART self-check. BACK UP DATA NOW!

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3.

- Case number: SR 3-3424740481

- Broken disk: SN 0440KOR-094555TN3P , PN: 390-0414-04

- Oracle sent a new disk, which arrived on 19-04-2011.

- DHL Ref. 17 8496 1555

2011-04-21 1 broken disk on t3fs11

c4t4d0 FAULTED 27 0 0 too many errors

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3.

- Case number: SR 3-3449280081

- Broken disk: SN 0440KOR-094555TMZM, PN 390-0414-04

- Oracle sent a new disk SN 0440KOR-091953ZFRY, PN 390-0414-05 on 27th-Apr, processed by PSI Store on 28th

- DHL Shipment Waybill 17 8496 2734

- DHL called Fabio Martinelli (me) on 2nd May to ask about the broken disk; I told them that I was filling in their form that day to send the broken disk back, and I cited the waybill too.
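The `FAULTED` line quoted above is a device row from `zpool status`: the columns are NAME, STATE, READ, WRITE and CKSUM, followed by an optional message. Splitting such rows can help pick out the non-ONLINE devices before opening a case. This is a minimal sketch that assumes plain integer counters, so abbreviated counts such as `1.2K` are not handled. The function names and the ONLINE sample row are hypothetical.

```python
def parse_zpool_device_line(line):
    """Split one device row of 'zpool status': NAME STATE READ WRITE CKSUM [message]."""
    fields = line.split(None, 5)
    if len(fields) < 5:
        raise ValueError(f"not a zpool device row: {line!r}")
    name, state, read, write, cksum = fields[:5]
    return {
        "name": name,
        "state": state,
        # Assumption: plain integer counters; abbreviated values like "1.2K" would raise.
        "read": int(read),
        "write": int(write),
        "cksum": int(cksum),
        "message": fields[5] if len(fields) > 5 else "",
    }


def unhealthy(rows):
    """Return the rows whose state is not ONLINE."""
    return [row for row in rows if row["state"] != "ONLINE"]


if __name__ == "__main__":
    rows = [parse_zpool_device_line(text) for text in (
        "c4t3d0 ONLINE 0 0 0",  # made-up healthy row for illustration
        "c4t4d0 FAULTED 27 0 0 too many errors",
    )]
    for row in unhealthy(rows):
        print(row["name"], row["state"], row["read"], row["message"])
```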

2011-05-30 1 broken disk on t3fs08

t3fs08:

- Host Serial number: 0949AMR066

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3721304961

- Broken disk: Sun PN: 390-0414-04 Sun SN: 0440KOR-094655T2BR

- Got new disk: Sun PN: 390-0414-05 Sun SN: 0440KOR-101657VM1J on 1st June 2011

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 1784 9655 34

- Conclusion: got new disk.

2011-06-06 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3769748201

- Error message: ID = 20e : 06/06/2011 : 03:53:02 : Drive Slot : sensor number = 0x78 : Drive Fault

- Broken disk: Sun SN: 0440KOR-094455MWY5 Sun PN: 390-0414-04

- Got new disk: Sun SN: 0440KOR-0935553R0J Sun PN: 390-0414-05

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 17 8496 4403

- Conclusion: got new disk.
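Several of these entries quote ILOM events in the same layout, for example `ID = 128 ... Memory Device Disabled` on t3ui06 and `ID = 20e ... Drive Fault` on t3fs07. They can therefore be split into fields for a ticket. This is a minimal sketch that assumes the fields are separated by ` : `. It also assumes the event ID and sensor number are hexadecimal, which the `20e` ID suggests. `parse_ilom_event` is a hypothetical name.

```python
from datetime import datetime


def parse_ilom_event(line):
    """Split 'ID = <id> : <mm/dd/yyyy> : <hh:mm:ss> : <type> : sensor number = <n> : <text>'."""
    start = line.find("ID = ")
    if start < 0:
        raise ValueError(f"no ILOM event found in: {line!r}")
    # Any prefix such as "Problem Description:" is dropped before splitting.
    parts = [part.strip() for part in line[start:].split(" : ", 5)]
    if len(parts) != 6:
        raise ValueError(f"unexpected ILOM event line: {line!r}")
    event_id, date, clock, sensor_type, sensor, description = parts
    return {
        # Assumption: both the event ID and the sensor number are hexadecimal.
        "id": int(event_id.split("=", 1)[1].strip(), 16),
        "time": datetime.strptime(f"{date} {clock}", "%m/%d/%Y %H:%M:%S"),
        "type": sensor_type,
        "sensor_number": int(sensor.split("=", 1)[1].strip(), 16),
        "description": description,
    }


if __name__ == "__main__":
    event = parse_ilom_event(
        "ID = 20e : 06/06/2011 : 03:53:02 : Drive Slot : sensor number = 0x78 : Drive Fault")
    print(event["time"], event["type"], hex(event["sensor_number"]), event["description"])
```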

2011-06-07 1 broken disk on t3fs08

t3fs08:

- Host Serial number: 0949AMR066

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3778903571

- Error Message: Device: /dev/rdsk/c1t2d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: Sun SN: 0440KOR-094655XXDD Sun PN: 390-0414-04

- Got new disk: Sun SN: 0440KOR-101857VZ96 Sun PN: 390-0414-05

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 17 8496 4882

- Conclusion: got new disk

2011-07-04 1 broken disk on t3fs10

t3fs10:

- Host Serial number: 0949AMR021

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-3970982651

- Error Message: ZFS degraded.

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094455V7EC

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-1004573A1F

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2355 3250

- Conclusion: got new disk.

2011-07-13 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4041226881

- Error Message: ZFS degraded.

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094455N24F

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-091953VKZ8

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2355 4414

- Conclusion: Got new disk

2011-07-16 1 broken disk on t3fs10

t3fs10:

- Host Serial number: 0949AMR021

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4064073817

- Error Message: ZFS degraded, disk /dev/rdsk/c1t0d0

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094455R6XW

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-0943550JVH

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2355 4672

- Conclusion: got new disk

2011-08-19 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4320210081

- Error Message: WARNING ZPOOL data1 : DEGRADED {Size:40.6T Used:31.5T Avail:9.10T Cap:77%} raidz2:DEGRADED (c3t3d0:REMOVED)

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094555K3S9

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-094355QK9E

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2358 8235

- Conclusion: Got new disk

2011-08-24 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4351957551

- Error Message: Device: /dev/rdsk/c2t1d0, not capable of SMART self-check

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094555RCC2

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-094455RXNP

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2358 7491

- Conclusion: Got new disk.

2011-08-24 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4361040501

- Error Message: Device: /dev/rdsk/c3t7d0, 1 Offline uncorrectable sectors

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094555RCFC

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-093454YZAX

- ORACLE DHL account #: 0180 5 345300-1 or 70006102757230 (unsure which)

- DHL waybill #: (00) 9 4036501 681875321 5

- Conclusion: Got new disk

2011-08-28 1 broken disk on t3fs09

t3fs09:

- Host Serial number: 0949AMR064

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4385711041

- Error Message: Device: /dev/rdsk/c5t7d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094655YTXM

- Got new disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-094355QMCF

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2358 7686

- Conclusion: Got new disk

2011-09-23 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4580938921

- Error Message: Device: /dev/rdsk/c2t3d0, ATA error count increased from 0 to 6

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094455MHQX

- Got new disk: SUN PN: 390-0381-02 SUN SN: 1308PRB-0814G8H5MF

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2359 3360

- Conclusion: got new disk

2011-09-26 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4601367011

- Error Message: Device: /dev/rdsk/c4t2d0, ATA error count increased from 0 to 12

- Broken disk: SUN PN: SUN SN:

- Got new disk: SUN PN: SUN SN:

- ORACLE DHL account #:

- DHL waybill #: 46 3218 4664

- Conclusion: got new disk

2011-09-27 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4608903881

- Error Message: Device: /dev/rdsk/c1t4d0, 1 Offline uncorrectable sectors

- Broken disk: SUN PN: SUN SN:

- Got new disk: SUN PN: SUN SN:

- ORACLE DHL account #:

- DHL waybill #: 46 3218 4664

- Conclusion: got new disk

2011-09-28 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4617150077

- Error Message: Device: /dev/rdsk/c6t3d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: SUN PN: SUN SN:

- Got new disk: SUN PN: SUN SN:

- ORACLE DHL account #:

- DHL waybill #: 46 3218 4664

- Conclusion: got new disk

2011-09-29 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4626598921

- Error Message: Device: /dev/rdsk/c4t4d0, ATA error count increased from 18 to 30

- Broken disk: SUN PN: SUN SN:

- Got new disk: SUN PN: SUN SN:

- ORACLE DHL account #:

- DHL waybill #: 46 3218 4664

- Conclusion: got new disk

2011-10-04 1 broken disk on t3fs07

t3fs07:

- Host Serial number: 0949AMR020

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4662666781

- Error Message: Device: /dev/rdsk/c4t7d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-1004573A1F

- Got new disk: SUN PN: 540-7507-01 SUN SN: 1308GSP-1047AKRVXL

- ORACLE DHL account #: 154 937 203

- DHL waybill #: 17 8496 4532

- Conclusion: got new disk.

2011-10-18 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4772633201

- Error Message: Device: /dev/rdsk/c5t3d0, ATA error count increased from 0 to 12

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094655Y928

- Got new disk: SUN PN: 540-7507-01 SUN SN: 1308GSP-1130APWZDL

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2359 3124

- Conclusion: got new disk

2011-10-18 1 broken disk on t3fs09

t3fs09:

- Host Serial number: 0949AMR064

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4775943891

- Error Message: Device: /dev/rdsk/c3t0d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094555XDNH

- Got new disk: SUN PN: 390-0381-02 SUN SN: 1308PRB-0814G8BHSF

- ORACLE DHL account #:

- DHL waybill #: 28 2359 3135

- Conclusion: got new disk

2011-10-19 1 broken disk on t3fs11

t3fs11:

- Host Serial number: 0947AMR033

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4780597041 (1 broken disk: c5t2d0)

- Error Message: Device: /dev/rdsk/c5t2d0, FAILED SMART self-check. BACK UP DATA NOW!

- Broken disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-091353HE9T

- Got new disk: SUN PN: 390-0381-02 SUN SN: 1308PRB-0821GUSJ4F

- ORACLE DHL account #: 1549 37203

- DHL waybill #: 28 2359 2505

- Conclusion: got new disk

2011-11-07 1 broken disk on t3fs10

t3fs10:

- Host Serial number: 0949AMR021

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4913408721

- Error Message: Device: /dev/rdsk/c1t7d0 [SAT], 15 Offline uncorrectable sectors (changed +10)

- Broken disk: SUN PN: 390-0414-04 SUN SN: 0440KOR-094455PR05

- Got new disk: SUN PN: 540-7507-01 SUN SN: 1308GSP-1130AR7ZLL

- ORACLE DHL account #: 154 937 203

- DHL waybill #: 28 2359 5924

- Conclusion: got new disk

2011-11-16 1 broken disk on t3fs09

t3fs09:

- Host Serial number: 0949AMR064

- Site: PSI Tier-3

- Contact: fabio.martinelli@psi.ch

- Hardware problem of priority 3

- Case number: SR 3-4934108461

- Error Message: Device: /dev/rdsk/c3t0d0 [SAT], Read SMART Self-Test Log Failed

- Broken disk: SUN PN: 390-0414-05 SUN SN: 0440KOR-1005575QMB

- Got new disk: SUN PN: 540-7507-01 SUN SN: 390-0479-02

- ORACLE DHL account #: 154 937 203

- DHL waybill #: 28 2359 5913

- Conclusion: got new disk

2011-11-23 1 broken disk on t3fs08

t3fs08: