Phoenix Cluster

Specifications and standard benchmark values

CPU

HS06 = HEP-SPEC06 benchmark value (see details on the HEP-SPEC06 webpage).

| Nodes | Description | Processors | Cores/node | Total cores | HS06/core | Total HS06 | RAM/core |

|---|---|---|---|---|---|---|---|

| 64 | 2 * Intel Xeon E5-2670, 2.6 GHz | 2 | 32 | 2048 | 10.4 | 21299 | 2 GB |

| 1 | 2 * Intel Xeon E5-2690, 2.9 GHz | 2 | 32 | 32 | 11.2 | 358 | 2 GB |

| 48 | 2 * Intel Xeon E5-2680 v2 @ 2.8 GHz | 2 | 40 | 1920 | 11.1 | 21312 | 3.2 GB |

| 40 | 2 * Intel Xeon E5-2680 v4 @ 2.4 GHz | 2 | 56 | 2240 | 12.01 | 26902 | 2 GB |

| Total | | | | 6240 | | 69871 | |

| Nodes | Description | Processors | Cores/node | Total cores | HS06/core | Total HS06 | RAM/core |

|---|---|---|---|---|---|---|---|

| 25 | Intel Xeon E5-2695 v4 @ 2.10 GHz | | 64 | 1600 | 12.96 | 20736 | 2 GB |

| Total | | | | 1600 | | 20736 | |
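As a quick consistency check, the Total rows of the CPU tables can be recomputed from the per-row values: total cores = nodes × cores/node, and total HS06 = total cores × HS06/core. The sketch below assumes each row's HS06 figure is rounded to an integer before summing, which is what makes the first table total 69871 rather than 69872 (the unrounded sum). The constant and function names are illustrative only.

```python
# Rows transcribed from the CPU tables: (nodes, cores_per_node, hs06_per_core).
FIRST_TABLE = [
    (64, 32, 10.4),   # 2 * Intel Xeon E5-2670
    (1, 32, 11.2),    # 2 * Intel Xeon E5-2690
    (48, 40, 11.1),   # 2 * Intel Xeon E5-2680 v2
    (40, 56, 12.01),  # 2 * Intel Xeon E5-2680 v4
]
SECOND_TABLE = [
    (25, 64, 12.96),  # Intel Xeon E5-2695 v4
]


def row_totals(nodes, cores_per_node, hs06_per_core):
    """Return (total cores, total HS06 rounded to an integer) for one table row."""
    cores = nodes * cores_per_node
    return cores, round(cores * hs06_per_core)


def table_totals(rows):
    """Sum the per-row totals; each row is rounded first, as the tables appear to do."""
    total_cores = total_hs06 = 0
    for row in rows:
        cores, hs06 = row_totals(*row)
        total_cores += cores
        total_hs06 += hs06
    return total_cores, total_hs06


print(table_totals(FIRST_TABLE))   # (6240, 69871)
print(table_totals(SECOND_TABLE))  # (1600, 20736)
```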

- ATLAS: 40% fairshare (max of 2000 jobs running, 4000 in queue).

- CMS: 40% fairshare (max of 2000 jobs running, 4000 in queue).

- LHCb: 20% fairshare (max of 1500 jobs running, 4000 in queue).
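The per-VO limits above can be written down as a small policy table. This is a hypothetical model for illustration, not the site's actual batch-system configuration, which this page does not show: `VoPolicy`, `can_enqueue` and `can_start` are invented names, and real scheduling also depends on free job slots and on fairshare priority, both of which the sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoPolicy:
    share: float      # fairshare target, as a fraction of the cluster
    max_running: int  # cap on simultaneously running jobs
    max_queued: int   # cap on jobs waiting in the queue


# Values taken from the fairshare list; names are hypothetical.
POLICIES = {
    "ATLAS": VoPolicy(share=0.40, max_running=2000, max_queued=4000),
    "CMS": VoPolicy(share=0.40, max_running=2000, max_queued=4000),
    "LHCb": VoPolicy(share=0.20, max_running=1500, max_queued=4000),
}

# The fairshare targets together should cover the whole cluster.
assert abs(sum(p.share for p in POLICIES.values()) - 1.0) < 1e-9


def can_enqueue(vo: str, queued: int) -> bool:
    """True if the VO may add one more job to the queue."""
    return queued < POLICIES[vo].max_queued


def can_start(vo: str, running: int) -> bool:
    """True if the VO is below its running-job cap (free slots and priority ignored)."""
    return running < POLICIES[vo].max_running


print(can_start("LHCb", 1500))   # False: LHCb is already at its 1500-job cap
print(can_start("ATLAS", 1500))  # True
print(can_enqueue("CMS", 3999))  # True
```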

Storage infrastructure

Central storage - dCache

The dCache services are provided by 18 servers connected to the storage systems listed in the table below. Please note that the INSTALLED and PLEDGED capacities are equal.

| Count | System | Disks | Disk size | Protection | Usable space |

|---|---|---|---|---|---|

| 8 | IBM DCS3700 | 480 | 3TB | RAID6 | 1'046.4 TiB |

| 8 | NETAPP E5500 | 480 | 4TB | RAID6 | 1'396.8 TiB |

| 2 | NETAPP E5600 | 120 | 6TB | RAID6 | 429.0 TiB |

| 4 | DDN SFA12K | 240 | 3TB | RAID6 | 528.0 TiB |

| Total | | 1320 | | | 3'400.2 TiB |
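The Total row can be checked by summing the table, and a rough RAID6 estimate shows where the usable figures come from. The sketch assumes RAID6 groups of 10 disks (8 data + 2 parity) and converts decimal-TB disk sizes to TiB; the group width is an assumption, since the page does not state it, and spares and filesystem overhead are ignored. With that layout the estimate is close to the listed values for the IBM DCS3700 and NETAPP E5500 rows but not for the NETAPP E5600 and DDN SFA12K rows, which presumably use a different layout or spare policy.

```python
# Rows from the dCache table: (count, system, disks, disk size in TB, listed usable TiB).
SYSTEMS = [
    (8, "IBM DCS3700", 480, 3, 1046.4),
    (8, "NETAPP E5500", 480, 4, 1396.8),
    (2, "NETAPP E5600", 120, 6, 429.0),
    (4, "DDN SFA12K", 240, 3, 528.0),
]

TIB = 2**40  # bytes per tebibyte


def raid6_estimate_tib(disks, disk_tb, group_width=10):
    """Rough usable capacity in TiB, assuming RAID6 groups of `group_width` disks
    (two parity disks per group). Ignores hot spares and filesystem overhead."""
    data_fraction = (group_width - 2) / group_width
    return disks * disk_tb * 1e12 * data_fraction / TIB


total_disks = sum(row[2] for row in SYSTEMS)
total_listed = round(sum(row[4] for row in SYSTEMS), 1)
print(total_disks, total_listed)  # 1320 3400.2

for _count, name, disks, size_tb, listed in SYSTEMS:
    estimate = raid6_estimate_tib(disks, size_tb)
    print(f"{name}: estimate {estimate:.1f} TiB, listed {listed} TiB")
```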

Scratch - GPFS

The 'scratch' filesystem is mounted by the worker nodes and is provided by a GPFS (IBM Spectrum Scale) cluster of 8 nodes: 4 metadata servers and 4 data servers. The metadata disks are local SSDs directly attached to the metadata servers. Data redundancy is provided by the GPFS failure group mechanism.
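The failure-group idea can be illustrated with a simplified model: every disk belongs to a failure group, each block is replicated into distinct groups, and so losing any single group still leaves at least one copy. The sketch assumes one failure group per metadata server and two replicas; the group names are hypothetical, and the round-robin placement is not GPFS's real allocation algorithm.

```python
def place_replicas(block_ids, failure_groups, replicas=2):
    """Map each block to `replicas` distinct failure groups, rotating the starting
    group so load is spread evenly. Simplified model, not GPFS's real allocator."""
    if replicas > len(failure_groups):
        raise ValueError("need at least as many failure groups as replicas")
    n = len(failure_groups)
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = [failure_groups[(i + k) % n] for k in range(replicas)]
    return placement


def survives_loss(placement, lost_group):
    """True if every block still has a replica outside the lost failure group."""
    return all(any(g != lost_group for g in groups) for groups in placement.values())


# Assumption: one failure group per metadata server (4 servers on this page).
groups = ["mds1", "mds2", "mds3", "mds4"]
placement = place_replicas(range(8), groups, replicas=2)
print(placement[0], placement[3])  # ['mds1', 'mds2'] ['mds4', 'mds1']
print(all(survives_loss(placement, g) for g in groups))  # True
```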

Total metadata space is 1.75 TB. The data disks are provided by a NETAPP E-Series system with 120 × 900 GB SAS 10K RPM drives configured in RAID6.

Total data space is 84.30 TB.

Last update: June 2016

Network connectivity

External network

CSCS has a 100 Gbit/s Internet connection provided by SWITCH. The Phoenix cluster is connected to the Internet through a switch with a total throughput capacity of 80 Gbit/s.

Internal network

All nodes are connected via InfiniBand QDR/FDR to a Voltaire/Mellanox fat-tree network (blocking factor 5) that provides each node with 32 Gbit/s, and 192 Gbit/s of bandwidth between the two farthest nodes. The new nodes (phase H) are connected to an InfiniBand FDR fabric with uplinks to the Voltaire/Mellanox QDR network. Virtual machines (together with CSCS and Internet access) are connected via Ethernet and attached to the InfiniBand switch through two Voltaire E4036 transparent bridges, with a maximum capacity of 20 Gbit/s in active/passive mode.

Cluster Management Nodes

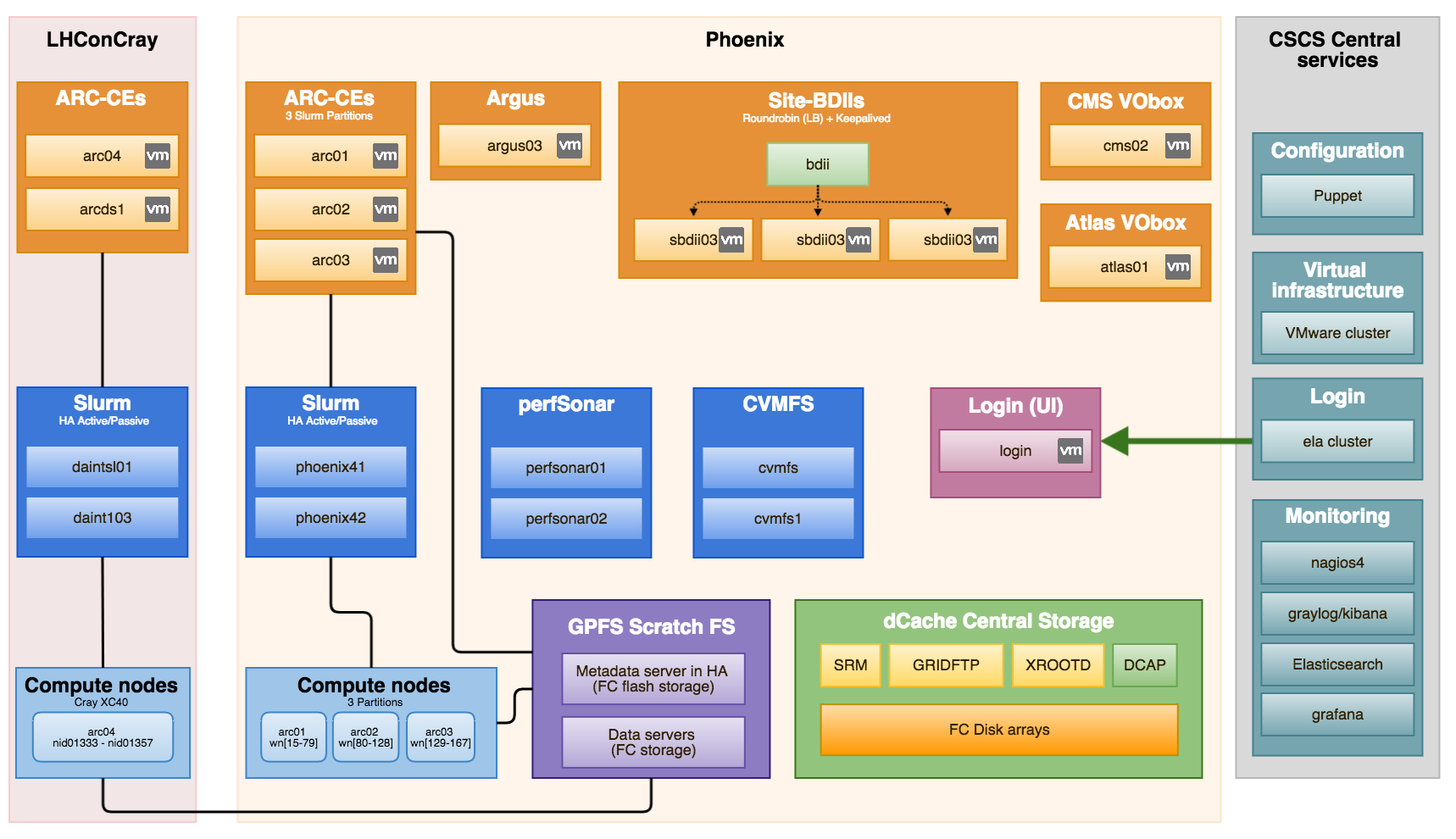

Most of the service nodes are virtualized; for a complete list, see FabricInventory#Virtual_Machines. The remaining nodes are physical machines: essentially the worker nodes (WNs), the dCache nodes (core and pools), the scratch filesystem, NFS, and CernVM-FS. Individual services are described in ServiceInformation.

EMI early adopters status

At the moment, CSCS is an early adopter of the following components:

- CREAM CE

- APEL

- WN

- SLURM


Topic revision: r50 - 2017-12-19 - DinoConciatore
