<!-- keep this as a security measure:

Please note: 1 HS06 is approximately 1 GFlop.

The pledged compute capacity is 10% lower than the provided capacity due to various inefficiencies.



These CPU resources are divided among the VOs by fairshare (based on usage data from the last week), with a few slots reserved for exclusive use.



Specifications and standard benchmark values

A summary of the resources CSCS has pledged to deliver in April 2021, as agreed with the CHIPP Computing Board:

| Resource | Provided | Pledged 2021 | Notes |
|---|---|---|---|
| Computing (HS06) | 220000 | 198400 | ATLAS 74240 (37.42%), CMS 68480 (34.52%), LHCb 55680 (28.07%) |
| Storage (TB) | 6155 | 6155 | ATLAS 2574, CMS 2490, LHCb 1091 |

- The pledges are calculated from projections of the RRB (Resource Review Board) and agreed with the SNF.

- The resources above differ from those listed in CRIC due to a misunderstanding, which was clarified with the WLCG office in November 2020.

- The extension of the planned storage capacity was not available until July 2021 due to problems in the chip supply chain.
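As a quick consistency check, the short Python sketch below recomputes the totals and per-VO shares from the pledge table, and how far the pledged HS06 figure lies below the provided one. The numbers are copied from the table; the helper names are illustrative only.

```python
# Figures copied from the 2021 pledge table (HS06 and TB).
PROVIDED_HS06 = 220_000
PLEDGED_HS06 = {"ATLAS": 74_240, "CMS": 68_480, "LHCb": 55_680}
PLEDGED_TB = {"ATLAS": 2_574, "CMS": 2_490, "LHCb": 1_091}


def shares(pledges: dict[str, int]) -> dict[str, float]:
    """Each VO's share of the summed pledge, in percent."""
    total = sum(pledges.values())
    return {vo: 100.0 * value / total for vo, value in pledges.items()}


def gap_percent(provided: float, pledged: float) -> float:
    """How far (in percent) the pledged figure lies below the provided one."""
    return 100.0 * (provided - pledged) / provided


if __name__ == "__main__":
    total_hs06 = sum(PLEDGED_HS06.values())
    print("Pledged HS06:", total_hs06)              # 198400
    print("Pledged TB:", sum(PLEDGED_TB.values()))  # 6155
    for vo, pct in shares(PLEDGED_HS06).items():
        print(f"{vo}: {pct:.2f}%")
    print(f"Pledged vs provided: {gap_percent(PROVIDED_HS06, total_hs06):.1f}% lower")
```

The computed gap is about 9.8%, in line with the roughly 10% reduction for inefficiencies. The LHCb share comes out at 28.06% rather than 28.07%, a rounding-level difference.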

CPU

HS06 = HEP-SPEC06 benchmark value (see details on the HEP-SPEC06 webpage).

| Nodes | Description | Processors | Cores/node | Total cores | HS06/core | Total HS06 | RAM/core |
|---|---|---|---|---|---|---|---|
| 234.4 | Intel® Xeon E5-2695 v4 @ 2.10GHz | | 68 | 15937 | 12.45 | 198414.08 | 1.9 GB |
| Total | | | | 15937 | | 198414.08 | |
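The derived columns of the CPU table follow from simple products and ratios. The Python sketch below recomputes them from values copied from the table; the fractional node count is simply total cores divided by cores per node. The function names are illustrative, and the TFlop/s line only applies the page's rough rule of thumb of 1 HS06 being about 1 GFlop.

```python
# Values copied from the CPU table.
TOTAL_CORES = 15_937
CORES_PER_NODE = 68
HS06_PER_CORE = 12.45
PLEDGED_HS06 = 198_400  # 2021 pledge from the summary table


def node_equivalents(total_cores: int, cores_per_node: int) -> float:
    """Possibly fractional number of nodes that the core count corresponds to."""
    return total_cores / cores_per_node


def total_hs06(total_cores: int, hs06_per_core: float) -> float:
    """Aggregate benchmark capacity: number of cores times HS06 per core."""
    return total_cores * hs06_per_core


if __name__ == "__main__":
    hs06 = total_hs06(TOTAL_CORES, HS06_PER_CORE)
    print(f"Nodes: {node_equivalents(TOTAL_CORES, CORES_PER_NODE):.1f}")  # 234.4
    print(f"Total HS06: {hs06:.2f} (pledge: {PLEDGED_HS06})")
    # Rough conversion using the rule of thumb 1 HS06 ~ 1 GFlop.
    print(f"Roughly {hs06 / 1000:.0f} TFlop/s")
```

The product comes out at about 198,416 HS06 rather than the table's 198,414.08, because the total core count in the table is itself rounded to a whole number.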

- ATLAS: 40% fairshare (max of 2000 jobs running, 4000 in queue).

- CMS: 40% fairshare (max of 2000 jobs running, 4000 in queue).

- LHCb: 20% fairshare (max of 1500 jobs running, 4000 in queue).
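The page does not say which scheduler or fairshare formula is used. As an illustration only, the Python sketch below assumes a factor of the form F = 2^(-U/S), the classic fair-share formula described in Slurm's multifactor priority documentation, where U is a VO's normalized recent usage and S its normalized share. The shares and job caps come from the list above; the weekly usage values and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VOPolicy:
    share: float      # target fraction of the CPU resources (0..1)
    max_running: int  # cap on concurrently running jobs
    max_queued: int   # cap on jobs waiting in the queue


# Shares and caps taken from the fairshare list.
POLICIES = {
    "ATLAS": VOPolicy(share=0.40, max_running=2000, max_queued=4000),
    "CMS": VOPolicy(share=0.40, max_running=2000, max_queued=4000),
    "LHCb": VOPolicy(share=0.20, max_running=1500, max_queued=4000),
}


def fairshare_factor(usage: float, share: float) -> float:
    """Classic-style factor 2**(-U/S): 1.0 for an idle VO, 0.5 when usage equals share."""
    return 2.0 ** (-usage / share)


def can_start(vo: str, running: int) -> bool:
    """True if the VO is still below its running-jobs cap."""
    return running < POLICIES[vo].max_running


def can_queue(vo: str, queued: int) -> bool:
    """True if the VO may add another job to the queue."""
    return queued < POLICIES[vo].max_queued


if __name__ == "__main__":
    # Hypothetical usage fractions over the last week; real values would come
    # from the batch system's accounting.
    weekly_usage = {"ATLAS": 0.50, "CMS": 0.30, "LHCb": 0.20}
    ranked = sorted(
        POLICIES,
        key=lambda vo: fairshare_factor(weekly_usage[vo], POLICIES[vo].share),
        reverse=True,
    )
    print("Dispatch priority:", ranked)  # ['CMS', 'LHCb', 'ATLAS']
```

With these made-up numbers, CMS (below its 40% share) gets the highest factor and ATLAS (above it) the lowest, which is the intended effect of fairshare. The reserved slots for exclusive use are not modelled.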

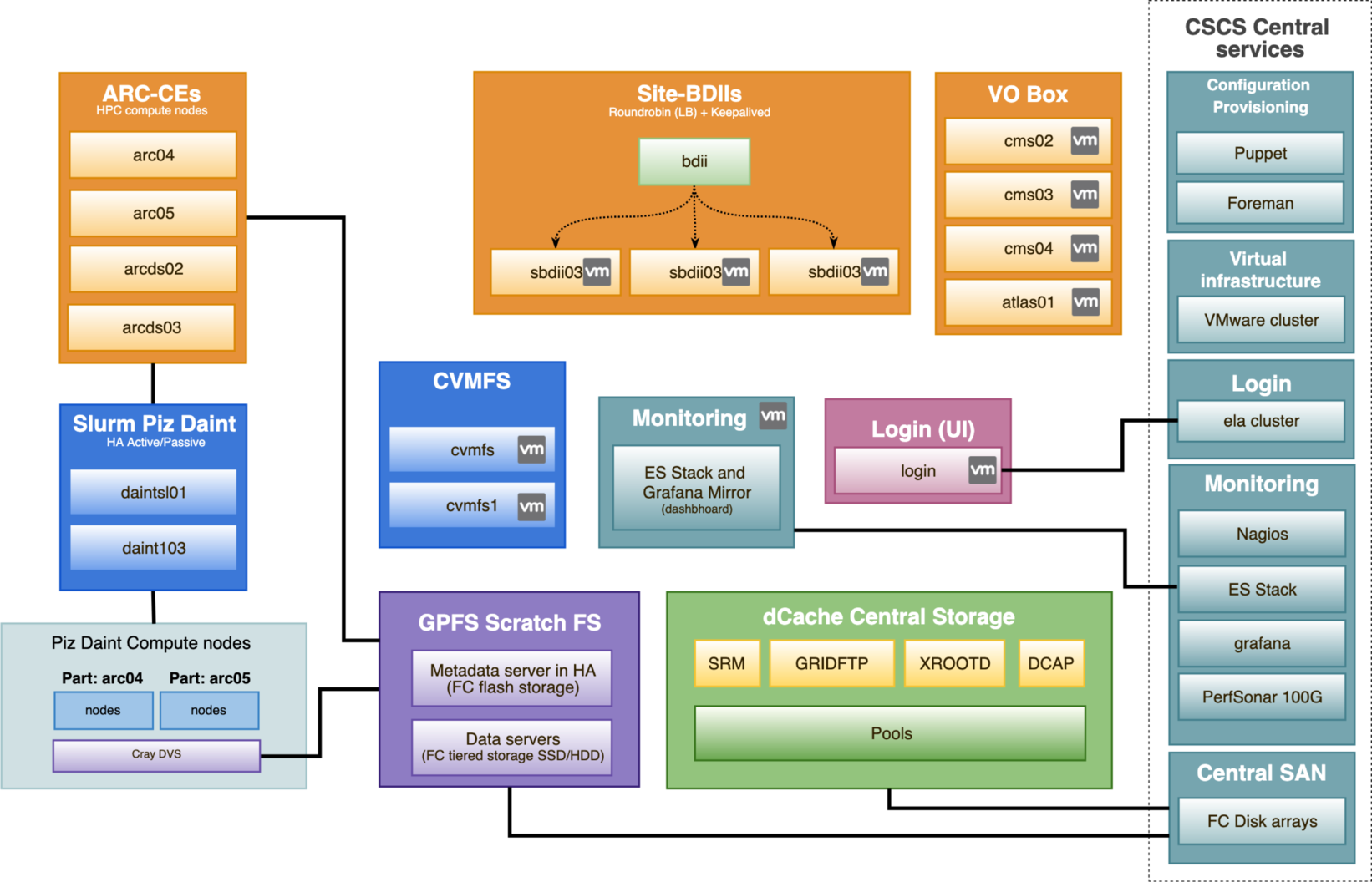

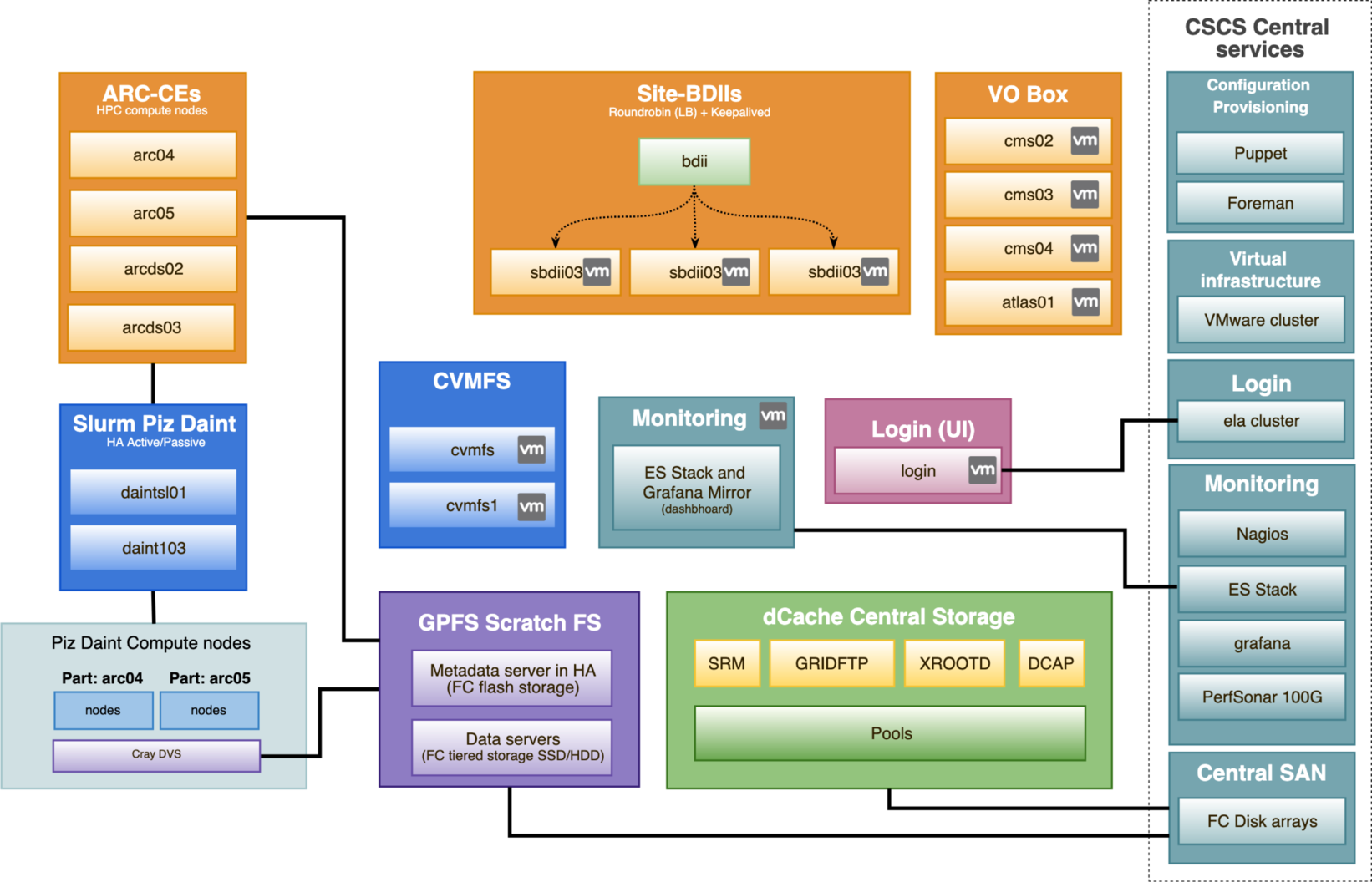

Storage infrastructure

Central Storage - dCache

The dCache services are provided by 12 servers (10 "Storage Elements" and 2 "Head Nodes"). The Storage Elements are connected to the storage systems through the CSCS SAN. The total space available on dCache is 4.6 PB, distributed 40% / 40% / 20% among ATLAS, CMS and LHCb.

Last update: November 2019
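The 40/40/20 split of the dCache space translates into per-VO capacities as in the small Python sketch below. It assumes decimal units and that the split applies to the whole 4.6 PB; the function name is illustrative.

```python
DCACHE_TOTAL_PB = 4.6  # total dCache space (November 2019)
SPLIT = {"ATLAS": 0.40, "CMS": 0.40, "LHCb": 0.20}


def allocate(total: float, split: dict[str, float]) -> dict[str, float]:
    """Divide a total capacity according to fractional shares that sum to 1."""
    if abs(sum(split.values()) - 1.0) > 1e-9:
        raise ValueError("split fractions must sum to 1")
    return {vo: total * frac for vo, frac in split.items()}


if __name__ == "__main__":
    for vo, pb in allocate(DCACHE_TOTAL_PB, SPLIT).items():
        print(f"{vo}: {pb:.2f} PB")  # ATLAS 1.84, CMS 1.84, LHCb 0.92
```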

Scratch - Spectrum Scale

The 'scratch' filesystem is available to compute nodes through dedicated DVS (Cray Data Virtualization Service) nodes and is provided by a Spectrum Scale cluster of 16 nodes (4 metadata + 12 data). Metadata is located on flash storage. The filesystem features policy-based pool tiering:

- Tier1: SSD-based data pool (90 TB) where all files are written

- Tier2: HDD-based data pool (380 TB) to which less frequently used data is moved (this mechanism is transparent to clients)

Last update: November 2019
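The page does not give the actual tiering rules. Spectrum Scale expresses such behaviour through its policy engine (for example threshold-driven `MIGRATE` rules); the Python sketch below only simulates the general idea under assumed watermarks. Every file is written to the SSD pool, and when that pool fills past a high watermark the least recently accessed files are moved to the HDD pool until usage drops below a low watermark. The pool sizes come from the text; the watermark values and class names are hypothetical.

```python
from dataclasses import dataclass, field

SSD_CAPACITY_TB = 90.0   # Tier1 size from the text
HDD_CAPACITY_TB = 380.0  # Tier2 size from the text
HIGH_WATERMARK = 0.80    # hypothetical: start migrating above 80% SSD usage
LOW_WATERMARK = 0.60     # hypothetical: stop migrating below 60% SSD usage


@dataclass
class File:
    name: str
    size_tb: float
    last_access: float  # e.g. a Unix timestamp of the last access


@dataclass
class TieredScratch:
    ssd: list[File] = field(default_factory=list)
    hdd: list[File] = field(default_factory=list)

    @staticmethod
    def used(pool: list[File]) -> float:
        """Total size of the files in a pool, in TB."""
        return sum(f.size_tb for f in pool)

    def write(self, f: File) -> None:
        """New files always land on the SSD tier (SSD capacity checks omitted)."""
        self.ssd.append(f)
        self.rebalance()

    def rebalance(self) -> None:
        """Move the least recently accessed files to HDD once SSD passes the high watermark."""
        if self.used(self.ssd) <= HIGH_WATERMARK * SSD_CAPACITY_TB:
            return
        self.ssd.sort(key=lambda f: f.last_access)  # coldest files first
        while self.ssd and self.used(self.ssd) > LOW_WATERMARK * SSD_CAPACITY_TB:
            coldest = self.ssd[0]
            if self.used(self.hdd) + coldest.size_tb > HDD_CAPACITY_TB:
                break  # HDD tier full: leave the remaining files on SSD
            self.hdd.append(self.ssd.pop(0))


if __name__ == "__main__":
    fs = TieredScratch()
    for i in range(8):  # eight 10 TB files, each accessed later than the previous
        fs.write(File(f"f{i}", 10.0, last_access=float(i)))
    print("SSD:", [f.name for f in fs.ssd], TieredScratch.used(fs.ssd), "TB")
    print("HDD:", [f.name for f in fs.hdd], TieredScratch.used(fs.hdd), "TB")
```

In the example run, writing the eighth file pushes the SSD tier to 80 TB (above the 72 TB high watermark), so the three coldest files are moved to HDD, leaving 50 TB on SSD.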

Network connectivity

External network

CSCS has a redundant 100 Gbit/s connection (provided by SWITCH). LHConCray computing resources, storage and all related services are connected to the Internet through multiple high-speed interfaces.

Cluster Management Nodes

Most of the service nodes are virtualized. For a complete list, see FabricInventory#Virtual_Machines. Individual services are described in ServiceInformation.