Activities Overview of 2013

Q1 & Q2

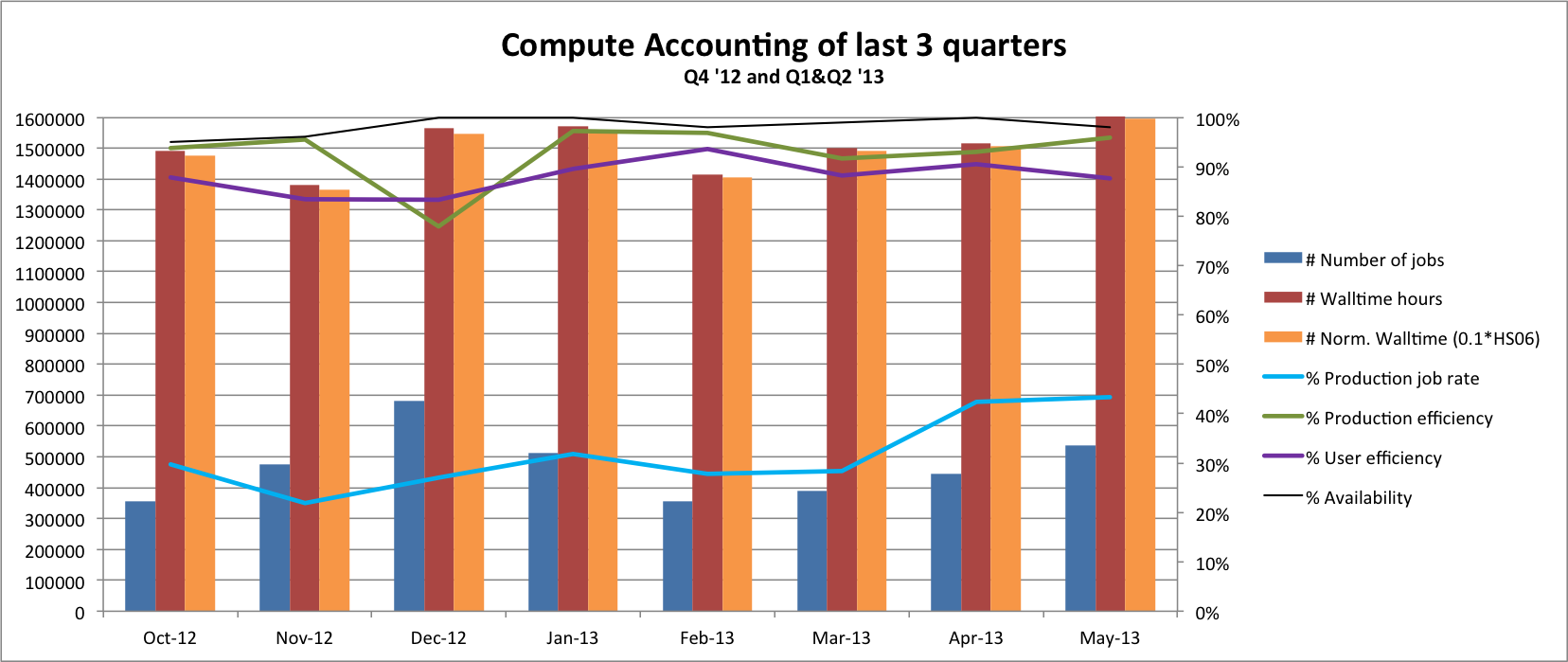

Almost one year after the move to the new datacenter, the main objective of this period has been to keep operations stable while starting to replace the old Phase C systems. During this period, the availability and reliability of Phoenix averaged above 98%, and the efficiency of user and production jobs remained above 95% on average. This is partly due to two distinctive features of Phoenix: the high-speed InfiniBand network, and the shared high-speed "scratch" filesystem on which all jobs run.

- 44% ATLAS (share: 40%)

- 41% CMS (share: 40%)

- 14% LHCb (share: 20%)

For detailed information about the system, see https://wiki.chipp.ch/twiki/bin/view/LCGTier2/PhoenixSetupAndSpecs

Infrastructure changes

- Deployed two new virtualisation servers. These IBM machines have state-of-the-art CPUs and SSDs, which give much better performance and stability than the old machines. These two physical servers alone can host 16 different virtual machines and still leave room to grow.

- Deployed 5 new physical machines for high-throughput services: CREAM-CE (2x), SE head nodes (2x) and CVMFS Squid (1x).

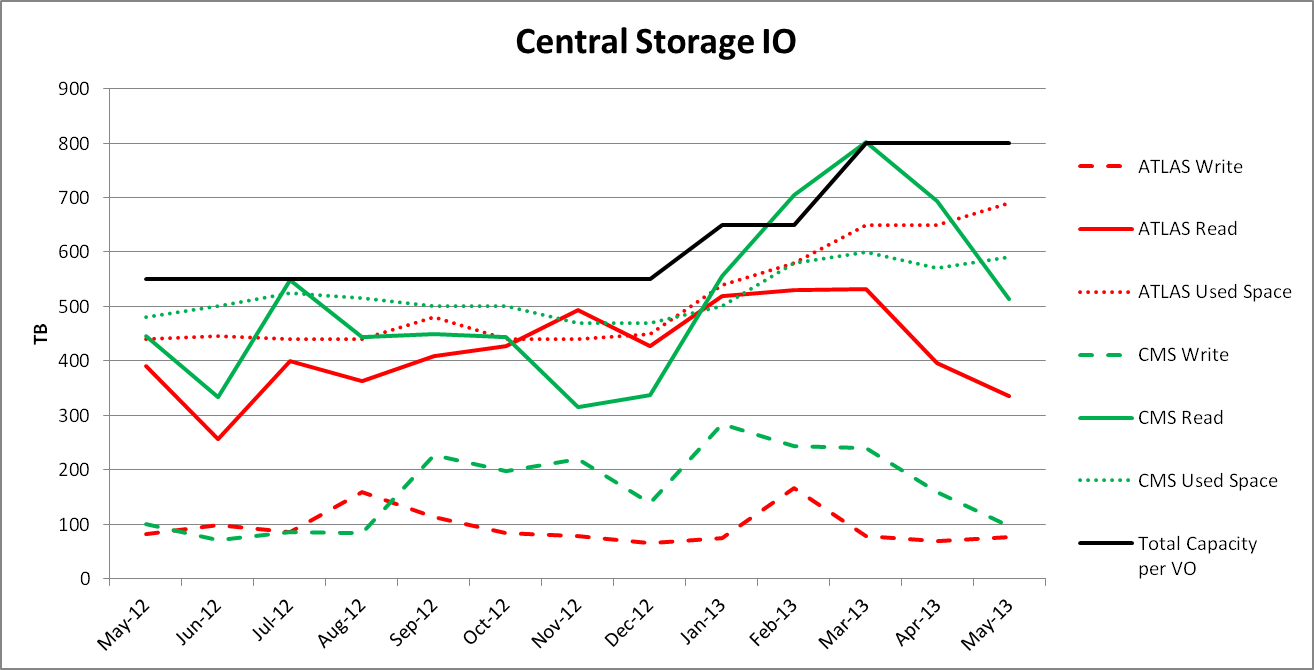

- Increased the capacity of the Storage Element beyond the pledged 1300 TB. This upgrade was intended to replace the temporary solution that CSCS provided in August 2012, when the Phase C Sun Thors failed, and to meet the Phase G pledges in March 2013. The storage capacity is distributed as follows:

  - 4 blocks of IBM storage: 4 x (10xDC3500 + 2x3650M3) for a total capacity of 764 TB.

  - 2 blocks of IBM storage: 2 x (2xDCS3700 + 2x3650M4) for a total capacity of 558 TB.

  - 1 block of IBM storage: (2xDCS3700 + 2x3650M4) for a total capacity of 279 TB. This block is attached to the Storage Element but not pledged, so it is held in reserve in case the ageing scratch filesystem needs to be partially replaced.

- Installed the remaining compute nodes (worker nodes, WNs), bringing the total capacity to 2464 job slots and more than 23 kHS06 (the last 8 systems were installed in Q1 2013). The computing power is distributed as follows:

  - 10 AMD Interlagos systems with 2x AMD Opteron 6272 CPUs and 32 job slots per machine.

  - 66 Intel Sandy Bridge systems with 2x Intel Xeon E5-2670 CPUs and 32 job slots per machine.

  - 1 Intel Sandy Bridge system with 2x Intel Xeon E5-2690 CPUs and 32 job slots per machine.

- Redistributed the WNs across the racks to ensure availability if one of the power supplies fails.

- Replaced the old CMS and ATLAS VO boxes with new, more powerful VMs.
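As a quick cross-check of the capacity figures above, the storage blocks and worker nodes add up to the stated totals. The last line is a derived figure that the text does not give. It is only a rough average, assuming the 23 kHS06 is spread evenly over all job slots:

```latex
\begin{align*}
\text{Pledged SE capacity} &= 764\,\text{TB} + 558\,\text{TB} = 1322\,\text{TB} > 1300\,\text{TB} \\
\text{SE capacity incl. reserve block} &= 1322\,\text{TB} + 279\,\text{TB} = 1601\,\text{TB} \\
\text{Job slots} &= (10 + 66 + 1) \times 32 = 77 \times 32 = 2464 \\
\text{Average per job slot} &> \frac{23\,000\,\text{HS06}}{2464} \approx 9.3\,\text{HS06}
\end{align*}
```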

Software changes

- Migrated most service nodes to Scientific Linux 6. Like the rest of the WLCG community, Phoenix is gradually moving to the latest compatible Scientific Linux release.

- Upgraded the Storage Element (SE) software to the latest dCache Golden Release (2.2), which will be supported until April 2014. As with the 2012 upgrade from dCache 1.9.5 to 1.9.12, this was a major software change, and it had to be tested extensively in the preproduction environment.

- Moved the InfiniBand software stack on all service nodes from vendor-specific proprietary software to the standard Linux kernel modules.

- Moved all WLCG-related services to the EMI common middleware release UMD-2. The services currently provided to the community are:

  - CREAM-CE and ARC-CE job submission services.

  - dCache-based Storage Element (SE) for permanent storage of experiment data.

  - Load-balanced Site-BDII information system.

  - CVMFS (CernVM File System).

  - VO boxes for both ATLAS and CMS.

  - Load-balanced ARGUS (authorization and identification) service.

Other changes

- Deployed a new log management system (logstash) to improve log traceability.

- Gave some of the old Sun Thors to UNI-BERN to support their growing ATLAS Tier-2 infrastructure. Some decommissioned Sun hardware was also given to PSI as spare parts.

Topic revision: r8 - 2013-06-11 - PabloFernandez