Hardware Card for IBM DS3500

Short description about the hardware.

Specifications

What's inside the hardware. The relevant items depend on the type of device.

- Disk controller model. Linux driver needed?

- Network card model

- Rack space required (in U)

- Max CPUs, Max Disks, Max Mem slots.

- CDROM?

- Dual power supply?

- Front / Back picture (maybe available online?)

- Any other?

Power consumption (measured before and during the CPU/Disk tests)

Environmental details

- External working temperature

- Normal CPU/memory internal temperature

- Air flow (cubic meters per hour)

- Noise (dB)

Operations

Non-trivial information about how to handle the hardware.

How to get into the ILOM

Power up/down procedures

Commands to issue in an internal console

Firmware updates

Replacement of internal components

Installation notes

Instructions on how to set up a newly arrived machine, covering things like:

BIOS settings

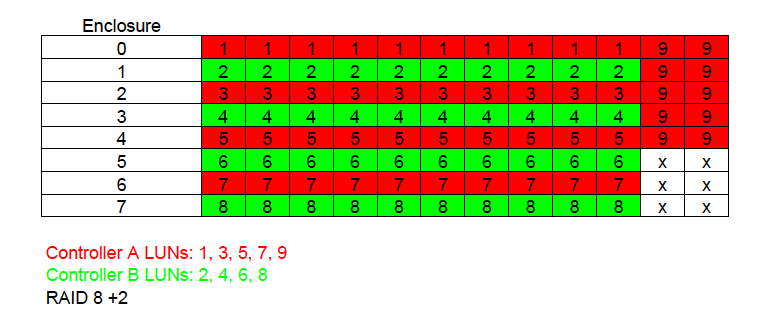

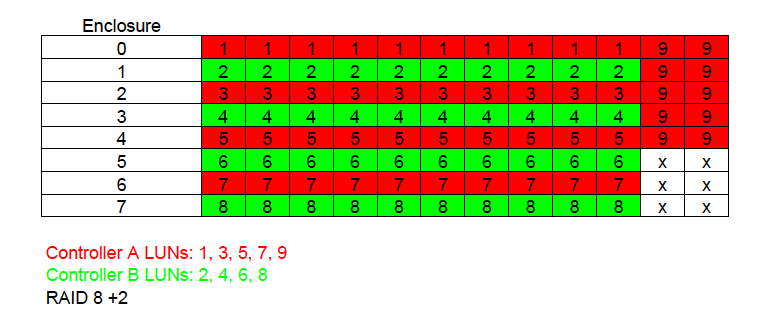

RAID configuration

RAID configuration used for dCache:

Drivers required / kernel compatibility

Check firmware homogeneity with other machines in the cluster

Benchmarks

Information about benchmarks performed on the machine.

Disk benchmarks

bonnie++

- RAID 8 + 2, 128 KB segment size

  - xfs

    - mkfs.xfs -b size=4096 -d su=128k,sw=8

  - ext4

    - mkfs.ext4 -E stride=32,stripe-width=256 -O sparse_super -b 4096 -m 0
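The stripe options in the two mkfs commands follow from the RAID geometry (8 data disks, 128 KB segments, 4096-byte filesystem blocks). A minimal sketch of that arithmetic, assuming the segment size is given in KiB; the function name `stripe_geometry` is made up for illustration:

```python
def stripe_geometry(segment_kib, data_disks, block_bytes=4096):
    """Derive ext4 and XFS stripe options from the RAID geometry."""
    segment_bytes = segment_kib * 1024
    if segment_bytes % block_bytes:
        raise ValueError("segment size must be a multiple of the block size")
    stride = segment_bytes // block_bytes   # filesystem blocks per RAID segment
    stripe_width = stride * data_disks      # filesystem blocks per full data stripe
    return {
        "ext4": f"-E stride={stride},stripe-width={stripe_width}",
        "xfs": f"-d su={segment_kib}k,sw={data_disks}",
    }


geometry = stripe_geometry(segment_kib=128, data_disks=8)
print(geometry["ext4"])  # -E stride=32,stripe-width=256
print(geometry["xfs"])   # -d su=128k,sw=8
```

Only the data disks count towards the stripe width; the two parity disks of the 8 + 2 layout are left out.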

bonnie++ version 1.96, concurrency 1. Each Latency row refers to the file system in the row above it.

| Machine | Size | Sequential Output Per Chr K/sec | %CP | Sequential Output Block K/sec | %CP | Sequential Output Rewrite K/sec | %CP | Sequential Input Per Chr K/sec | %CP | Sequential Input Block K/sec | %CP | Random Seeks /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| xfs | 100000M | 1673 | 98 | 258522 | 32 | 164738 | 23 | 3401 | 99 | 455836 | 29 | 518.8 | 13 |
| Latency | | 4954us | | 314ms | | 231ms | | 7143us | | 177ms | | 74101us | |
| ext4 | 100000M | 927 | 98 | 256154 | 46 | 159501 | 19 | 3983 | 99 | 530509 | 35 | 400.6 | 34 |
| Latency | | 9114us | | 206ms | | 599ms | | 2388us | | 150ms | | 627ms | |
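To make the block throughput figures above easier to compare, the following sketch converts them to MiB/s and prints the ext4/xfs ratio. It assumes bonnie++ reports K/sec in units of 1024 bytes; the numbers are copied from the results above and nothing else is measured here.

```python
# Block throughput in K/sec, copied from the bonnie++ results above.
results = {
    "xfs": {"write": 258522, "rewrite": 164738, "read": 455836},
    "ext4": {"write": 256154, "rewrite": 159501, "read": 530509},
}


def kib_to_mib(kib_per_sec):
    """Convert K/sec (assumed to be KiB/s) to MiB/s."""
    return kib_per_sec / 1024


for op in ("write", "rewrite", "read"):
    xfs = kib_to_mib(results["xfs"][op])
    ext4 = kib_to_mib(results["ext4"][op])
    print(f"{op:8s} xfs {xfs:6.1f} MiB/s  ext4 {ext4:6.1f} MiB/s  ext4/xfs {ext4 / xfs:.2f}")
```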

bonnie++ version 1.96, concurrency 1. Each Latency row refers to the file system in the row above it.

| Machine | files | Sequential Create /sec | %CP | Sequential Read /sec | %CP | Sequential Delete /sec | %CP | Random Create /sec | %CP | Random Read /sec | %CP | Random Delete /sec | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| xfs | 16 | 2444 | 9 | +++++ | +++ | 3114 | 9 | 3093 | 11 | +++++ | +++ | 3057 | 9 |
| Latency | | 18923us | | 127us | | 15160us | | 10076us | | 9us | | 11312us | |
| ext4 | 16 | 1407 | 2 | +++++ | +++ | 1400 | 2 | 1572 | 2 | +++++ | +++ | 1393 | 2 |
| Latency | | 1117ms | | 439us | | 18831us | | 18800us | | 6us | | 19506us | |

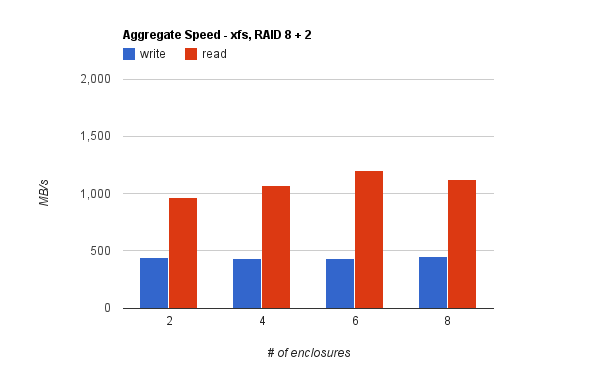

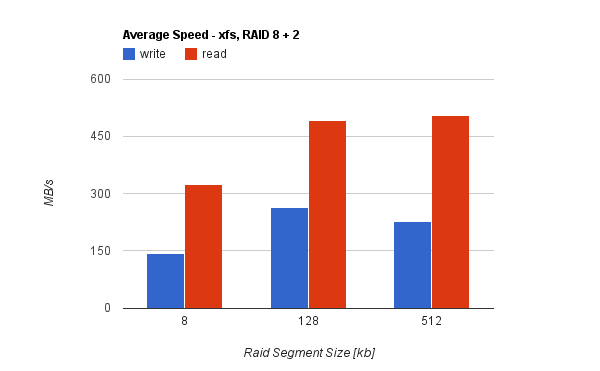

dd

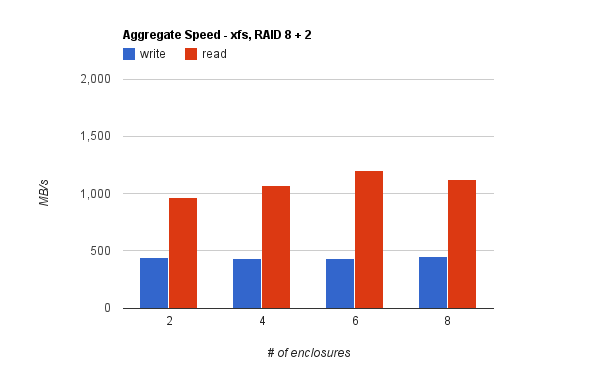

- Number of enclosures vs. speed (xfs):

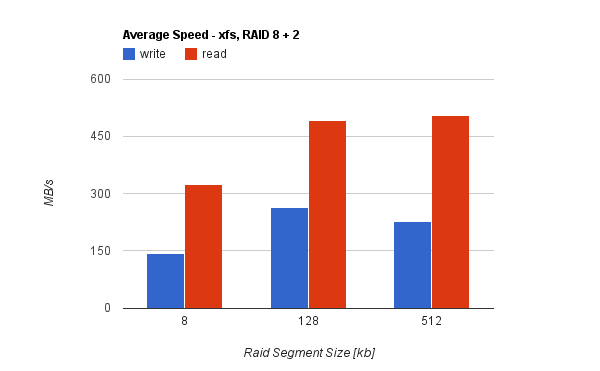

- RAID Segment Size Comparison - Average Single RAID speed:

- RAID Segment Size Comparison - Aggregate RAID speed:

GPFS

To test GPFS performance we used three benchmarks. The first writes and reads big files using dd. The second compiles gcc to check stability and performance with small files. The last is a single bonnie++ run of 100 GB.
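The first benchmark can be approximated without dd. The sketch below writes one file sequentially, syncs it, reads it back, and reports both rates. It is not the procedure used for the results on this page: the function name `sequential_io`, the file size, and the target directory are placeholders, and on a real run the file should be much larger than RAM, otherwise the read is served from the page cache.

```python
import os
import tempfile
import time


def sequential_io(directory, size_mib=64, block_mib=1):
    """Write then read one file sequentially; return (write, read) in MiB/s."""
    block = b"\0" * (block_mib * 1024 * 1024)
    blocks = size_mib // block_mib
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # include the time needed to reach the disks
        write_s = time.perf_counter() - start

        start = time.perf_counter()
        with open(path, "rb") as f:
            while f.read(len(block)):
                pass
        read_s = time.perf_counter() - start
    finally:
        os.remove(path)
    total_mib = blocks * block_mib
    return total_mib / write_s, total_mib / read_s


# Placeholder target: point this at the GPFS mount point for a real test.
write_rate, read_rate = sequential_io(tempfile.gettempdir(), size_mib=16)
print(f"write {write_rate:.0f} MiB/s, read {read_rate:.0f} MiB/s")
```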

- GCC test:

  - cp gcc-3.4.6.tar.bz2 from local disk to GPFS

  - untar

  - configure

  - make depclean

Configuration 2a:

- 1 Data/Metadata server with 4 LUNs (RAID 4 + 1) for data and an SSD PCI-X card for metadata

- 2 Data servers with 4 LUNs (RAID 4 + 1) each

- Mar 04 09:58 [root@gpfs01:gpfs]# mmlsconfig

- Configuration data for cluster scratch.ib.lcg.cscs.ch:

- ------------------------------------------------------

- clusterName scratch.ib.lcg.cscs.ch

- clusterId 10717238835674925567

- autoload no

- minReleaseLevel 3.3.0.2

- dmapiFileHandleSize 32

- pagepool 2048M

- nsdbufspace 15

- nsdMaxWorkerThreads 96

- maxMBpS 3200

- maxFilesToCache 60000

- worker1Threads 500

- subnets 148.187.70.0 148.187.71.0

- prefetchThreads 550

- minMissedPingTimeout 300

- leaseDuration 240

- verbsRdma enable

- verbsPorts mlx4_0

- failureDetectionTime 360

- adminMode central

-

- File systems in cluster scratch.ib.lcg.cscs.ch:

- -----------------------------------------------

- /dev/scratch

Configuration 3:

- 1 Data/Metadata server with 4 LUNs (RAID 4 + 1) for data and 2 internal SAS drives in RAID 0

- 2 Data servers with 4 LUNs (RAID 4 + 1) each

dd write performance is essentially unchanged from the previous configuration; read performance is assumed to be unchanged as well.

Configuration 4:

- 3 mixed Data/Metadata servers with 4 LUNs (RAID 4 + 1) each

- Mar 04 09:58 [root@gpfs01:gpfs]# mmlsconfig

- Configuration data for cluster scratch.ib.lcg.cscs.ch:

- ------------------------------------------------------

- clusterName scratch.ib.lcg.cscs.ch

- clusterId 10717238835674925567

- autoload no

- minReleaseLevel 3.3.0.2

- dmapiFileHandleSize 32

- pagepool 2048M

- nsdbufspace 15

- nsdMaxWorkerThreads 96

- maxMBpS 3200

- maxFilesToCache 60000

- worker1Threads 500

- subnets 148.187.70.0 148.187.71.0

- prefetchThreads 550

- minMissedPingTimeout 300

- leaseDuration 240

- verbsRdma enable

- verbsPorts mlx4_0

- failureDetectionTime 360

- adminMode central

-

- File systems in cluster scratch.ib.lcg.cscs.ch:

- -----------------------------------------------

- /dev/scratch

Configuration 5:

- 3 mixed Data/Metadata servers with 4 LUNs (RAID 4 + 1) each

- Mar 04 14:12 [root@gpfs01:gpfs]# mmlsconfig

- Configuration data for cluster scratch.ib.lcg.cscs.ch:

- ------------------------------------------------------

- clusterName scratch.ib.lcg.cscs.ch

- clusterId 10717238835674925567

- autoload no

- minReleaseLevel 3.3.0.2

- dmapiFileHandleSize 32

- pagepool 2048M

- nsdbufspace 30

- nsdMaxWorkerThreads 36

- maxMBpS 1600

- maxFilesToCache 10000

- worker1Threads 48

- subnets 148.187.70.0 148.187.71.0

- prefetchThreads 72

- verbsRdma enable

- verbsPorts mlx4_0

- nsdThreadsPerDisk 3

- adminMode central

-

- File systems in cluster scratch.ib.lcg.cscs.ch:

- -----------------------------------------------

- /dev/scratch

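- mmcrfs scratch -F sdk.dsc.all -A no -B 1M -D posix -E no -j cluster -k all -K whenpossible -m 2 -M 2 -r 1 -R 1 -n 25 -T /gpfs

  - -A: whether the file system is mounted automatically when GPFS starts

  - -B: block size

  - -j: block allocation map type (where data is written); should probably be changed to scatter

  - -K: whether replication is strictly enforced

  - -m: default number of metadata copies

  - -M: maximum number of metadata copies

  - -r, -R: default and maximum number of data copies

  - -n: estimated number of nodes that will mount the file system

  - -T: mount point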
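The mmlsconfig listings for Configurations 4 and 5 differ in only a handful of tunables. The sketch below makes the differences explicit by parsing the "name value" lines and comparing them. The values are copied from the listings above with the settings common to both runs trimmed for brevity; the helper names `parse_settings` and `diff_settings` are made up for illustration.

```python
def parse_settings(text):
    """Parse 'name value' lines into a dict, skipping anything else."""
    settings = {}
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings[parts[0]] = parts[1].strip()
    return settings


def diff_settings(old, new):
    """Return {name: (old_value, new_value)} for every setting that differs."""
    changes = {}
    for name in sorted(set(old) | set(new)):
        if old.get(name) != new.get(name):
            changes[name] = (old.get(name), new.get(name))
    return changes


config4 = parse_settings("""
pagepool 2048M
nsdbufspace 15
nsdMaxWorkerThreads 96
maxMBpS 3200
maxFilesToCache 60000
worker1Threads 500
prefetchThreads 550
minMissedPingTimeout 300
leaseDuration 240
failureDetectionTime 360
""")

config5 = parse_settings("""
pagepool 2048M
nsdbufspace 30
nsdMaxWorkerThreads 36
maxMBpS 1600
maxFilesToCache 10000
worker1Threads 48
prefetchThreads 72
nsdThreadsPerDisk 3
""")

for name, (old, new) in diff_settings(config4, config5).items():
    print(f"{name}: {old} -> {new}")
```

A value of None means the setting does not appear in that listing.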

Monitoring

Instructions about monitoring the hardware

Power Consumption

RAID Sanity

Other?

Manuals

External links to manuals

Issues

Information about issues found with this hardware, and how to deal with them

Issue1

Issue2