Swiss Grid Operations Meeting on 2014-11-06

- Date and time: First Thursday of the month, at 14:00

- Place: Vidyo (room: Swiss_Grid_Operations_Meeting, extension: 9305236)

- External link: http://vidyoportal.cern.ch/flex.html?roomdirect.html&key=gDf6l4RlIAGN

- Phone gate: From Switzerland: 0227671400 (portal) + 9305236 (extension) + # (pound sign)

- IRC chat: irc:gridchat.cscs.ch:994#lcg

(ask pw via email)

Site status

CSCS

- Operations

- GPFS

- Seems to have been stable since the configuration change to cache more files.

- Space usage and cleanup are under control: job epilogs plus periodic cleaning via cron.

- Jobs

- We occasionally see CMS jobs forking excessively, but this has not been seen for a few days. Likely a user or transient issue.

- ARGUS

- Installed and currently testing

- To be deployed on 12/11/2014

- SE:

- Storage to replace the ageing IBM x3650M3 and DCS1500 is in place and currently under test. New hardware is 2x (2x HP ProLiant DL380p Gen8 + 1x NetApp E5500 controller pair + 2x NetApp storage enclosures, total 540TB)

- Old Sun HW:

- All machines have a dual port QDR Infiniband card

- 3x Sun X4270 servers: 2x PSU, 2x X5570 @ 2.93GHz, 2x 146GB 10k SAS Hitachi drives - MDS[01-02], XEN13

- 8x Sun X4250 Servers: 2x PSU, 2x X5570 @ 2.93GHz, 2x 146GB 10k SAS Hitachi drives, 2x dual port SAS cards - OSS[1-4][1-2]

- 2x Sun X4250 Servers: 2x PSU, 2x E5450 @ 3GHz, 4x 146GB 10k SAS Hitachi drives - PERFSONAR01, PERFSONAR02

- 2x Sun X4140 Servers: 2x @ 2.3GHz, 2x 146GB 10k SAS Hitachi drives - PPNFS, XEN01

- 8x Sun J4400 JBODs: 24x 500GB 7.2K SATA Hitachi drives

- 1x Sun J4200 JBOD: 24x 300GB 15K SAS Hitachi drives (drives are 3.5")

- We need to know ASAP if any Swiss institution wants any of this hardware.

- CSCS A.O.B.

- Worked heavily on replacing faulty IB cards and cables. Found that IB/eth bridges don't support FDR downlinks to FDR switches.

- Worked intensively on replacing current provisioning system (testing Foreman with Puppet & CFEngine for old systems). Foreman seems a good alternative.

- Average A/R for CSCS was above 95% for October. CSCS is happy about this because it has been a difficult period with too many HW interventions and failures.

- Maintenance planning:

- Next Phoenix maintenance on 12/11/2014. ARGUS deployment mostly.

- Extra maintenance on 3/12/2014. CSCS uplink upgrade to 100Gbit/s, with general network unavailability during the whole day (likely 8:00-20:00 CET)
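
The periodic scratch cleaning via cron mentioned under GPFS could look roughly like the sketch below. It is only an illustration, not the script CSCS actually runs: the function name `purge_old_files`, the age threshold and any path passed to it are assumptions made for the example.

```python
import os
import time


def purge_old_files(root, max_age_days, now=None, dry_run=True):
    """Return files under root older than max_age_days; delete them unless dry_run."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    removed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat, so a symlink is judged by its own mtime, not its target's
                if os.lstat(path).st_mtime < cutoff:
                    if not dry_run:
                        os.remove(path)
                    removed.append(path)
            except FileNotFoundError:
                # the file vanished between listing and stat, e.g. a job epilog removed it
                continue
    return removed
```

A cron job would call something like `purge_old_files("/path/to/scratch", 7, dry_run=False)`, where both the path and the 7-day threshold are placeholders. Running with `dry_run=True` first lists what would be deleted without touching anything.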

PSI

- Nagios 4.0.8

- I was a Nagios 4 speaker at Monitoring and reporting in HPC in Bern

- NagVis, check_mk, pnp4nagios, nagstamon

- Deployed cvmfs + its Nagios checks

- Studying Python Pandas, for instance to run a SQL query and plot the result

- In December there will be our yearly PSI, UniZ, ETHZ meeting to assess the T3 status and plan its evolution; I'm busy making slides and preparing the agenda

- Testing gfalFS, mainly to browse and recursively delete dirs from a SE; also to (slowly, but easily) copy dirs from one SE to another SE

- with the latest RPMs

```
gfal-1.16.0-1.el6.x86_64
gfal2-2.8.0-r458.x86_64
gfal2-all-2.8.0-r458.x86_64
gfal2-core-2.8.0-r458.x86_64
gfal2-debuginfo-2.8.0-r458.x86_64
gfal2-devel-2.8.0-r458.x86_64
gfal2-doc-2.8.0-r458.noarch
gfal2-plugin-dcap-2.8.0-r458.x86_64
gfal2-plugin-dropbox-0.0.1-r13.x86_64
gfal2-plugin-dropbox-debuginfo-0.0.1-r13.x86_64
gfal2-plugin-gridftp-2.8.0-r458.x86_64
gfal2-plugin-http-2.8.0-r458.x86_64
gfal2-plugin-lfc-2.8.0-r458.x86_64
gfal2-plugin-mock-2.8.0-r458.x86_64
gfal2-plugin-rfio-2.8.0-r458.x86_64
gfal2-plugin-srm-2.8.0-r458.x86_64
gfal2-plugin-xrootd-0.3.3-r57.x86_64
gfal2-plugin-xrootd-debuginfo-0.3.3-r57.x86_64
gfal2-python-1.6.0-r443.x86_64
gfal2-python-debuginfo-1.6.0-r443.x86_64
gfal2-python-doc-1.6.0-r443.noarch
gfal2-transfer-2.8.0-r458.x86_64
gfal2-util-1.1.0-r113.noarch
gfalFS-1.5.0-r373.x86_64
```

available from .repo I was able to run rsync between two SEs (but for big dirs it crashed):

```
$ gfalFS -s gfalfs-t3se01 srm://t3se01.psi.ch
$ gfalFS -s gfalfs-storage01 srm://storage01.lcg.cscs.ch
$ rsync -rv --temp-dir=/tmp/martinel_rsync gfalfs-t3se01/pnfs/psi.ch/cms/t3-nagios gfalfs-storage01/pnfs/lcg.cscs.ch/cms/trivcat/store/user/martinelli_f/
sending incremental file list
t3-nagios/
t3-nagios/1MB-test-file_pool_t3fs01_cms
t3-nagios/1MB-test-file_pool_t3fs02_cms
...
sent 38184484 bytes  received 700 bytes  286031.34 bytes/sec
total size is 38177712  speedup is 1.00
```

```
$ strace -e open cp -rvn gfalfs-t3se01/pnfs/psi.ch/cms/trivcat/store/user/mangano gfalfs-storage01/pnfs/lcg.cscs.ch/cms/trivcat/store/user/martinelli_f/works
```

- The classical way to copy files between two SEs is FTS3; I should study its Python API

- Studying http://www.dcache.org/manuals/upgrade-2.10/upgrade-2.6-to-2.10.html (but low priority)
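
As an illustration of the Pandas use case mentioned in this report (run a SQL query and plot the result), here is a minimal sketch. The in-memory SQLite table `transfers`, its columns and its rows are made up for the example and are not T3 data. `pandas.read_sql_query` and `DataFrame.plot` are real Pandas calls; plotting additionally needs matplotlib installed.

```python
import sqlite3


def make_demo_db():
    """Build an in-memory SQLite DB with a hypothetical 'transfers' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE transfers (se TEXT, gigabytes REAL)")
    conn.executemany(
        "INSERT INTO transfers VALUES (?, ?)",
        [("se_a", 1.5), ("se_b", 4.0), ("se_a", 2.5)],  # made-up rows
    )
    conn.commit()
    return conn


QUERY = (
    "SELECT se, SUM(gigabytes) AS total_gb "
    "FROM transfers GROUP BY se ORDER BY se"
)


def query_to_frame(conn, sql=QUERY):
    """Run the SQL query and return the result as a pandas DataFrame."""
    import pandas as pd  # imported here so the DB part runs without pandas

    return pd.read_sql_query(sql, conn)


def plot_totals(frame, path="totals.png"):
    """Bar-plot total_gb per SE and save it as a PNG (needs matplotlib)."""
    ax = frame.plot(x="se", y="total_gb", kind="bar", legend=False)
    ax.set_ylabel("GB")
    ax.figure.savefig(path)
```

With Pandas and matplotlib available, `plot_totals(query_to_frame(make_demo_db()))` runs the whole chain. For a real database, only the connection object and the query string would change.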

UNIBE-LHEP

- Operations

- Smooth routine operations with minor issues:

- a-rex crashes (x2) on ce02 this time round (it happens randomly on both clusters)

- one more campaign to revive defective/crashed nodes: recovered ~25% on each cluster (with another ~25% on each still down). Reduced the number of job slots on ce01 nodes (phaseC Sun Blades, 24GB of RAM per node) from 16 to 12

- kvm host crashed once (when launching virt-manager). This host runs ce03, which submits to CSCS Todi. Swift rescue of the server; no running jobs were affected

- deployed a new SLC6 NFS server for local home directories to replace the 7-year-old (very trusty) SLC5 server

- we are only left with one SLC5 server: DPM head node with site-bdii

- ATLAS specific operations

- smooth routine operation, except some days around mid-October:

- ce01: many nodes went into bad states (likely because of memory starvation). Many jobs were still in running state in gridengine, although on dead nodes. Other nodes were left with large numbers of orphaned athena.py processes, while no corresponding jobs were running on the nodes. General manual cleanup of the mess. Pile-up jobs are likely responsible for the mayhem (they used twice the expected vmem; a general ATLAS problem, not site specific)

- ce02: many nodes likely went into limbo and came back with no mounts from fstab, including lustre. Again, manual cleanup. Very likely caused by the same type of jobs

- Some jobs randomly turn into black holes, not understood yet (they pass all the basic sanity checks). Offlined for now

- More last-minute vmem properly. Jobs are killed (as they should be) when the limit is reached. The limit on our clusters is twice the job request, so the job request is likely inadequate. Clarifying with ATLAS

- Upgrade to ARC 4.2.0-1.el6 from 4.1.0-1.el6 on both CEs (there is a bug in 4.1.0 that causes the joblinks directories to remain permanently, eventually filling up the cache)

- HammerCloud gangarobot: http://hammercloud.cern.ch/hc/app/atlas/siteoverview/?site=UNIBE-LHEP&startTime=2014-10-01&endTime=2014-10-31&templateType=isGolden

- NOTE: this link has CHANGED: SAM Nagios ATLAS_CRITICAL: http://wlcg-sam-atlas.cern.ch/templates/ember/#/plot?flavours=SRMv2%2CCREAM-CE%2CARC-CE&group=All%20sites&metrics=org.sam.CONDOR-JobSubmit%20%28%2Fatlas%2FRole_lcgadmin%29%2Corg.atlas.WN-swspace%20%28%2Fatlas%2FRole_pilot%29%2Corg.atlas.WN-swspace%20%28%2Fatlas%2FRole_lcgadmin%29%2Corg.atlas.SRM-VOPut%20%28%2Fatlas%2FRole_production%29%2Corg.atlas.SRM-VOGet%20%28%2Fatlas%2FRole_production%29%2Corg.atlas.SRM-VODel%20%28%2Fatlas%2FRole_production%29&profile=ATLAS_CRITICAL&sites=CSCS-LCG2%2CUNIBE-LHEP%2CUNIGE-DPNC&status=MISSING%2CUNKNOWN%2CCRITICAL%2CWARNING%2COK
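
The two memory figures in this site report can be made concrete with a little arithmetic: the RAM per job slot on the ce01 nodes before and after the slot reduction, and the kill threshold implied by "limit is twice the job request". The sketch below is illustrative only. The function names are ours, and treating "limit reached" as `>=` is an assumption; the batch system may only act once the limit is exceeded.

```python
def ram_per_slot_gb(node_ram_gb, slots):
    """RAM available per job slot when a node's memory is split evenly."""
    return node_ram_gb / slots


def vmem_limit_mb(requested_mb, factor=2):
    """Kill threshold: the clusters allow `factor` times the job's request."""
    return factor * requested_mb


def should_kill(used_vmem_mb, requested_mb, factor=2):
    """True once a job's vmem usage reaches its limit (assumed >=)."""
    return used_vmem_mb >= vmem_limit_mb(requested_mb, factor)


# ce01 nodes (24GB of RAM per node): 16 slots before, 12 slots now
before = ram_per_slot_gb(24, 16)  # 1.5 GB per slot
after = ram_per_slot_gb(24, 12)   # 2.0 GB per slot
```

For a hypothetical job requesting 2000 MB, `vmem_limit_mb(2000)` gives a 4000 MB limit. A pile-up job that needs twice what it requested therefore sits right at the kill threshold, which fits the observation that the job request is likely inadequate.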

UNIBE-ID

- Procurement

- 16 new compute nodes from Dalco AG, physically installed two days ago. First bunch of Dalco nodes. Ingredients (pretty much standard stuff):

- DALCO r2264i4t 2U Chassis with 4 nodes

- Each node supports:

- 2x 8C Intel Xeon E5-2650v2 2.6GHz

- 128GB 1866MHz DDR ECC REG (8*16GB)

- 1x 1TB 7.2k rpm SATA 6.0Gb/s

- 2x Gigabit-Ethernet onboard

- Infiniband ConnectX-3 QDR HCA

- Operations

- smooth and reliable; no issues, no maintenance downtime

- Updated ARC from 4.1.0 to 4.2.0 without any issue
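
For a quick sense of scale, the totals implied by the procurement list can be worked out from the per-node figures (16 nodes, 4 nodes per chassis, 2x 8-core CPUs and 128GB of RAM per node). The sketch assumes all 16 nodes are identical, which the list suggests but does not state explicitly. It counts physical cores only.

```python
NODES = 16
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 8
RAM_PER_NODE_GB = 128

chassis = NODES // NODES_PER_CHASSIS                  # 2U chassis needed
cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET  # physical cores per node
total_cores = NODES * cores_per_node
total_ram_gb = NODES * RAM_PER_NODE_GB
ram_per_core_gb = RAM_PER_NODE_GB / cores_per_node

print(chassis, total_cores, total_ram_gb, ram_per_core_gb)
```

Under these assumptions the delivery amounts to 4 chassis, 256 physical cores and 2048GB of RAM, or 8GB per core.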

UNIGE

- Ordered and received two disk servers for the AMS space

- IBM 3630 M4, 42 TB net per machine

- Looking for a practical way to copy AMS data

- xrdcp with grid credentials

- or mount the space on a machine at CERN

- FLARE funding request (two years starting Spring 2015)

- a lot of hardware replacement

- Two transient performance issues

- the VM running the ARC front-end

- the /cvmfs over NFS

- Failing transfers due to routing problems

- routing from the LHCOne in the US to Geneva went via CERN, not via SWITCH, fixed now

- Tests from GridKa were failing for a while

- voms settings for the ops vo, fixed now

- ATLAS operations were not affected

NGI_CH

- Please update NGI_CH ARGUS ticket with progress: https://xgus.ggus.eu/ngi_ch/?mode=ticket_info&ticket_id=284

Other topics

- Topic1

- Topic2

A.O.B.

Attendants

- CSCS: Miguel Gila, George Brown

- CMS: Fabio Martinelli, Daniel Meister

- ATLAS: Apologies: Gianfranco Sciacca (Site report given above), Szymon Gadomski (maybe a little late), Michael Rolli (Site report given above)

- LHCb: Roland Bernet

- EGI: Apologies: Gianfranco Sciacca

Action items

- Item1

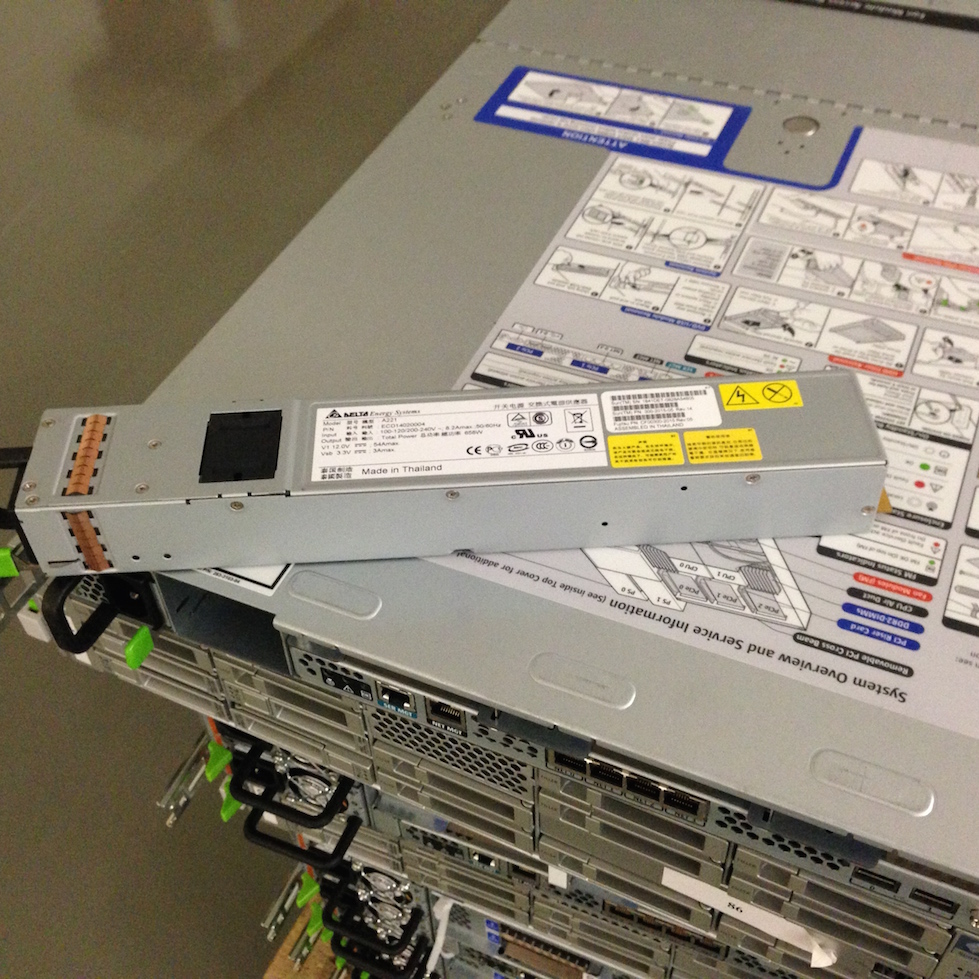

- 1U PSU:

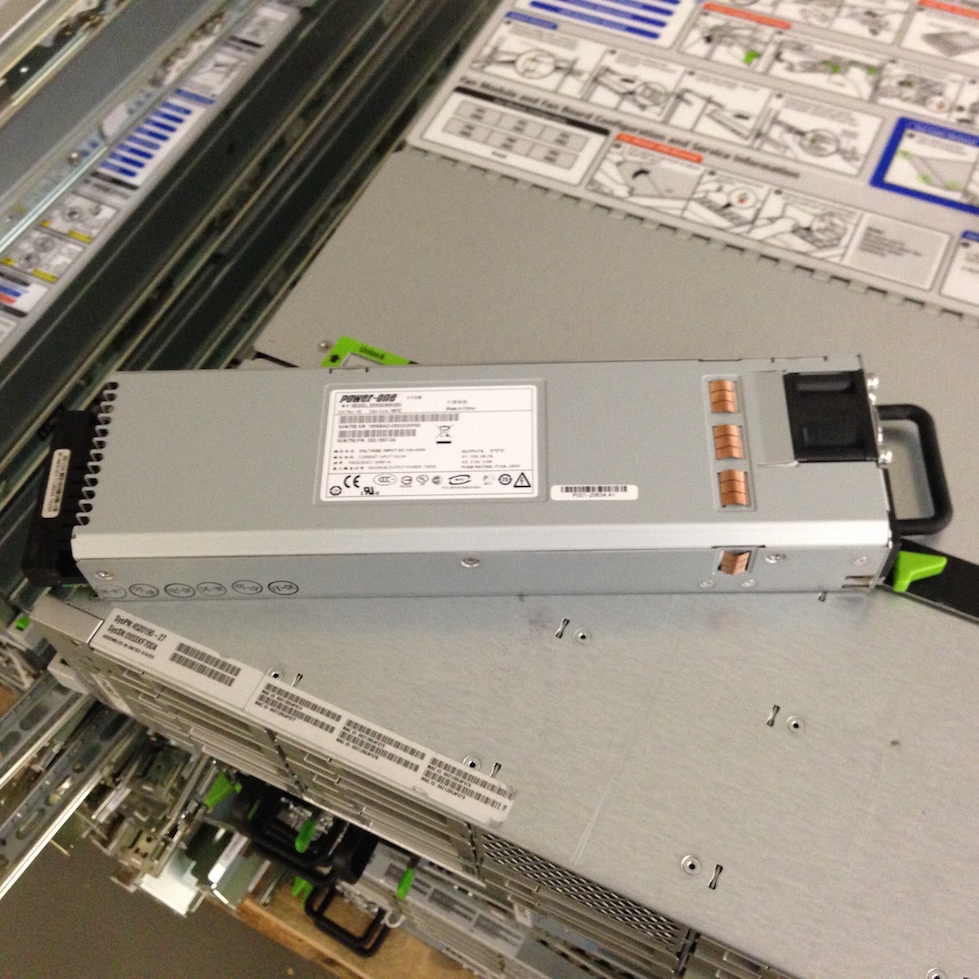

- 2U PSU:

| I | Attachment | History | Action | Size | Date | Who | Comment |
|---|---|---|---|---|---|---|---|
| | IMG_6968.jpg | r2 r1 | manage | 470.0 K | 2014-11-06 - 15:10 | MiguelGila | 1U PSU |
| | IMG_6970.jpg | r1 | manage | 425.2 K | 2014-11-06 - 15:11 | MiguelGila | 2U PSU |

Topic revision: r23 - 2014-11-14 - GeorgeBrown
