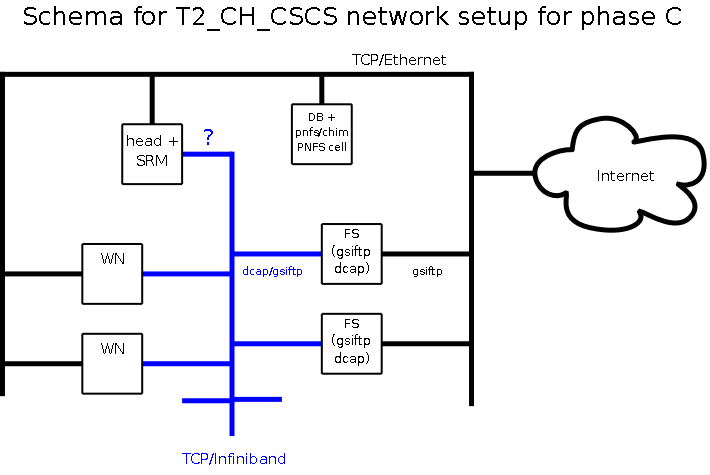

Schema and discussion for network / dCache setup in Phase C

network/dCache services schema

This will be updated as the discussion progresses.

basic situation

Since computing nodes have more and more cores (our current system has 4 CPUs = 16 cores per node), network bandwidth and local disks are becoming limiting factors. In blades there is often only space for 2 disks, and usually only one Ethernet connection is attached. For this reason, we want to use the (now affordable) InfiniBand technology for the main internal data flows. Worker nodes (WNs) will use a shared file system (Lustre) for scratch, and we also want to attach the storage element (SE) through TCP/IP over InfiniBand.

On our current system, we see heavy WN-to-SE traffic via dcap, and also (mainly for ATLAS) heavy stage-in activity via dcap (dccp) and gsiftp. ATLAS has meanwhile said that they would refrain from using dccp, because this caused havoc (usually we allow a large number of dcap movers per pool).

Our current dCache system runs on 28 Sun X4500 ("Thumper") Solaris systems (24 TB each) as file servers, with two Linux nodes for the dCache services. Every Thumper also runs dcap and gsiftp doors. These have performed very well; we never saw load-related problems. Now we will replace these servers with 28 units of the successor model, the Sun X4540 ("Thor"), with 48 TB each.

All machines, file servers and WNs alike, will have Ethernet interfaces as well. We want the dcap communication between the WNs (960 cores) and the storage to go via InfiniBand. But the system must also be reachable from the internet for remote SRM/gsiftp transfers. SRM and gsiftp also need to be available from the worker nodes for stage-in/stage-out by jobs.

Preliminary discussion by mail

2009-07-21 Patrick Fuhrmann to DF

Hi Derek,

Making a long story short: having two interfaces, with clients coming in through both of them, is very troublesome and is currently not possible in all cases. So it is important to know which protocols you intend to use from inside and from outside.

The only protocol which has recently been enabled for the two-interface use case is GridFTP. The GridFTP mover in the pool will (in server passive mode) send the IP address of the correct interface to the client. dcap/xroot, however, will return the IP address of the primary interface only.

On the translation of the SURL to the TURL, done by the SRM: the TURL is composed from the reverse lookup of the IP address of the door. If there are multiple interfaces, dCache will prefer the external address to a '192....'-like address. So the TURL is difficult to determine.

This is the current situation. For xroot and dcap it seems (after talking to the experts) that we can get this improved before the golden release (1.9.5), which is due at the end of September. For the SRM part we need to investigate; this is very unlikely to be available in 1.9.5.

Hope this helps a little in deciding. If you like, we can have a short phone conference this week on the matter, allowing you to describe the use case in more depth.

cheers, Patrick

2009-07-21 DF to Patrick Fuhrmann

Based on your mail, I think one solution could be to have dcap doors on all file servers which only respond on their InfiniBand addresses, i.e. only to the InfiniBand network. We also seem to be able to keep the gsiftp doors on the file servers, since they will be able to serve the worker nodes via InfiniBand as well as the outside via Ethernet. This would be fantastic, and just what we need.

The SRM seems to be a bit problematic... So we may have the situation where a worker node client using SRM receives the Ethernet address of the file server. This would still work, but the transfer would then go through the Ethernet connection between the WN and the pool servers.

-- DerekFeichtinger - 22 Jul 2009

| Attachment | Version | Size | Date | Who | Comment |
|---|---|---|---|---|---|
| t2cscs-network-phaseC.png | r1 | 14.5 KB | 2009-07-22 - 09:56 | DerekFeichtinger | |
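The interface-selection behaviour described in the mail exchange above can be illustrated with a small model. This is a hypothetical sketch, not dCache code: the function names, the convention that the first listed interface is the "primary" one, and all IP addresses are assumptions made for illustration, and the SRM's reverse DNS lookup is reduced to a simple choice between addresses. It shows why a worker node on the InfiniBand subnet gets a usable address from a GridFTP mover, but not necessarily from dcap or from an SRM-generated TURL.

```python
import ipaddress
from typing import Sequence

# Hypothetical model of how a pool/door picks the address it hands to a client.
# Interfaces are "address/prefix" strings; by assumption the first entry is
# the host's primary interface.

# RFC 1918 private ranges, i.e. the "192....-like" addresses from the mail.
PRIVATE_NETS = [
    ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_private(addr: ipaddress.IPv4Address) -> bool:
    """True if addr lies in one of the RFC 1918 ranges."""
    return any(addr in net for net in PRIVATE_NETS)


def gridftp_passive_address(interfaces: Sequence[str], client_ip: str) -> str:
    """GridFTP-style: return the interface on the client's subnet, if any;
    otherwise fall back to the primary interface."""
    client = ipaddress.ip_address(client_ip)
    for spec in interfaces:
        iface = ipaddress.ip_interface(spec)
        if client in iface.network:
            return str(iface.ip)
    return str(ipaddress.ip_interface(interfaces[0]).ip)


def dcap_address(interfaces: Sequence[str]) -> str:
    """dcap/xroot-style: always return the primary interface."""
    return str(ipaddress.ip_interface(interfaces[0]).ip)


def srm_turl_door_address(interfaces: Sequence[str]) -> str:
    """SRM-style: prefer an external (non-RFC 1918) door address for the TURL."""
    addrs = [ipaddress.ip_interface(spec).ip for spec in interfaces]
    external = [a for a in addrs if not is_private(a)]
    return str((external or addrs)[0])


# Hypothetical file server: Ethernet (external, primary) plus IPoIB (private).
FILE_SERVER = ["198.51.100.20/24", "192.168.10.20/24"]
WN_IB = "192.168.10.101"  # hypothetical worker node on the InfiniBand subnet

print(gridftp_passive_address(FILE_SERVER, WN_IB))  # the InfiniBand address
print(dcap_address(FILE_SERVER))                     # the Ethernet address
print(srm_turl_door_address(FILE_SERVER))            # the Ethernet address

# DF's proposal: a dcap door bound only to the IPoIB address can only hand out
# that address, modelled here as a door whose sole interface is the IPoIB one.
print(dcap_address(["192.168.10.20/24"]))            # the InfiniBand address
```

Under these assumptions, a WN on the InfiniBand subnet that obtains its TURL from the SRM is pointed at the file server's Ethernet address, which matches DF's expectation that such transfers would still work but go over Ethernet.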

Topic revision: r1 - 2009-07-22 - DerekFeichtinger
