Service Card for CREAM-CE/ARGUS

Definition

The CREAM-CE system is the entry point for jobs in a WLCG site. Users submit jobs to the CREAM-CEs, which are responsible for interacting with the internal queue and scheduling systems of the site. ARGUS is an authorization and authentication framework used by the CREAM-CEs (and, in the future, by other grid services) to authorize and authenticate user interactions with the site elements. In short, the workflow is:

- The user submits a job from his/her local User Interface (UI) to any of the CREAM-CEs at CSCS.

- In the submit process, before accepting the job, CREAM-CE queries ARGUS to find out whether the user is authorized to use the resources specified (job submit).

- If the user is accepted, CREAM-CE allows the job to enter and submits it to the batch system (SLURM).

- cream01.lcg.cscs.ch

- cream02.lcg.cscs.ch

- cream03.lcg.cscs.ch

- cream04.lcg.cscs.ch (only CREAM-CE, not in production as defined in GOCDB)

argus.lcg.cscs.ch

Currently, these machines run on 2 IBM servers and 2 VMs with:

- Scientific Linux 6.4 x86_64

- SL IB stack.

- UMD 3 CREAM-CE and ARGUS software

- GPFS 3.5.x client

Operations

Client tools

- For CREAM-CE, the available client tools are based on the regular glite-ce-job-* executables and are located in the UI (ui.lcg.cscs.ch).

- For ARGUS, the pap administration console makes it possible to control the behaviour of a live Argus service as well as to tune the settings for the next time the service restarts. It is located in /usr/bin/pap-admin and can be run as root from each of the argus servers, or from any UI when logged in as a user with one of the following certificates loaded:

    "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=hosts/C=CH/ST=Zuerich/L=Zuerich/O=ETH Zuerich/CN=argus01.lcg.cscs.ch" : ALL
    "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=hosts/C=CH/ST=Zuerich/L=Zuerich/O=ETH Zuerich/CN=argus02.lcg.cscs.ch" : ALL
    "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Miguel Angel Gila Arrondo" : ALL
    "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Pablo Fernandez" : ALL

Testing

CREAM-CE

To test the CREAM-CE service we have developed a script, chk_CREAMCE, that contacts the CREAM, submits a job and fetches the output once it is done. To use it, log in to the UI (ui.lcg.cscs.ch) and, with a valid certificate loaded, run /opt/cscs/bin/chk_CREAMCE:

    $ /opt/cscs/bin/chk_CREAMCE -c cream03 -q cream-slurm-cscs -r 'hostname; sleep 10s; lcg-ls -l srm://storage01.lcg.cscs.ch/pnfs/lcg.cscs.ch/dteam/'
    *******************************************
    CREAM-CE Testing Tools
    *******************************************
    Version: 0.90
    Running as user: miguelgi
    Current dir: /home/miguelgi/testjobs/jobs/gcc_thousand_jobs
    Info level: 0
    TestID: ui.lcg.cscs.ch-1208071153639735000
    BDII: bdii.lcg.cscs.ch
    User certificate: /DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Miguel Angel Gila Arrondo/CN=proxy
    VO: dteam
    CREAM-CEs to query: cream03
    Job type: remote command
    *** Starting job in 5 seconds, press Ctrl+C to cancel ***
    [INFO] Creating the file with the job description
    [INFO] Creating temporary job =remote command= file in /tmp/job_test.17301.jdl
    [INFO] Processing host cream03
    [INFO] The selected queue (cream-slurm-cscs) does not match the published queue for this vo on this host (cream03.lcg.cscs.ch:8443/cream-slurm-other).
    [INFO] Selected Queue chosen for this host: cream-slurm-cscs
    [INFO] Launching test job to cream03
    *** Submitting job in 5 seconds, press Ctrl+C to cancel ***
    [INFO] Submitting job...
    [INFO] The JOBID is this: https://cream03.lcg.cscs.ch:8443/CREAM203229534
    [INFO] Waiting for the job to be finished. [................................]
    [INFO] Job done
    [INFO] The job finished with the [DONE-OK] status.
    [DONE-OK]
    Source: gsiftp://cream03.lcg.cscs.ch/cream_localsandbox/data/dteam/_DC_com_DC_quovadisglobal_DC_grid_DC_switch_DC_users_C_CH_O_ETH_Zuerich_CN_Miguel_Angel_Gila_Arrondo_dteam_Role_NULL_Capability_NULL_dteam044/20/CREAM203229534/OSB//
    Dest: file:///tmp/
    stderr.out -> stderr.ui.lcg.cscs.ch-1208071153639735000.7441
    Source: gsiftp://cream03.lcg.cscs.ch/cream_localsandbox/data/dteam/_DC_com_DC_quovadisglobal_DC_grid_DC_switch_DC_users_C_CH_O_ETH_Zuerich_CN_Miguel_Angel_Gila_Arrondo_dteam_Role_NULL_Capability_NULL_dteam044/20/CREAM203229534/OSB//
    Dest: file:///tmp/
    stdout.out -> stdout.ui.lcg.cscs.ch-1208071153639735000.7442
    [INFO] Contents of stdout:
    --------------------------------------------------------------------
    wn47.lcg.cscs.ch
    -rw-r--r-- 1 2 2 51200 ONLINE /pnfs/lcg.cscs.ch/dteam//automatic_test-20120616-1331-22850
     * Checksum: 15552c72 (adler32)
     * Space tokens: 21171483
    -rw-r--r-- 1 2 2 1458063982 ONLINE /pnfs/lcg.cscs.ch/dteam//SPEC2006_v11.tar.bz2
     * Checksum: 3e51665d (adler32)
     * Space tokens: 13913515
    -rw-r--r-- 1 2 2 801512 ONLINE /pnfs/lcg.cscs.ch/dteam//todelete1
     * Checksum: 2d455bf4 (adler32)
     * Space tokens: 11050745
    drwxrwxr-x 1 2 2 0 UNKNOWN /pnfs/lcg.cscs.ch/dteam//generated
    --------------------------------------------------------------------
    [INFO] Contents of stderr:
    --------------------------------------------------------------------
    --------------------------------------------------------------------
    [INFO] Cleaning up /tmp/stdout.ui.lcg.cscs.ch-1208071153639735000.7442
    [INFO] Cleaning up /tmp/stderr.ui.lcg.cscs.ch-1208071153639735000.7441
    [INFO] Done with cream03
    [INFO] The final result for cream03 is [DONE-OK]
    [INFO] Cleaning up /tmp/job_test.17301.jdl
    [INFO] Cleaning up all ui.lcg.cscs.ch-1208071153639735000 files

Additionally, more complex tests can be executed. With /opt/cscs/bin/chk_CREAMCE -h you can get a list of the options and tests available.

A couple of useful examples:

$ chk_CREAMCE -q cream-slurm-cscs -c cream04.lcg.cscs.ch -r 'echo "*** CURRENT DIR"; pwd; ls -lhart;echo "*** HOME"; echo "$HOME"; ls -lhart "$HOME"; env |sort'

$ chk_CREAMCE -q cream-slurm-cscs -c cream02.lcg.cscs.ch -r 'echo "*** CURRENT DIR"; pwd; ls -lhart;echo "*** HOME"; echo "$HOME"; ls -lhart "$HOME"; env |sort'

In addition to this, we can see whether the services are up using the grid-service or grid-service2 scripts and/or check the system logs from any of the cream hosts.

    # grid-service2 status
    ARGUS-CREAM-CE hybrid node (SLURM)
    ----------------------------------------------
    Running status on ARGUS service.
    Checking /etc/grid-security/gridmapdir... OK
    Checking /opt/edg/var/info... OK
    Checking /var/log/apel... OK
    *** ARGUS-PAP daemon ***
    PAP running!
    *** ARGUS-PDP daemon ***
    argus-pdp is running...
    *** ARGUS-PEP daemon ***
    argus-pepd is running...
    Running status on CREAM-CE service.
    Checking /etc/grid-security/gridmapdir... OK
    Checking /opt/edg/var/info... OK
    Checking /var/log/apel... OK
    Checking if GPFS is mounted... OK
    mysqld (pid 16434) is running...
    *** glite-ce-blah-parser:
    BNotifier (pid 16478) is running...
    BUpdaterSLURM (pid 16490) is running...
    *** tomcat6:
    tomcat6 (pid 16557) is running... [ OK ]
    *** glite-lb-locallogger:
    glite-lb-logd running as 16616
    glite-lb-interlogd running as 16643
    globus-gridftp-server (pid 16666) is running...
    BDII Runnning [ OK ]
    slurmd (pid 16764) is running...

ARGUS

As with the CREAM-CE service, we have developed a script, chk_ARGUS, that runs basic tests on the ARGUS service. From the UI (ui.lcg.cscs.ch), with the certificate loaded, run:

    $ chk_ARGUS
    *******************************************
    ARGUS Testing Tools
    *******************************************
    Version: 0.1
    Running as user: miguelgi
    Current dir: /home/miguelgi
    Info level: 0
    TestID: ui.lcg.cscs.ch-1208071156283764000
    User certificate: /DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Miguel Angel Gila Arrondo/CN=proxy
    ARGUS Server to query: cream01.lcg.cscs.ch
    ARGUS QUERY URL: https://cream01.lcg.cscs.ch:8154/authz
    *** Starting job in 5 seconds, press Ctrl+C to cancel ***
    [INFO] Testing ARGUS glexec
    Resource: http://authz-interop.org/xacml/resource/resource-type/wn
    Decision: Permit
    Obligation: http://glite.org/xacml/obligation/local-environment-map/posix (caller should resolve POSIX account mapping)
    Username: dteam044
    Group: dteam
    Secondary Groups: dteam
    [INFO] Done with glexec on cream01.lcg.cscs.ch
    [INFO] The result for GLEXEC on cream01.lcg.cscs.ch seems to be [OK]
    [INFO] Testing ARGUS CREAM-CE
    Resource: http://lcg.cscs.ch/xacml/resource/resource-type/creamce
    Decision: Permit
    Obligation: http://glite.org/xacml/obligation/local-environment-map/posix (caller should resolve POSIX account mapping)
    Username: dteam044
    Group: dteam
    Secondary Groups: dteam
    [INFO] Done with creamce on cream01.lcg.cscs.ch
    [INFO] The result for CREAMCE on cream01.lcg.cscs.ch seems to be [OK]

Additionally, we can use grid-service and grid-service2 to test the status of the services:

    # grid-service2 status
    ARGUS-CREAM-CE hybrid node (SLURM)
    ----------------------------------------------
    Running status on ARGUS service.
    Checking /etc/grid-security/gridmapdir... OK
    Checking /opt/edg/var/info... OK
    Checking /var/log/apel... OK
    *** ARGUS-PAP daemon ***
    PAP running!
    *** ARGUS-PDP daemon ***
    argus-pdp is running...
    *** ARGUS-PEP daemon ***
    argus-pepd is running...
    Running status on CREAM-CE service.
    Checking /etc/grid-security/gridmapdir... OK
    Checking /opt/edg/var/info... OK
    Checking /var/log/apel... OK
    Checking if GPFS is mounted... OK
    mysqld (pid 16434) is running...
    *** glite-ce-blah-parser:
    BNotifier (pid 16478) is running...
    BUpdaterSLURM (pid 16490) is running...
    *** tomcat6:
    tomcat6 (pid 16557) is running... [ OK ]
    *** glite-lb-locallogger:
    glite-lb-logd running as 16616
    glite-lb-interlogd running as 16643
    globus-gridftp-server (pid 16666) is running...
    BDII Runnning [ OK ]
    slurmd (pid 16764) is running...

Finally, we can check that there are policies stored on the pap daemon:

# pap-admin lp -all

default (local):

resource "http://authz-interop.org/xacml/resource/resource-type/wn" {

obligation "http://glite.org/xacml/obligation/local-environment-map" {

}

action "http://glite.org/xacml/action/execute" {

rule permit { vo="cms" }

rule permit { vo="atlas" }

rule permit { vo="dech" }

rule permit { vo="dteam" }

rule permit { vo="hone" }

rule permit { vo="lhcb" }

rule permit { vo="ops" }

rule permit { vo="vo.gear.cern.ch" }

}

}

resource "http://lcg.cscs.ch/xacml/resource/resource-type/creamce" {

obligation "http://glite.org/xacml/obligation/local-environment-map" {

}

action ".*" {

rule permit { vo="cms" }

rule permit { vo="atlas" }

rule permit { vo="dech" }

rule permit { vo="dteam" }

rule permit { vo="hone" }

rule permit { vo="lhcb" }

rule permit { vo="ops" }

rule permit { vo="vo.gear.cern.ch" }

}

}

Failover check

Checking logs

Logs are in /var/log/:

- For the CREAM-CE service, in /var/log/cream

- For the ARGUS service, in /var/log/argus/{pap,pdp,pepd}:

    /var/log/argus/pap/pap-standalone.log
    /var/log/argus/pdp/access.log
    /var/log/argus/pdp/process.log
    /var/log/argus/pepd/audit.log
    /var/log/argus/pepd/process.log

- Other important logs are:

    /var/log/globus-gridftp.log
    /var/log/messages
    /var/log/tomcat6/

Set up

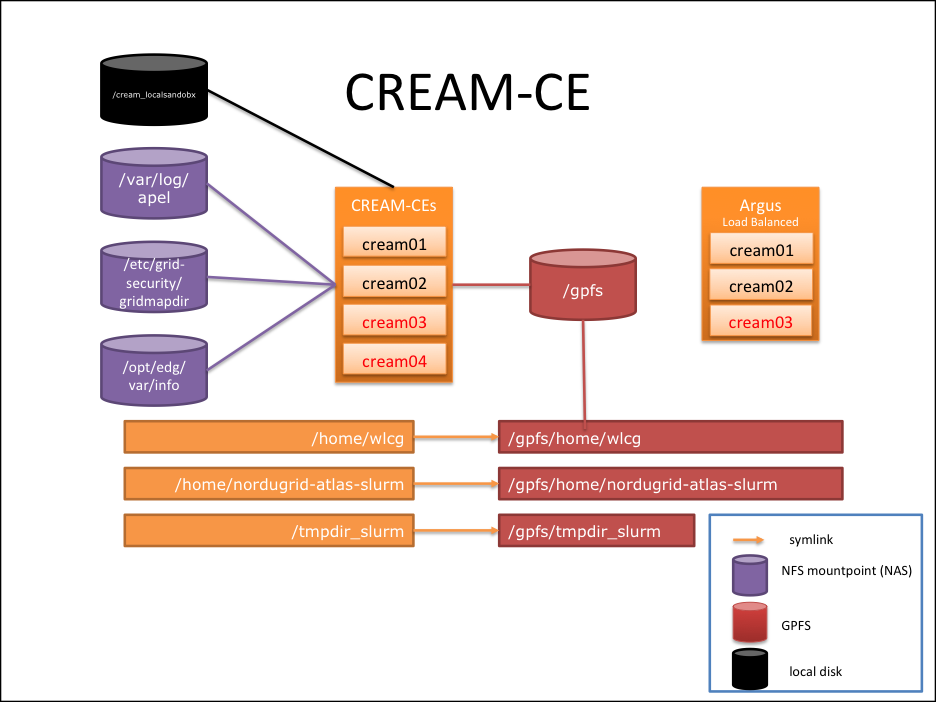

Dependencies (other services, mount points, ...)

For this service to work properly, the machines depend on the LRMS service, the NAS and GPFS. Therefore, you must check that these filesystems are mounted:

    nas.lcg.cscs.ch:/ifs/LCG/shared/gridmapdir on /etc/grid-security/gridmapdir type nfs (rw,bg,proto=tcp,rsize=32768,wsize=32768,soft,intr,nfsvers=3,addr=148.187.66.70)
    nas.lcg.cscs.ch:/ifs/LCG/shared/vo_tags on /opt/edg/var/info type nfs (rw,bg,proto=tcp,rsize=32768,wsize=32768,soft,intr,nfsvers=3,addr=148.187.66.66)
    nas.lcg.cscs.ch:/ifs/LCG/shared/apelaccounting on /var/log/apel type nfs (rw,bg,proto=tcp,rsize=32768,wsize=32768,soft,intr,nfsvers=3,addr=148.187.66.67)
    /dev/gpfs on /gpfs type gpfs (rw,nomtime,dev=gpfs)

- Also the scratch file system from GPFS has to be mounted and linked:

- /home/nordugrid-atlas-slurm -> /gpfs/home/nordugrid-atlas-slurm

- /home/wlcg -> /gpfs/home/wlcg

- /tmpdir_slurm -> /gpfs/tmpdir_slurm
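The mount check described above could be scripted roughly as follows. This is a minimal sketch, not an existing site tool: the function name check_mounts is hypothetical, and it assumes a mount table in the /proc/mounts format (device, mount point, filesystem type, ...). The sample table is made-up data used only to show the behaviour.

```shell
#!/bin/sh
# Hypothetical helper (not part of the site tooling): report which of the
# required mount points are present in a /proc/mounts-style table.
# Usage: check_mounts MOUNTS_FILE MOUNTPOINT...
check_mounts() {
    cm_table="$1"; shift
    cm_missing=0
    for cm_mp in "$@"; do
        # Field 2 of the table is the mount point; require an exact match.
        if awk -v mp="$cm_mp" '$2 == mp { found = 1 } END { exit !found }' "$cm_table"; then
            echo "OK      $cm_mp"
        else
            echo "MISSING $cm_mp"
            cm_missing=1
        fi
    done
    return "$cm_missing"
}

# Demonstration on a small sample table (assumed data, not real output).
sample=$(mktemp)
cat > "$sample" <<'EOF'
nas.lcg.cscs.ch:/ifs/LCG/shared/gridmapdir /etc/grid-security/gridmapdir nfs rw 0 0
/dev/gpfs /gpfs gpfs rw 0 0
EOF
check_mounts "$sample" /etc/grid-security/gridmapdir /gpfs /var/log/apel \
    || echo "at least one required filesystem is not mounted"
rm -f "$sample"
```

On a real CE the same function would be pointed at the live table, for example check_mounts /proc/mounts /etc/grid-security/gridmapdir /opt/edg/var/info /var/log/apel /gpfs.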

Redundancy notes

There is no redundancy or failover at the CE level.

Installation

- These are the installation steps for the software:

    # This fetches packages from SL official repos AND phoenix repo.
    yum install yum-priorities yum-protectbase hwloc slurm-plugins slurm slurm-munge munge-devel munge munge-libs
    # This fetches packages from SL official repos, phoenix repo AND epel.
    yum install --enablerepo=epel emi-argus emi-cream-ce emi-slurm-client

- YAIM is done as follows:

/opt/glite/yaim/bin/yaim -c -s /opt/cscs/siteinfo/site-info.def -n creamCE -n SLURM_utils -n ARGUS_server

This assumes that the correct site-info.def and related yaim configuration files are in place.

- The ARGUS configuration is performed similarly to the Torque/Moab case, specifically:

    # pap-admin add-pap centralbanning argus.cern.ch "/DC=ch/DC=cern/OU=computers/CN=argus.cern.ch"
    # pap-admin enable-pap centralbanning
    # pap-admin set-paps-order centralbanning default
    # pap-admin refresh-cache centralbanning
    # pap-admin apf /root/argus_policies/cream04.policies.cscs.log
    # pap-admin lp -all

Please note the name of the file used to populate the default policy.

- The file /usr/libexec/slurm_local_submit_attributes.sh needs to be modified to make sure that jobs submitted to the CREAM by specific users (ops, dteam) arrive at the correct reservations. This is an example that may have changed; the file is currently updated via cfengine:

    #!/bin/sh
    # OPS jobs
    REGEX="ops[0-9][0-9][0-9]"
    USER=`whoami`
    if [[ ( $USER =~ $REGEX ) || ( $USER == 'opssgm' ) || ( $USER == 'cmssgm' ) ]] ; then
    #if [[ ( $USER =~ $REGEX ) || ( $USER == 'plgridsgm' ) || ( $USER == 'opssgm' ) ]] ; then
      #echo "#SBATCH --time=2-00:00:00"
      echo "#SBATCH --reservation=priority_jobs"
    fi
    #echo `env` >> /var/log/slurm_local_submit_attributes.sh.log

    # DTEAM
    REGEX="dteam[0-9][0-9][0-9]"
    USER=`whoami`
    if [[ ( $USER =~ $REGEX ) ]] ; then
      # This extracts the queue from the SUDO command and assigns the dteam reservation if required
      QUEUE=$(echo $SUDO_COMMAND |awk -F'-q ' '{print $2}' | sed 's/ -n.*$//g' 2>&1)
      if [ "$QUEUE" == "cscs" ]; then
        echo "#SBATCH --reservation=priority_jobs"
      fi
    fi
    #if [[ ( $USER =~ $REGEX ) ]] ; then
    #  echo "#SBATCH --reservation=dteam"
    #fi

    USERDN=$(echo $SUDO_COMMAND |awk -F'-u ' '{print $2}' | sed 's/ -r.*$//g')
    HN=$(hostname -s)
    COMMENT="\"$HN,$USERDN\""
    echo "#SBATCH --workdir=$HOME"
    #echo "#SBATCH --comment=$(hostname -s),${USERDN}"
    #echo "#SBATCH --comment=$(hostname -s)"
    echo "#SBATCH --comment=$COMMENT"
    #if [ -n "$GlueHostMainMemoryRAMSize_Min" ]
    #then
    #  echo "#SBATCH --mem=$GlueHostMainMemoryRAMSize_Min"
    #fi

- The file /usr/local/bin/sacct must point to /usr/bin/sacct:

    # ls -lah /usr/local/bin/sacct
    lrwxrwxrwx 1 root root 14 Jul 12 15:23 /usr/local/bin/sacct -> /usr/bin/sacct

- Please be sure that munge is up and running, otherwise start it:

    # service munge start
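To make the awk/sed pipelines in slurm_local_submit_attributes.sh easier to follow, here is a small sketch that applies the same two extractions to a sample string. The function names extract_queue and extract_userdn are hypothetical, and the layout of the sample SUDO_COMMAND value (option order, paths, DN) is an assumption made for illustration only; the real value is set by sudo when BLAH invokes the submit script.

```shell
#!/bin/sh
# Hypothetical wrappers around the two pipelines used in the site script.

# Queue: text after "-q ", cut at the following " -n" option.
extract_queue() {
    printf '%s\n' "$1" | awk -F'-q ' '{print $2}' | sed 's/ -n.*$//g'
}

# User DN: text after "-u ", cut at the following " -r" option.
extract_userdn() {
    printf '%s\n' "$1" | awk -F'-u ' '{print $2}' | sed 's/ -r.*$//g'
}

# Assumed example value; the real SUDO_COMMAND layout may differ.
sample_cmd='/usr/libexec/slurm_submit.sh -u /DC=example/CN=Jane Doe -r yes -q cscs -n 1'

echo "queue:  $(extract_queue "$sample_cmd")"
echo "userdn: $(extract_userdn "$sample_cmd")"
```

Note that both extractions depend on the order of the options: the queue is only isolated if "-n" follows "-q", and the DN only if "-r" follows "-u".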

Customisations

Job Working Directory

By default the job wrapper creates a directory for the job in the home directory of the user (e.g. /home/wlcg/prdcms20/home_cre01_847961460/CREAM84796146). If the user doesn't change to $TMPDIR, the whole job will run there. Because we want jobs to run within $TMPDIR (on GPFS), we need to change the following file on the Worker Nodes: /etc/glite/cp_1.sh

#!/bin/bash

# cp_1.sh: this is _sourced_ by the CREAM job wrapper

# This script should choose where to do a 'cd' before the job starts

# This is the default

#TIMESTAMP=$(date +%Y%m%d)

export TMPDIR="/gpfs/tmpdir_slurm/${SLURM_SUBMIT_HOST}/${SLURM_JOB_ID}"

export MYJOBDIR=${TMPDIR}

mkdir -p ${TMPDIR}

cd ${TMPDIR}

This file is sourced by the CREAM job wrapper and makes the job run in the $TMPDIR defined above (in this case under /gpfs/tmpdir_slurm/, which /tmpdir_slurm links to).

APEL parser

The directory /var/log/apel needs to be mounted from the NAS, since there is a script that runs after every job finishes (defined in the ServiceLRMS page) and writes information to this directory.

Other customisations

- File transfers between CE and WNs:

  - /cream_localsandbox/ is a local directory on the CREAMCE host. Transfers to/from WNs are via scp.

- Multiple CREAM CEs:

  - BLAH_JOBID_PREFIX must be unique, start with 'cr', end with '_' and be at most 6 characters long in total.

- Shared HOME:

  - /home/egee -> /gpfs/home/egee

- lbcd DNS Round Robin:

  - There is an iptables rule to allow lbcd traffic from the DNS servers:

        #lbcd port 4330
        -A INPUT -s 148.187.0.0/16 -p udp --dport 4330 -m state --state NEW -j ACCEPT

  - The lbcd service does not start automatically on boot, so it needs to be started by hand once the ARGUS service is working properly.
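The BLAH_JOBID_PREFIX constraints listed above (starts with 'cr', ends with '_', at most 6 characters) can be checked with a few lines of shell. This is a sketch only: the function name valid_blah_prefix is hypothetical, and it does not check uniqueness across CEs, which still has to be verified by hand.

```shell
#!/bin/sh
# Hypothetical check of a BLAH_JOBID_PREFIX candidate against the rules
# stated in this page: starts with "cr", ends with "_", max 6 characters.
valid_blah_prefix() {
    # Length check first, then the start/end pattern.
    [ "${#1}" -le 6 ] || return 1
    case "$1" in
        cr*_) return 0 ;;
        *)    return 1 ;;
    esac
}

# Try a few candidates; cre03_ matches the style of the clientID values
# seen in the logs on this page.
for prefix in cre03_ cream03_ xx01_ cre03; do
    if valid_blah_prefix "$prefix"; then
        echo "$prefix: valid"
    else
        echo "$prefix: invalid"
    fi
done
```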

Upgrade

- For minor updates, the system can be updated with yum, but making sure yaim is run afterwards:

    # yum update --enablerepo=umd*,epel
    # /opt/glite/yaim/bin/yaim -c -s /opt/cscs/siteinfo/site-info.def -n creamCE -n SLURM_utils -n ARGUS_server
    # cfagent -q

Monitoring

Nagios

If you open a browser and go to the Phoenix Monitoring Overview page, you can see all the pages required to monitor the service. Especially important are the following links:

- CSCS hosts in the Nagios DE monitoring page

- Nagios history for CSCS

- SLTOP

- CMS Tiny Panel

- ATLAS Tiny Panel

- LHCb Tiny Panel

Ganglia

In the Phoenix Monitoring Overview page you can find the Ganglia graphs for the CEs.

- /etc/cron.d/CSCS_ganglia generates the performance and job statistic charts:

    */2 * * * * root /root/CSCS_ganglia_graphs/ganglia_mysql_stats.pl &>/dev/null
    */2 * * * * root /opt/cscs/libexec/gmetric-scripts/ce/gmetric_cream_job_stats.bash 2>&1 >> /dev/null
    */1 * * * * root /opt/cscs/libexec/gmetric-scripts/ce/gmetric_ce_performance.sh >> /var/log/gmetric_ce_performance.log 2>&1

- /etc/cron.d/sltop2ganglia generates the sltop page and puts it into ganglia:/var/www/html/ganglia/sltop.html:

    1-59/2 * * * * root /root/sltop2ganglia/sltop2ganglia.sh >/tmp/sltop2ganglia.out 2>/tmp/sltop2ganglia.err

- /etc/cron.d/slurmerrors2ganglia generates the errors page and puts it into ganglia (same location as sltop):

    1-59/2 * * * * root /root/sltop2ganglia/slurmerros2ganglia.sh >/tmp/slurmerrors2ganglia.out 2>/tmp/slurmerrors22ganglia.err

Self Sanity / revival?

If any of the CREAM services is down, the safest thing to do is this:

- Check the system logs and look for errors.

- Make sure that all filesystems are mounted with the correct permissions.

- Stop the services with the command grid-service2 stop

- Wait a minute or two and start the services again with grid-service2 start

- Make sure all services are up and running by checking the logs.

Other?

Internal glite monitoring

The CREAM-CEs have an internal monitoring mechanism which may choose to disable submission if certain limits are exceeded. The limits can be found in the following file:

    cat ./etc/glite-ce-cream-utils/glite_cream_load_monitor.conf
    # Thresholds for glite_cream_load_monitor
    # -1 means no limit
    #
    Load1 = 40
    Load5 = 40
    Load15 = 20
    MemUsage = 95
    SwapUsage = 95
    FDNum = 500
    DiskUsage = 95
    FTPConn = 150
    FDTomcatNum = 800
    ActiveJobs = -1
    PendingCmds = -1
    16/10/14 16:51:58

Whether submission is currently enabled can be checked from the UI, e.g.

    glite-ce-service-info cream03.lcg.cscs.ch
    Interface Version = [2.1]
    Service Version = [1.16.2 - EMI version: 3.6.0-1.el6]
    Description = [CREAM 2]
    Started at = [Wed Oct 15 09:51:25 2014]
    Submission enabled = [NO]
    Status = [RUNNING]
    Service Message = [SUBMISSION_ERROR_MESSAGE]->[Threshold for Swap Usage: 95 => Detected value for Swap Usage: 99.99%
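For quick checks or scripted monitoring, the "Submission enabled" field can be pulled out of that output. The sketch below assumes the output format shown above; the function name submission_enabled is hypothetical, and the sample input is a shortened copy of the listing in this page rather than a live query.

```shell
#!/bin/sh
# Hypothetical filter: reads glite-ce-service-info output on stdin and
# prints the bracketed value of the "Submission enabled" field.
submission_enabled() {
    sed -n 's/^.*Submission enabled *= *\[\([^]]*\)\].*$/\1/p' | head -n 1
}

# Demonstration on a shortened copy of the output shown in this page.
state=$(submission_enabled <<'EOF'
Interface Version = [2.1]
Description = [CREAM 2]
Submission enabled = [NO]
Status = [RUNNING]
EOF
)
echo "Submission enabled: $state"
```

Against a live CE the filter would be used as glite-ce-service-info cream03.lcg.cscs.ch | submission_enabled, run from the UI with a valid proxy.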

Manuals

- Service Reference Card

- System Administrator Guide for CREAM for EMI-3 release (official)

- PAP-admin cli manual

- GLExec official TWiki: https://www.nikhef.nl/pub/projects/grid/gridwiki/index.php/GLExec

- ARGUS official TWiki: https://twiki.cern.ch/twiki/bin/view/EGEE/AuthorizationFramework

Issues

Information about issues found with this service, and how to deal with them.

Issue1

Sometimes, when the permissions of /etc/sudoers and /var/lib/tomcat5/webapps are not correct, problems might arise when running yaim or when starting up the services. A typical issue of this kind is that, after running yaim, the directory /var/lib/tomcat5/webapps/ce-cream is not created or is empty.

Please make sure that the user tomcat and the group tomcat can write to the /var/lib/tomcat5/webapps directory and that /etc/sudoers is owned by root.root with permissions 440.

    $ ls /var/lib/tomcat5/webapps/ -lha
    total 5.5M
    drwxrwxr-x 3 root tomcat 4.0K May 25 17:48 .
    drwxrwxr-x 6 root root 4.0K May 23 17:12 ..
    drwxr-xr-x 7 tomcat tomcat 4.0K May 25 17:48 ce-cream
    -rw-r--r-- 1 root root 5.4M May 25 17:48 ce-cream.war
    $ ls /etc/sudoers -lh
    -r--r----- 1 root root 1.2K May 23 16:34 /etc/sudoers

Issue2

When recreating the cream sandbox, problems could arise. Among others, the problem of detecting the lifetime of the proxy for certain users has happened at least once. Check this page: https://wiki.chipp.ch/twiki/bin/view/LCGTier2/IssueProblemDetectLifetimeProxy

Issue3

When installing the machine, make sure it is updated, as some important elements of the system (such as sudo) can make a CREAM installation invalid. For instance, it is known that the following version of the sudo package doesn't work with EMI CREAM: sudo-1.6.9p17-5.el5

Issue4

If you get the following message when running a job (glite-ce-job-status -L2):

    Cannot move ISB (retry_copy ${globus_transfer_cmd} gsiftp://ppcream01.lcg.cscs.ch/var/local_cream_sandbox/dteam/_DC_com_DC_quovadisglobal_DC_grid_DC_switch_DC_users_C_CH_O_ETH_Zuerich_CN_Miguel_Angel_Gila_Arrondo_dteam_Role_NULL_Capability_NULL_dteam010/44/CREAM443806849/ISB/ui.lcg.cscs.ch-1109131020156690000_remote_command.sh file:///tmpdir_pbs/59.pplrms02.lcg.cscs.ch/CREAM443806849/ui.lcg.cscs.ch-1109131020156690000_remote_command.sh): error: globus_ftp_client: the server responded with an error
    530 530-Login incorrect. : globus_gss_assist: Error invoking callout
    530-globus_callout_module: The callout returned an error
    530-an unknown error occurred
    530 End.]

Please make sure that the TIME AND DATE of all machines involved in the transfer (CREAM, WN, LRMS and, above all, ARGUS) are set correctly and that the ntpd service is set to start automatically. If the system time is incorrect, fetch-crl cannot work properly and, consequently, all gridftp transfers will fail.

Issue5

There have been issues with Cream-Argus interactions, reported by CMS. Only a percentage (5%) of the jobs is affected, but there is a high chance that this is a bug in Argus. See GGUS.

    05 Apr 2013 08:26:10,094 ERROR org.glite.ce.commonj.authz.argus.ArgusPEP (ArgusPEP.java:241) - (TP-Processor43) Missing property local.user.id
    java.lang.IllegalArgumentException: Missing property local.user.id

Two actions were taken:

- Increased the verbosity of Argus responses, from ERROR to INFO, in /etc/argus/pepd/logging.xml. There is no need to restart the service.

    <root>
      <level value="INFO" />
      <appender-ref ref="PROCESS" />
    </root>

- Disabled the Argus cache (it may have a bug) in /etc/argus/pepd/pepd.ini. A restart is needed: /etc/init.d/argus-pepd restart

    [PDP]
    pdps = http://localhost:8152/authz
    maximumCachedResponses = 0

Issue6

The CREAMs do not publish the correct OS version. This was the result of not having specified the version in the node definition, in which case Yaim uses the operating system version from site-info.def. Ensure that the following two lines are present in /opt/cscs/siteinfo/nodes/creamXX.lcg.cscs.ch:

    CE_OS_RELEASE="6.3"
    CE_OS_VERSION="Carbon"

Issue7

A cream machine other than cream01 is publishing available CPUs. The fix is already in CFengine, but on some systems (cream03) it does not work. The fix is to manually zero the entries:

    Oct 16 18:21 [root@cream03:/usr/lib]# grep 'GlueSubCluster.*CPU' /var/lib/bdii/gip/ldif/static-file-Cluster.ldif
    GlueSubClusterPhysicalCPUs: 0
    GlueSubClusterLogicalCPUs: 0

Issue8

Jobs requesting more than a single core would only be allocated one core. This was resolved in blah-parser-client version 1.20.4: http://www.eu-emi.eu/releases/emi-3-monte-bianco/updates/-/asset_publisher/5Na8/content/update-11-02-12-2013-v-3-6-1-1#BLAH_v_1_20_4

The relevant file is /usr/libexec/slurm_submit.sh. In the JDL, the field that specifies the number of cores is SMPGranularity.

Issue with hone jobs not running

Certificates for hone jobs were being rejected. The fix was to upgrade the packages bouncycastle-mail and voms-api-java3, then restart tomcat. It is OK to restart the tomcat daemon with the cream in production.

HowTo

How to certificates

Sometimes, when new certificates arrive, their keys are not in the correct format:

    # head -1 hostkey-pk8.pem
    -----BEGIN PRIVATE KEY-----
    # tail -1 hostkey-pk8.pem
    -----END PRIVATE KEY-----

whereas the correct header and footer are:

    # head -1 hostkey.pem
    -----BEGIN RSA PRIVATE KEY-----
    # tail -1 hostkey.pem
    -----END RSA PRIVATE KEY-----

This happens even if the file names do not have -pk8 at the end. So, in order to convert from pk8 (PKCS#8) to pk1 (PKCS#1), we need to issue the following commands:

    # mv hostkey.pem hostkey-pk8.pem
    # openssl rsa -in ./hostkey-pk8.pem -out ./hostkey.pem
    writing RSA key

Once you are sure that the keys are in pk1 format, you can proceed to update the certificates. The normal procedure to update the certificates on a CREAM-CE machine is the following:

- Shut down all the services.

- Update the certificates in /etc/grid-security

- Run YAIM

- Run CFENGINE

- Run grid-service restart

- Replace /etc/grid-security/hostcert.pem and /etc/grid-security/hostkey.pem with the new certificates, taking care of the owner and file permissions.

- Replace /etc/grid-security/tomcat-cert.pem and /etc/grid-security/tomcat-key.pem with copies of /etc/grid-security/hostcert.pem and /etc/grid-security/hostkey.pem, making sure that the owner and permissions are OK.

- Do the exact same thing as in the previous point in /home/glite/.certs/

- Restart the grid services with grid-service restart

The owners and permissions in /etc/grid-security/ and /home/glite/.certs/ have to be these:

    # ls -lha /home/glite/.certs
    total 16K
    drwxr-xr-x 2 root root 4.0K Jan 24 16:04 .
    drwxr-xr-x 5 root root 4.0K Jan 24 09:35 ..
    -r--r--r-- 1 glite glite 1.9K Jan 25 12:00 hostcert.pem
    -r-------- 1 glite glite 1.7K Jan 24 16:04 hostkey.pem
    # ls -lha /etc/grid-security/
    total 280K
    drwxr-xr-x 7 root root 4.0K Jan 24 16:04 .
    drwxr-xr-x 122 root root 12K Jan 25 08:04 ..
    -rw-r--r-- 1 root root 391 Dec 13 12:04 admin-list
    drwxr-xr-x 2 root root 40K Jan 25 12:42 certificates
    drwxr-xr-x 2 root root 4.0K Jan 25 12:11 certs_2011
    -rw-r--r-- 1 root root 2.5K Nov 11 15:34 grid-mapfile
    -rw------- 1 root root 20 Nov 11 15:34 gridftp.conf
    drwxr-xr-x 2 root root 160K Jan 25 11:07 gridmapdir
    -rw-r--r-- 1 root root 2.4K Nov 11 15:34 groupmapfile
    -rw-r--r-- 1 root root 67 Nov 11 15:34 gsi-authz.conf
    -rw-r--r-- 1 root root 302 Nov 11 15:34 gsi-pep-callout.conf
    -r--r--r-- 1 root root 1.9K Jan 24 16:04 hostcert.pem
    -r-------- 1 root root 1.7K Jan 24 16:04 hostkey.pem
    -rw-r--r-- 1 tomcat root 1.9K Jan 25 13:42 tomcat-cert.pem
    -r-------- 1 tomcat root 1.7K Jan 25 13:42 tomcat-key.pem
    drwxr-xr-x 2 root root 4.0K May 10 2011 voms
    -rw-r--r-- 1 root root 2.5K Nov 11 15:34 voms-grid-mapfile
    drwxr-xr-x 10 root root 4.0K Sep 8 11:26 vomsdir
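The header check from the start of this section (PKCS#8 versus PKCS#1 key format) can be automated before installing a new host key. The following is a minimal sketch: the function name key_format is hypothetical, it only inspects the first line of the PEM file, and the demonstration file contains a dummy header rather than a real key.

```shell
#!/bin/sh
# Hypothetical helper: classify a PEM private key file by its first line.
# Prints pkcs1, pkcs8 or unknown.
key_format() {
    case "$(head -n 1 "$1")" in
        "-----BEGIN RSA PRIVATE KEY-----") echo pkcs1 ;;
        "-----BEGIN PRIVATE KEY-----")     echo pkcs8 ;;
        *)                                 echo unknown ;;
    esac
}

# Demonstration with a dummy file that only carries the PKCS#8 markers.
demo_key=$(mktemp)
printf '%s\n' '-----BEGIN PRIVATE KEY-----' '(key material omitted)' \
    '-----END PRIVATE KEY-----' > "$demo_key"
echo "detected format: $(key_format "$demo_key")"
rm -f "$demo_key"
```

If the result for a real hostkey.pem is pkcs8, the openssl rsa conversion shown earlier in this section applies before the key is copied into place.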

How to ban user

If we need to ban a user from using resources authorized by Argus (such as CREAM-CEs), we need to do the following:

- Find the DN of the user's certificate. This is usually found in /var/log/messages or, as in this example, in the CREAM logs:

    # zgrep Pablo /var/log/cream/glite-ce-cream.log.9*
    /var/log/cream/glite-ce-cream.log.9:03 Sep 2012 23:57:22,340 INFO org.glite.ce.cream.delegationmanagement.DelegationPurger (DelegationPurger.java:72) - (TIMER) deleted expired delegation [delegationId=cd2bbb676d35e56473c34a579476f2dca193f317] [DN=/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Pablo Fernandez] [localUser=atlas004] [ExpirationTime=2012-09-03 14:24:17.0]

- Run the ban commands:

    # pap-admin ban subject "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Pablo Fernandez"
    # /etc/init.d/argus-pdp reloadpolicy; /etc/init.d/argus-pepd clearcache

- Now the user is banned.

    # pap-admin lp |head -n 10
    default (local):
    resource ".*" {
        action ".*" {
            rule deny { subject="CN=Pablo Fernandez,O=ETH Zuerich,C=CH,DC=users,DC=switch,DC=grid,DC=quovadisglobal,DC=com" }
        }
    }

To revert the ban, use un-ban:

# pap-admin un-ban subject "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=users/C=CH/O=ETH Zuerich/CN=Pablo Fernandez"

# /etc/init.d/argus-pdp reloadpolicy; /etc/init.d/argus-pepd clearcache

# pap-admin lp |head -n 10

default (local):

resource "http://authz-interop.org/xacml/resource/resource-type/wn" {

obligation "http://glite.org/xacml/obligation/local-environment-map" {

}

action "http://glite.org/xacml/action/execute" {

rule permit { vo="cms" }

rule permit { vo="atlas" }

How to Drain a CreamCE

There are two steps needed to prevent new jobs from getting in:

- Publish the queues in the CreamCE resource-bdii as Draining, by modifying /var/lib/bdii/gip/plugin/glite-info-dynamic-ce in cfengine for the specific CE

- Disable the submissions from the UI:

    glite-ce-disable-submission cream02.lcg.cscs.ch

How to track a job knowing its URL starting from WMS output

When a Grid job raises some issues and needs to be tracked starting from the output given by a WMS job submission, the following steps can be followed on a CREAM CE:

- Find the LRMS (Slurm in our case) job id (lrmsID) by looking in the blah log files for the job URL given in the WMS job submission output

- Query slurm with this job id to gather information about the job status, which WN it is running (or ran) on, etc.

[root@cream03:~]# cd /var/log/cream/accounting/

[root@cream03:accounting]# grep 'https://wmslb01.grid.hep.[...]' blahp.log-20131205

"timestamp=2013-12-05 09:25:48" "userDN=/O=GermanGrid/OU=DESY/CN=[...]"

"userFQAN=/hone/Role=NULL/Capability=NULL" "ceID=cream03.lcg.cscs.ch:8443/cream-slurm-other"

"jobID=https://wmslb01.grid.hep.ph.ic.ac.uk:9000/PxqWzJiKXFSIXyI94grsfg" "lrmsID=809032"

^^^^^^^^^^^^^

"localUser=7499" "clientID=cre03_207080303"

[root@cream03:accounting]# sacct -j 809032

JobID JobName Partition Account AllocCPUS State ExitCode

------------ ---------- ---------- ---------- ---------- ---------- --------

809032 cre03_207+ other hone 1 RUNNING 0:0

[root@cream03:accounting]# scontrol show job 809032

JobId=809032 Name=cre03_207080303

UserId=honeprd(7499) GroupId=hone(4005)

Priority=2677 Account=hone QOS=normal

JobState=RUNNING Reason=None Dependency=(null)

Requeue=1 Restarts=0 BatchFlag=1 ExitCode=0:0

RunTime=01:22:53 TimeLimit=1-12:00:00 TimeMin=N/A

SubmitTime=10:25:48 EligibleTime=10:25:48

StartTime=10:25:48 EndTime=Tomorr 22:25

PreemptTime=None SuspendTime=None SecsPreSuspend=0

Partition=other AllocNode:Sid=cream03:10873

ReqNodeList=(null) ExcNodeList=(null)

NodeList=wn03

BatchHost=wn03

NumNodes=1 NumCPUs=1 CPUs/Task=1 ReqS:C:T=*:*:*

MinCPUsNode=1 MinMemoryCPU=2000M MinTmpDiskNode=0

Features=(null) Gres=(null) Reservation=(null)

Shared=OK Contiguous=0 Licenses=(null) Network=(null)

Command=/tmp/cre03_207080303

WorkDir=/home/wlcg/honeprd

Comment=cream03,/O=GermanGrid/OU=DESY/CN=[...]
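The first step of this procedure (job URL to lrmsID) can be wrapped in a small function. This is a sketch under assumptions: the name lrms_id_for is hypothetical, each blahp accounting record is assumed to be written on a single line with the quoted "jobID=..." and "lrmsID=..." fields shown above, and the sample record uses a made-up URL.

```shell
#!/bin/sh
# Hypothetical helper: print the lrmsID recorded for a given job URL.
# Usage: lrms_id_for JOB_URL BLAHP_LOG
lrms_id_for() {
    # Fixed-string match on the quoted jobID field, then extract lrmsID.
    grep -F "\"jobID=$1\"" "$2" \
        | sed -n 's/.*"lrmsID=\([^"]*\)".*/\1/p' \
        | head -n 1
}

# Demonstration on a one-line sample record (assumed data).
demo_log=$(mktemp)
printf '%s\n' '"timestamp=2013-12-05 09:25:48" "ceID=cream03.lcg.cscs.ch:8443/cream-slurm-other" "jobID=https://wms.example.org:9000/abc123" "lrmsID=809032" "localUser=7499"' > "$demo_log"
echo "lrmsID: $(lrms_id_for https://wms.example.org:9000/abc123 "$demo_log")"
rm -f "$demo_log"
```

The printed id can then be passed to sacct -j or scontrol show job as in the transcript above.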

| ServiceCardForm | |

|---|---|

| Service name | CreamCE |

| Machines this service is installed in | cream[01,02,03,04] |

| Is Grid service | Yes |

| Depends on the following services | lrms, NAS, gpfs |

| Expert | Gianni Ricciardi |

| CM | CfEngine |

| Provisioning | Razor |

| I | Attachment | History | Action | Size | Date | Who | Comment |

|---|---|---|---|---|---|---|---|

| | CREAM_CE_Service.png | r2 r1 | manage | 130.5 K | 2013-11-20 - 01:02 | MiguelGila | |

Topic revision: r46 - 2014-11-25 - MiguelGila
